Setting the Scene

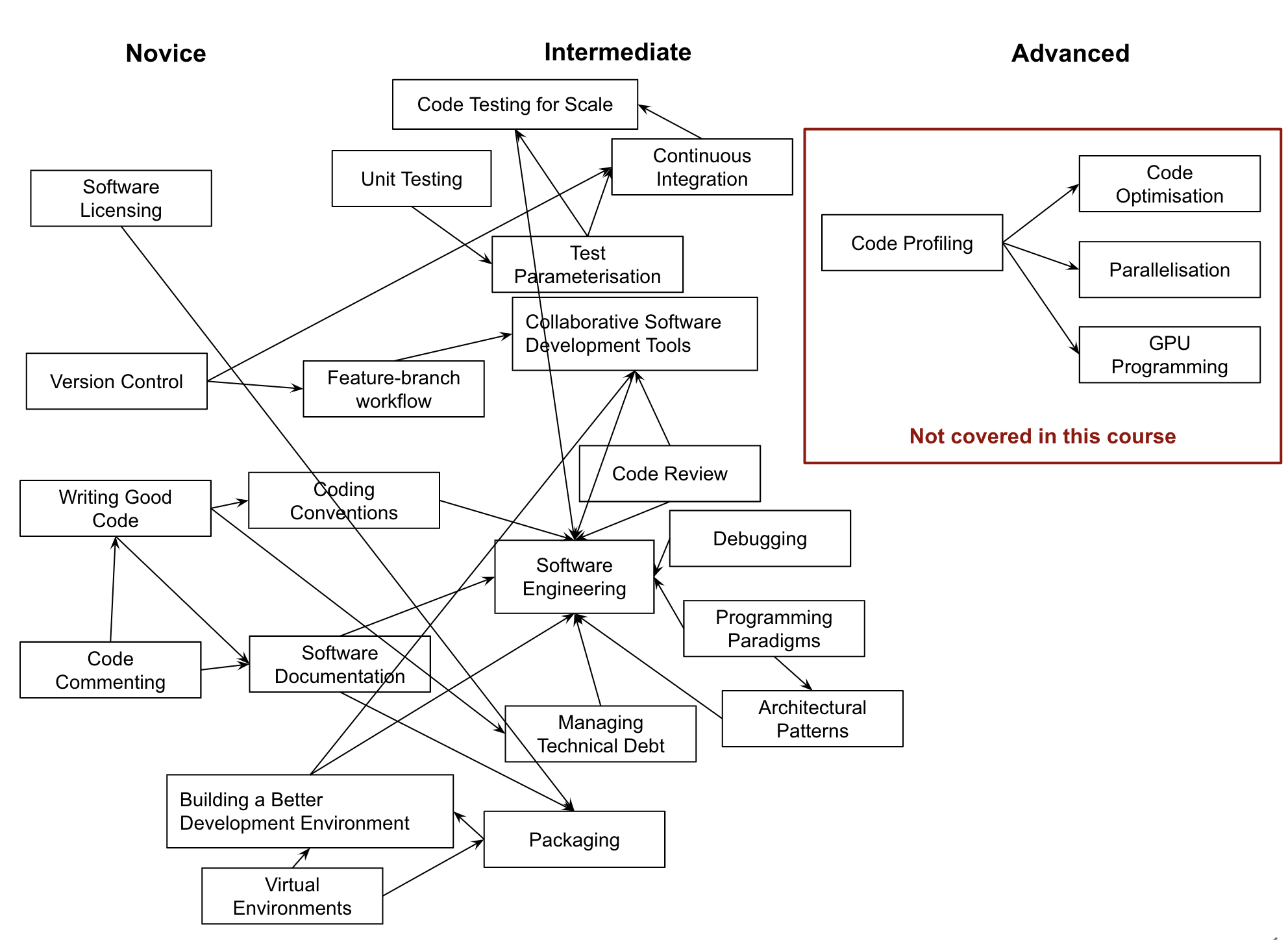

Overview

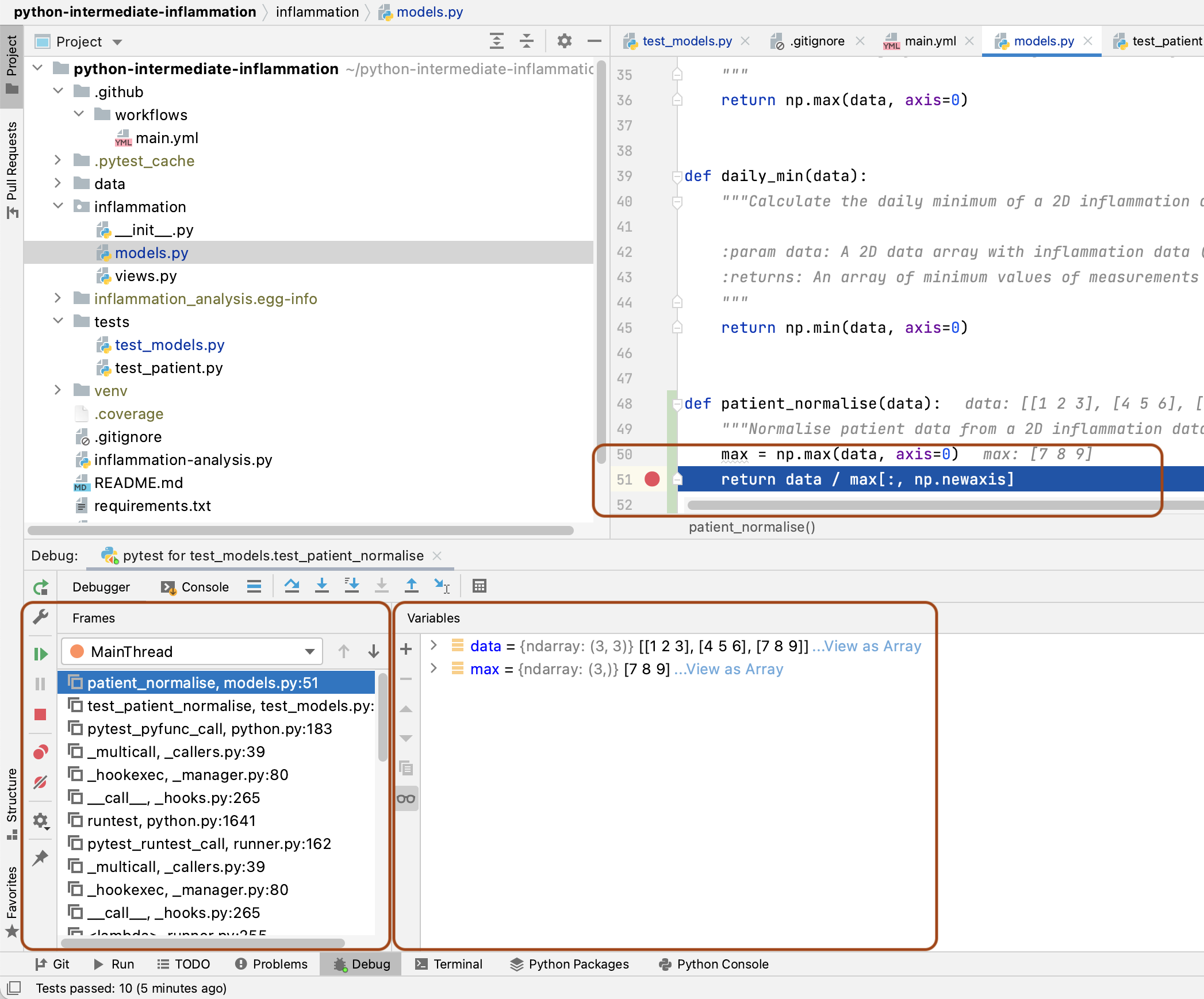

Teaching: 15 min

Exercises: 0 minQuestions

What are we teaching in this course?

What motivated the selection of topics covered in the course?

Objectives

Setting the scene and expectations

Making sure everyone has all the necessary software installed

Introduction

So, you have gained basic software development skills either by self-learning or attending, e.g., a novice Software Carpentry course. You have been applying those skills for a while by writing code to help with your work and you feel comfortable developing code and troubleshooting problems. However, your software has now reached a point where there’s too much code to be kept in one script. Perhaps it’s involving more researchers (developers) and users, and more collaborative development effort is needed to add new functionality while ensuring previous development efforts remain functional and maintainable.

This course provides the next step in software development - it teaches some intermediate software engineering skills and best practices to help you restructure existing code and design more robust, reusable and maintainable code, automate the process of testing and verifying software correctness and support collaborations with others in a way that mimics a typical software development process within a team.

The course uses a number of different software development tools and techniques interchangeably as you would in a real life. We had to make some choices about topics and tools to teach here, based on established best practices, ease of tool installation for the audience, length of the course and other considerations. Tools used here are not mandated though: alternatives exist and we point some of them out along the way. Over time, you will develop a preference for certain tools and programming languages based on your personal taste or based on what is commonly used by your group, collaborators or community. However, the topics covered should give you a solid foundation for working on software development in a team and producing high quality software that is easier to develop and sustain in the future by yourself and others. Skills and tools taught here, while Python-specific, are transferable to other similar tools and programming languages.

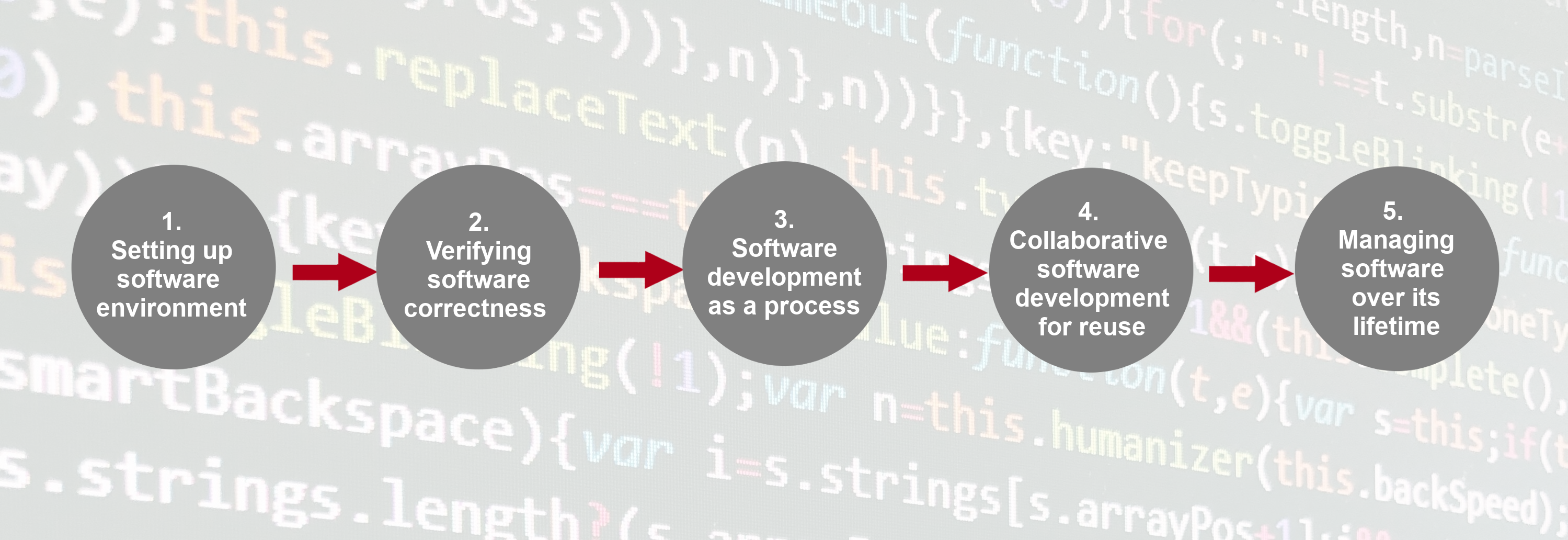

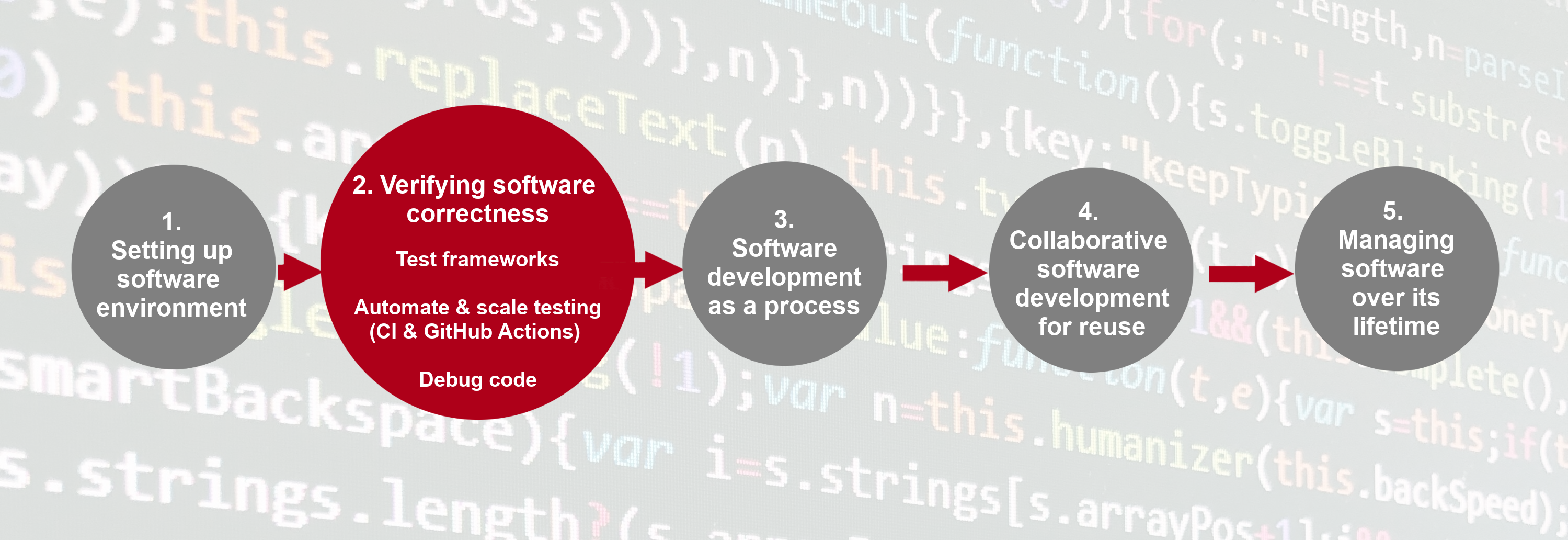

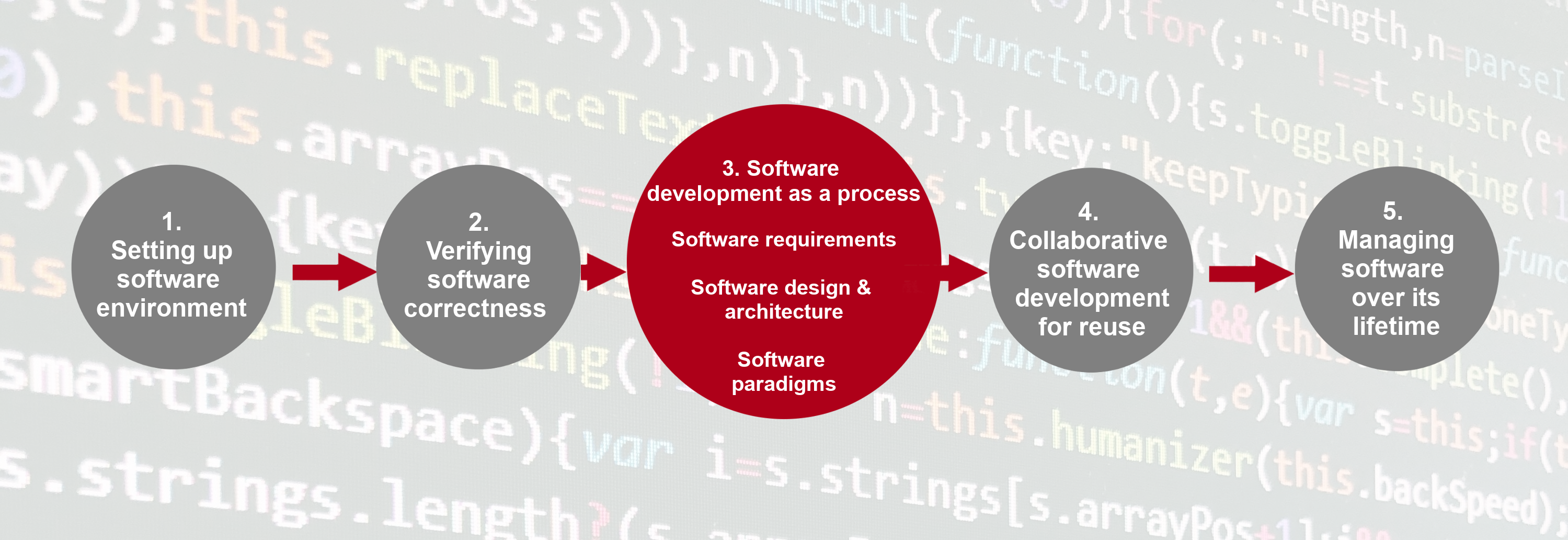

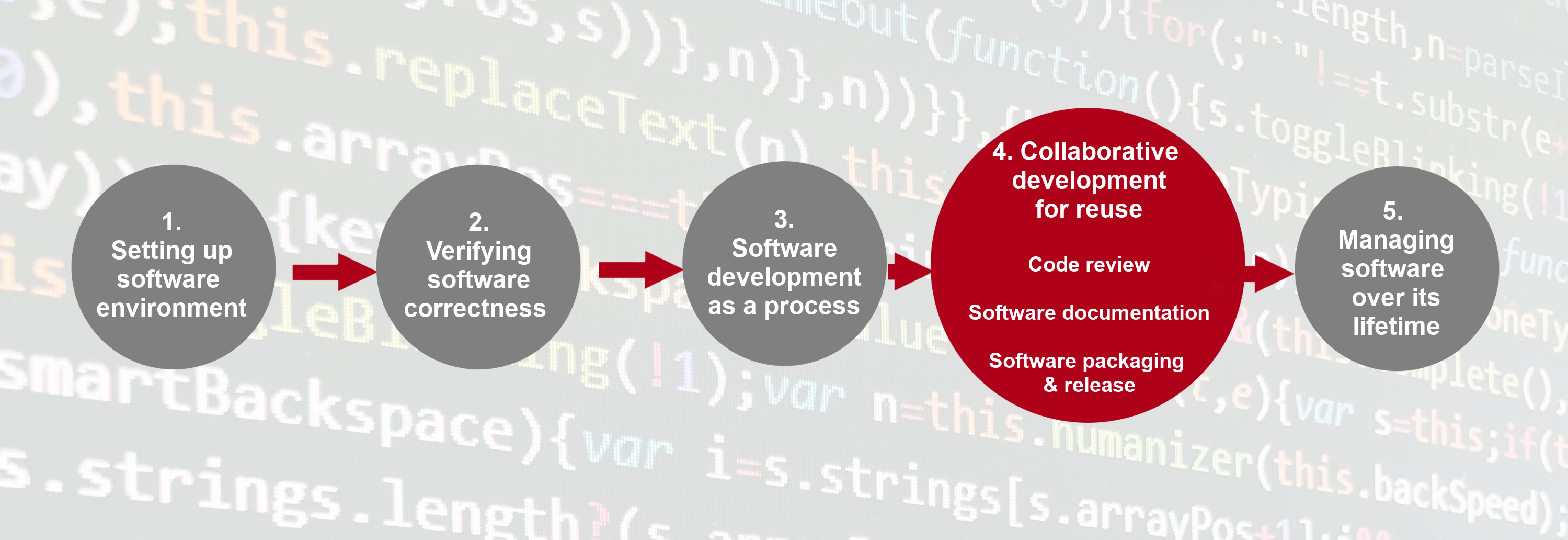

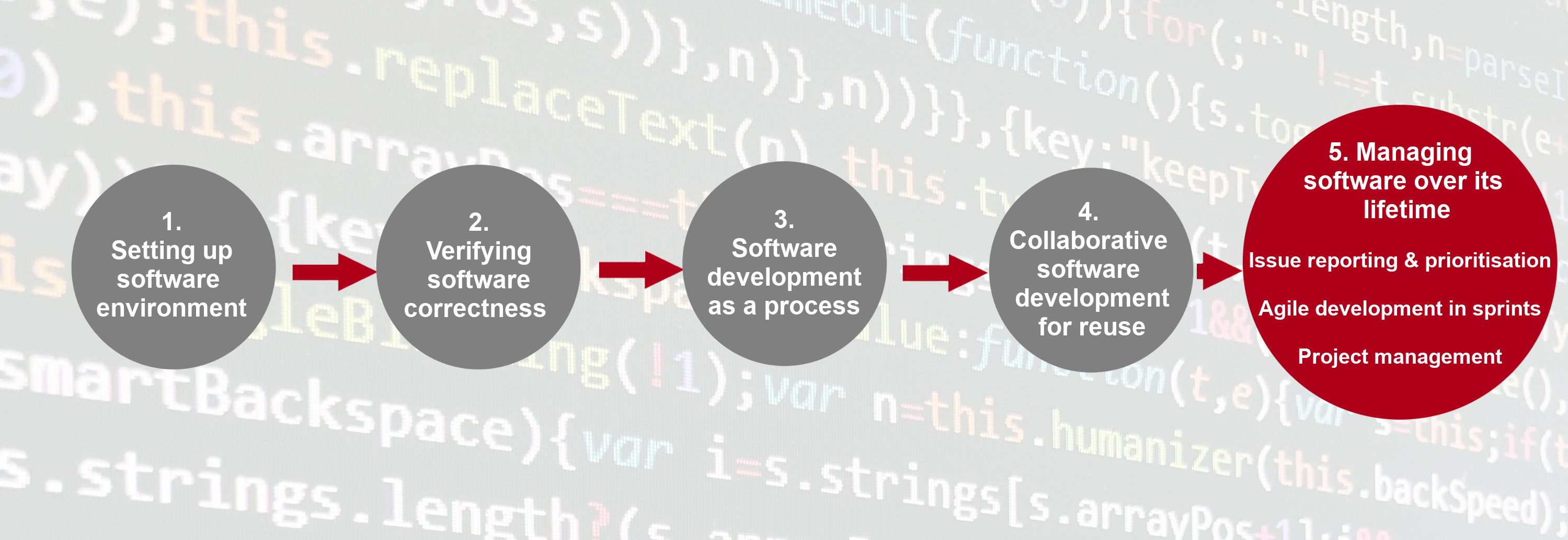

The course is organised into the following sections:

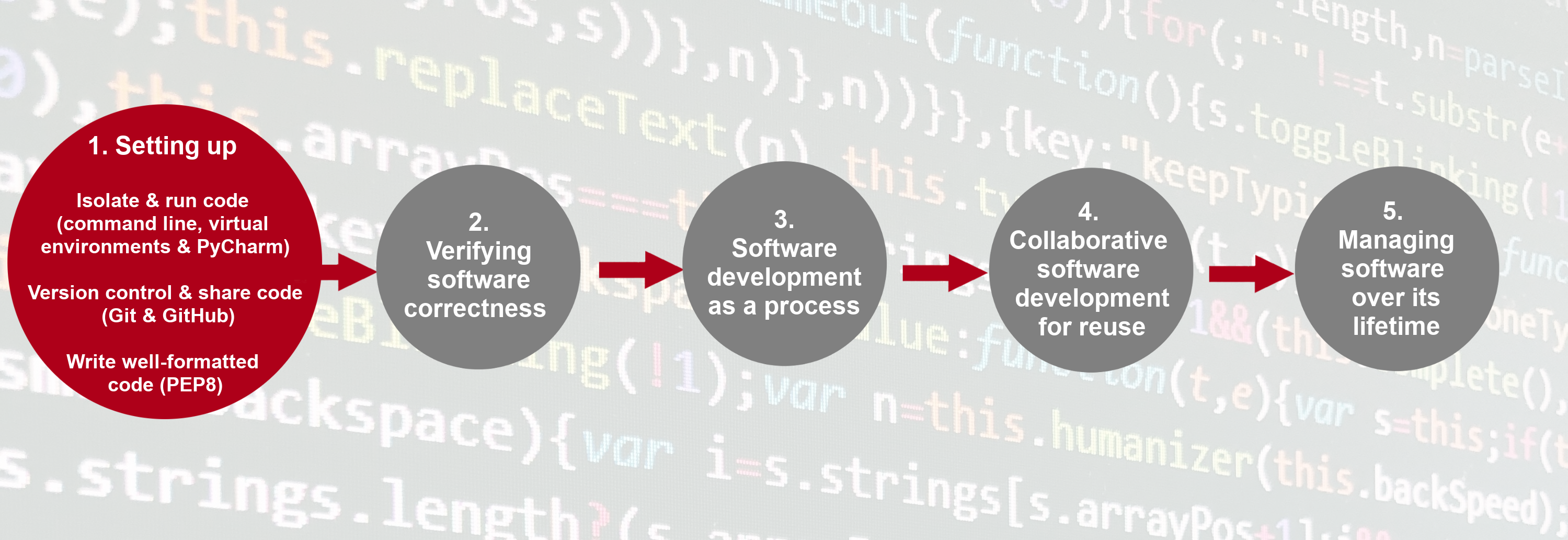

Section 1: Setting up Software Environment

In the first section we are going to set up our working environment and familiarise ourselves with various tools and techniques for software development in a typical collaborative code development cycle:

- Virtual environments for isolating a project from other projects developed on the same machine

- Command line for running code and interacting with the command line tool Git for

- Integrated Development Environment for code development, testing and debugging, Version control and using code branches to develop new features in parallel,

- GitHub (central and remote source code management platform supporting version control with Git) for code backup, sharing and collaborative development, and

- Python code style guidelines to make sure our code is documented, readable and consistently formatted.

Section 2: Verifying Software Correctness at Scale

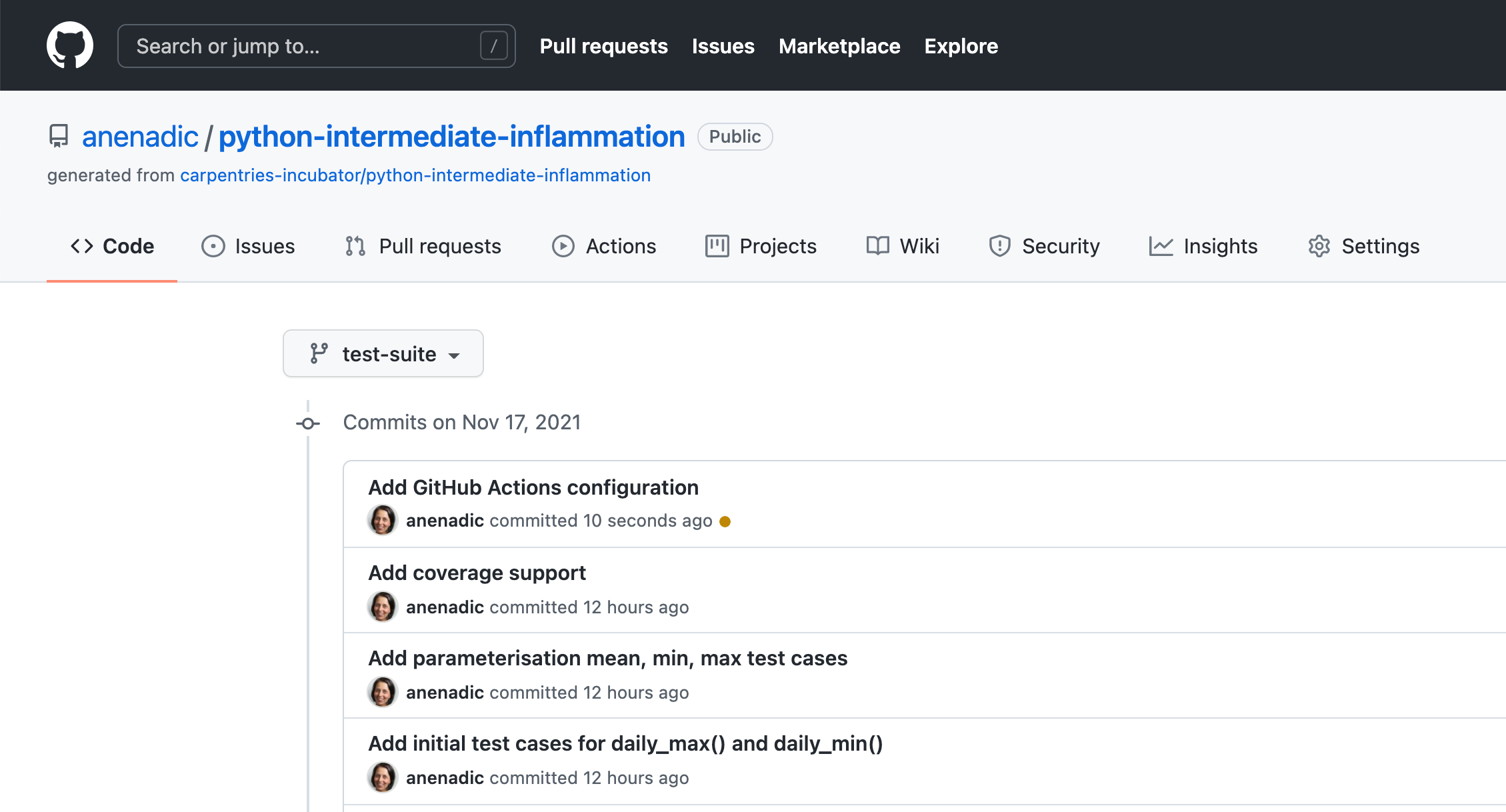

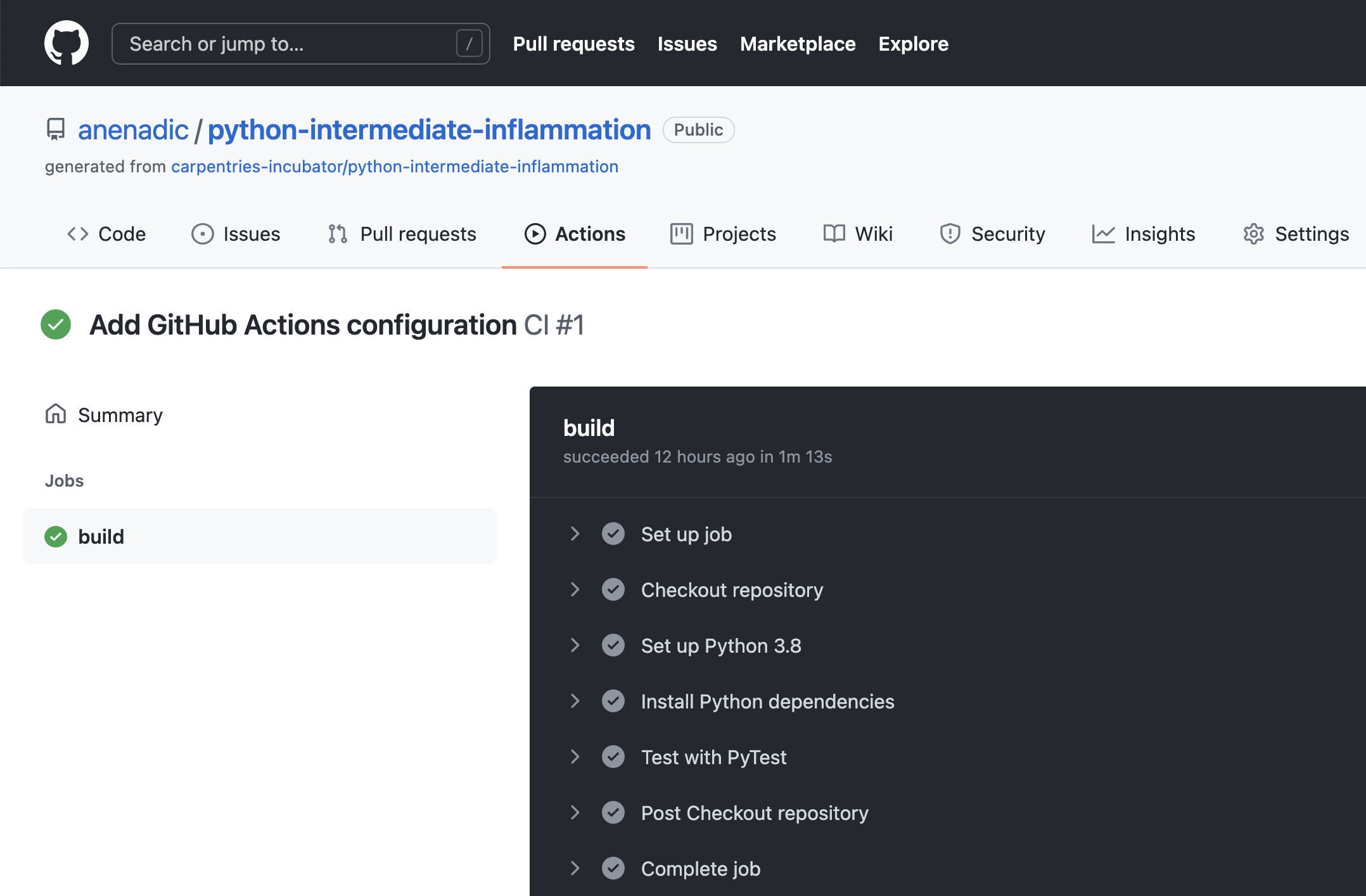

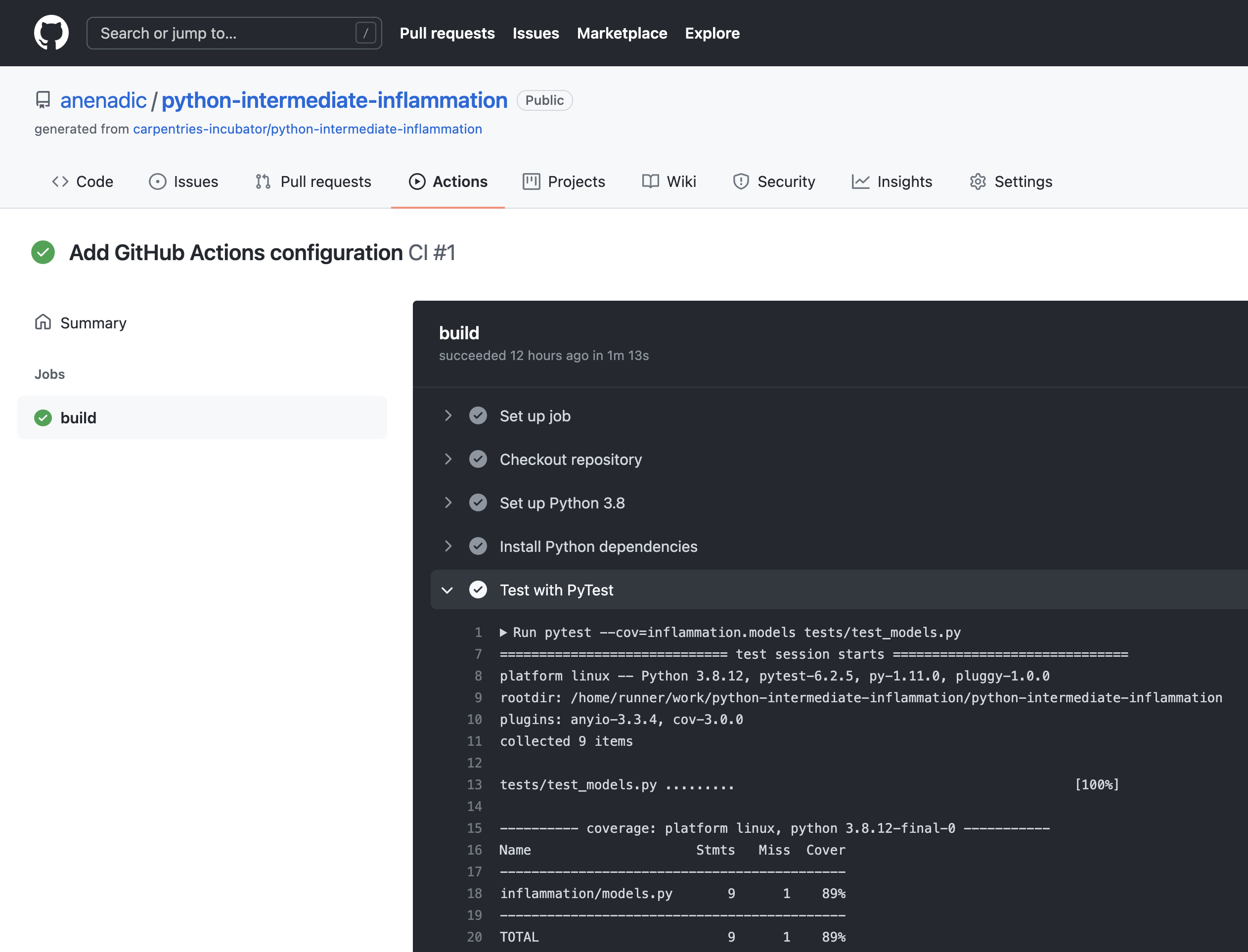

Once we know our way around different code development tools, techniques and conventions, in this section we learn:

- how to set up a test framework and write tests to verify the behaviour of our code is correct, and

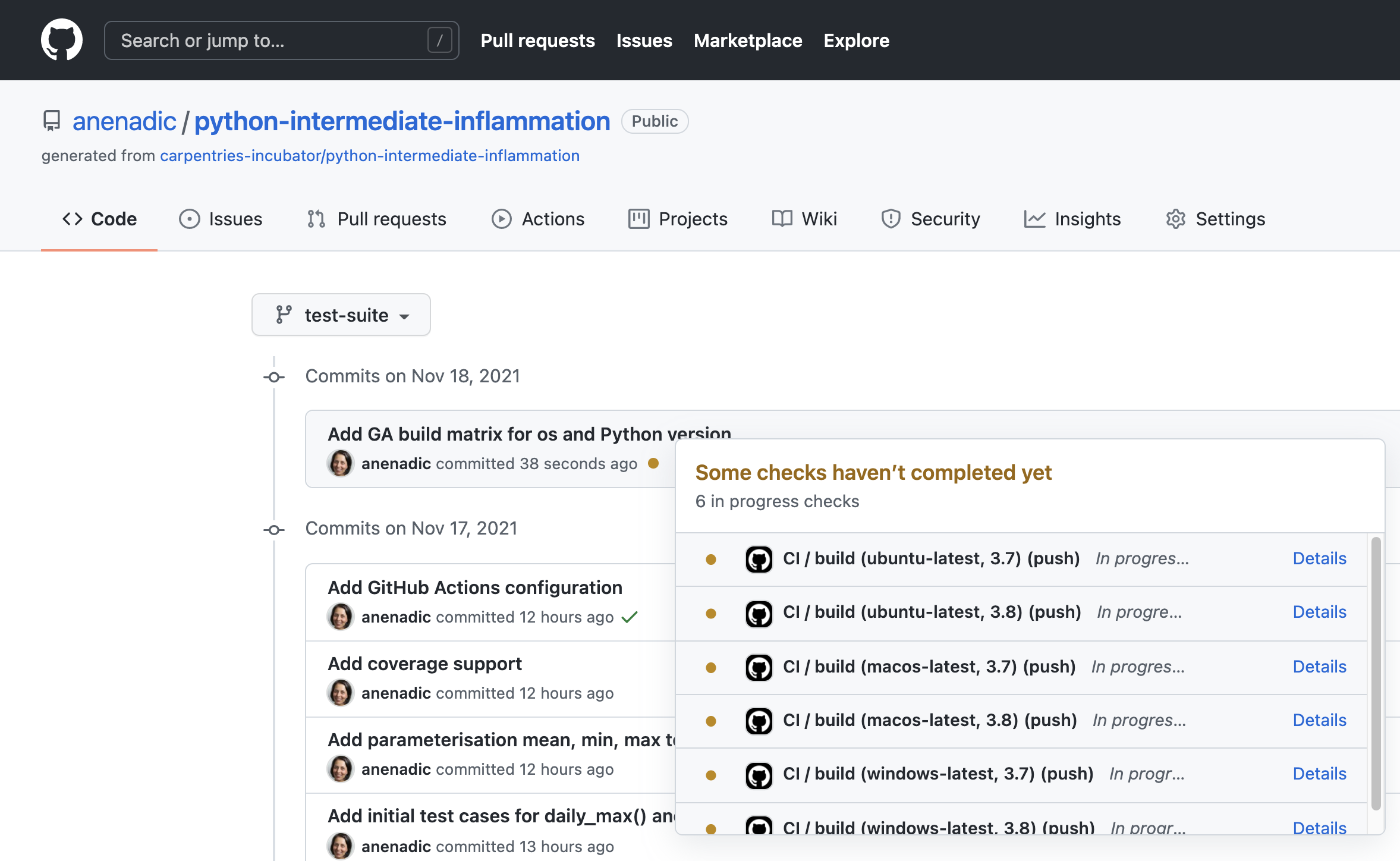

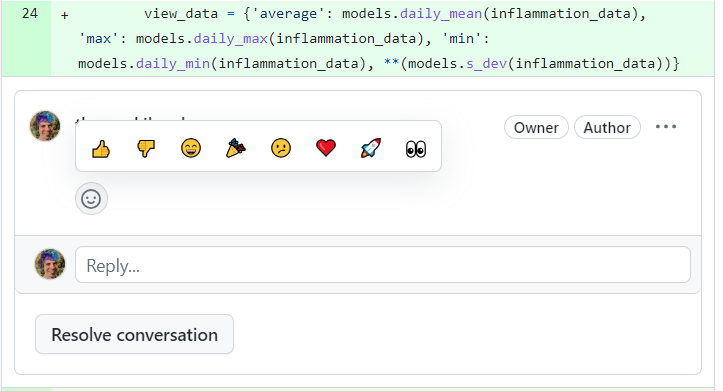

- how to automate and scale testing with Continuous Integration (CI) using GitHub Actions (a CI service available on GitHub).

Section 3: Software Development as a Process

In this section, we step away from writing code for a bit to look at software from a higher level as a process of development and its components:

- different types of software requirements and designing and architecting software to meet them, how these fit within the larger software development process and what we should consider when testing against particular types of requirements.

- different programming and software design paradigms, each representing a slightly different way of thinking about, structuring and implementing the code.

Section 4: Collaborative Software Development for Reuse

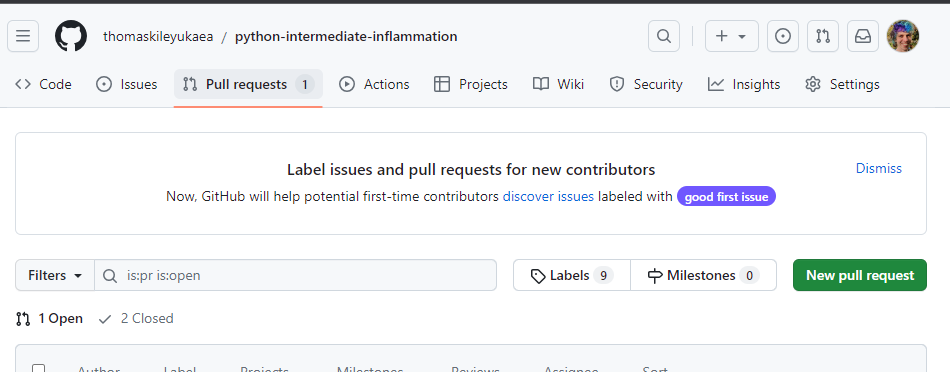

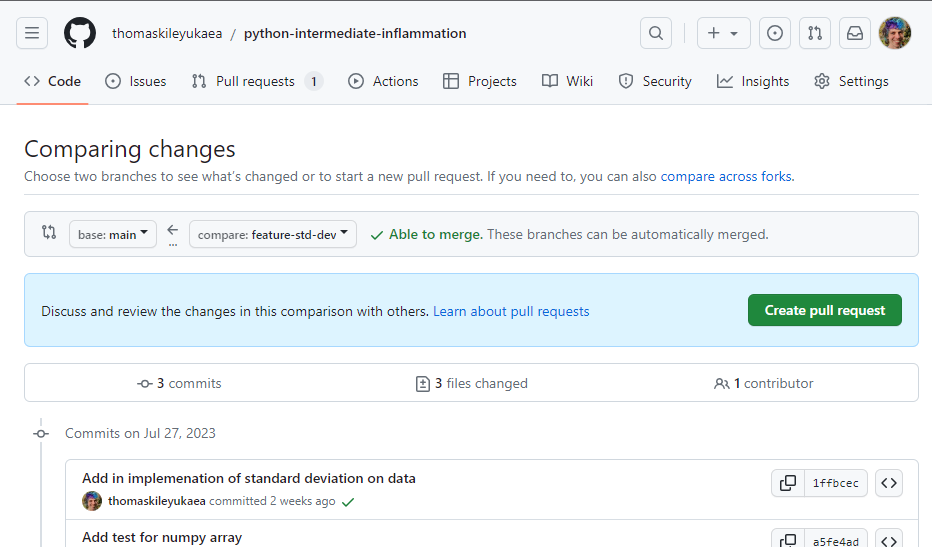

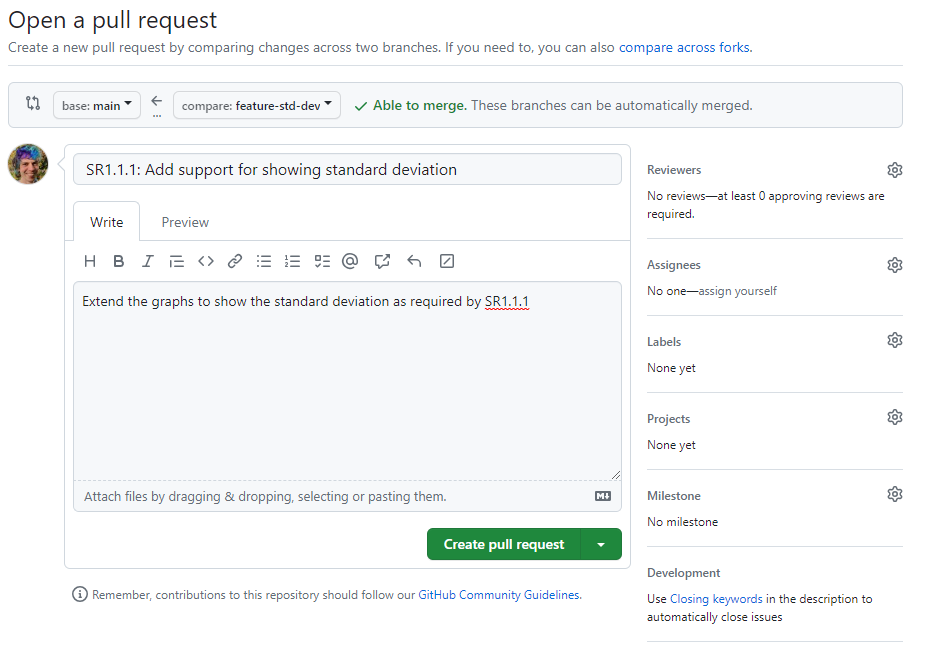

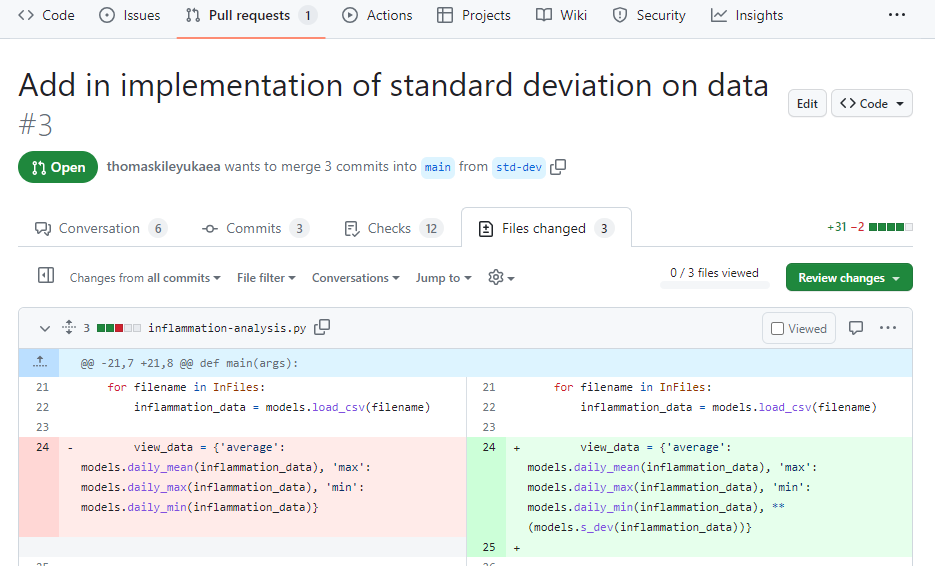

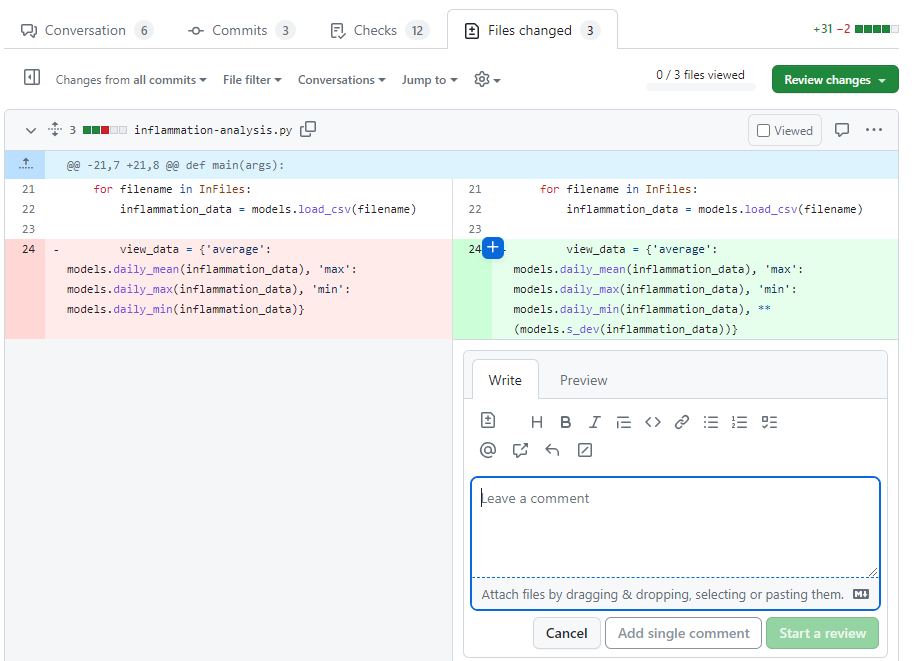

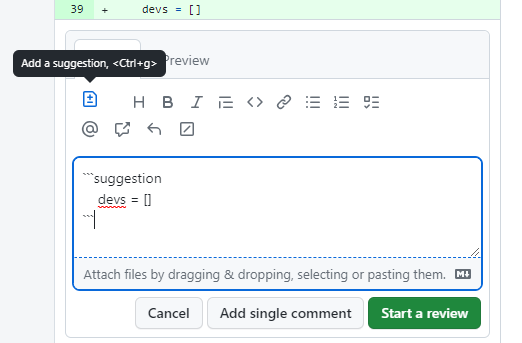

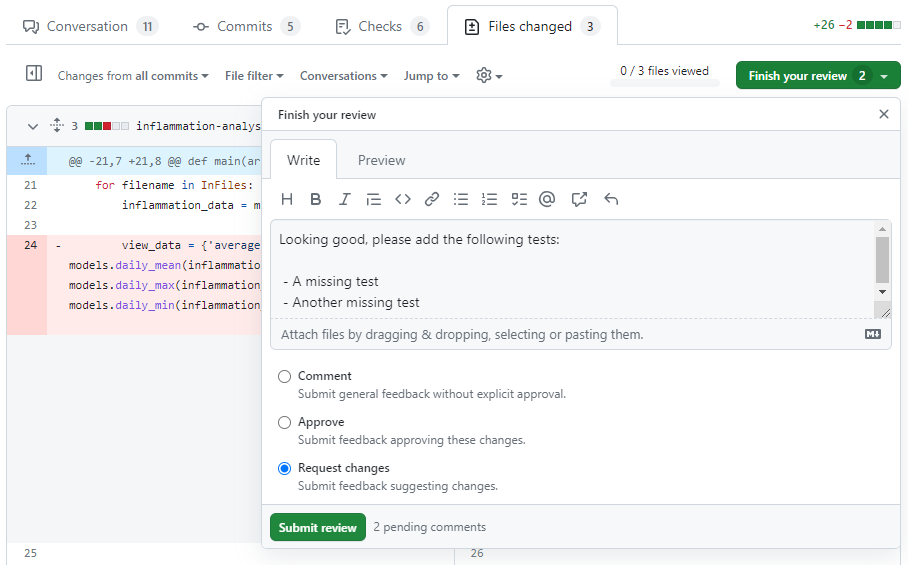

Advancing from developing code as an individual, in this section you will start working with your fellow learners on a group project (as you would do when collaborating on a software project in a team), and learn:

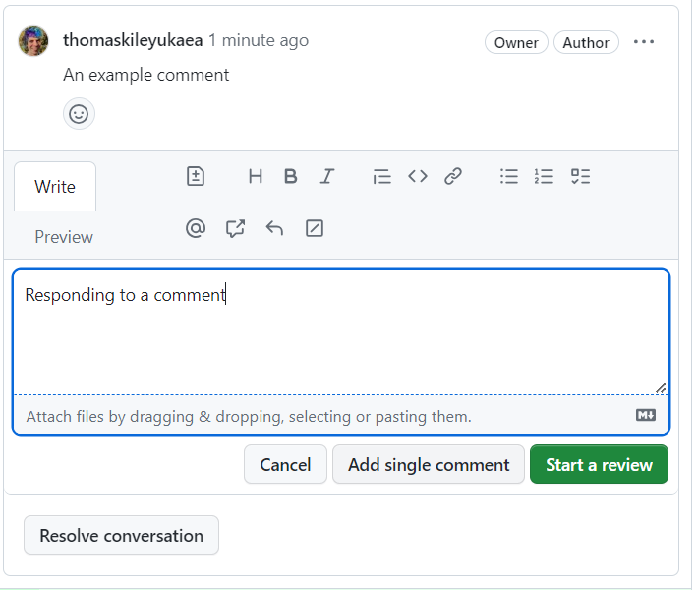

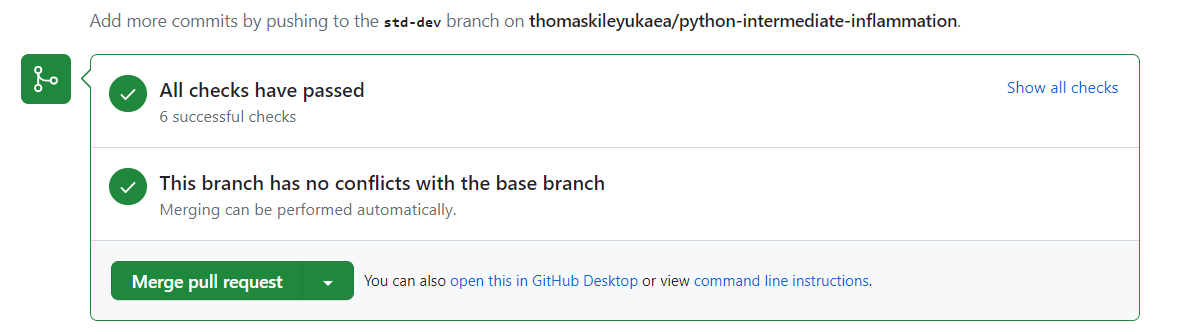

- how code review can help improve team software contributions, identify wider codebase issues, and increase codebase knowledge across a team.

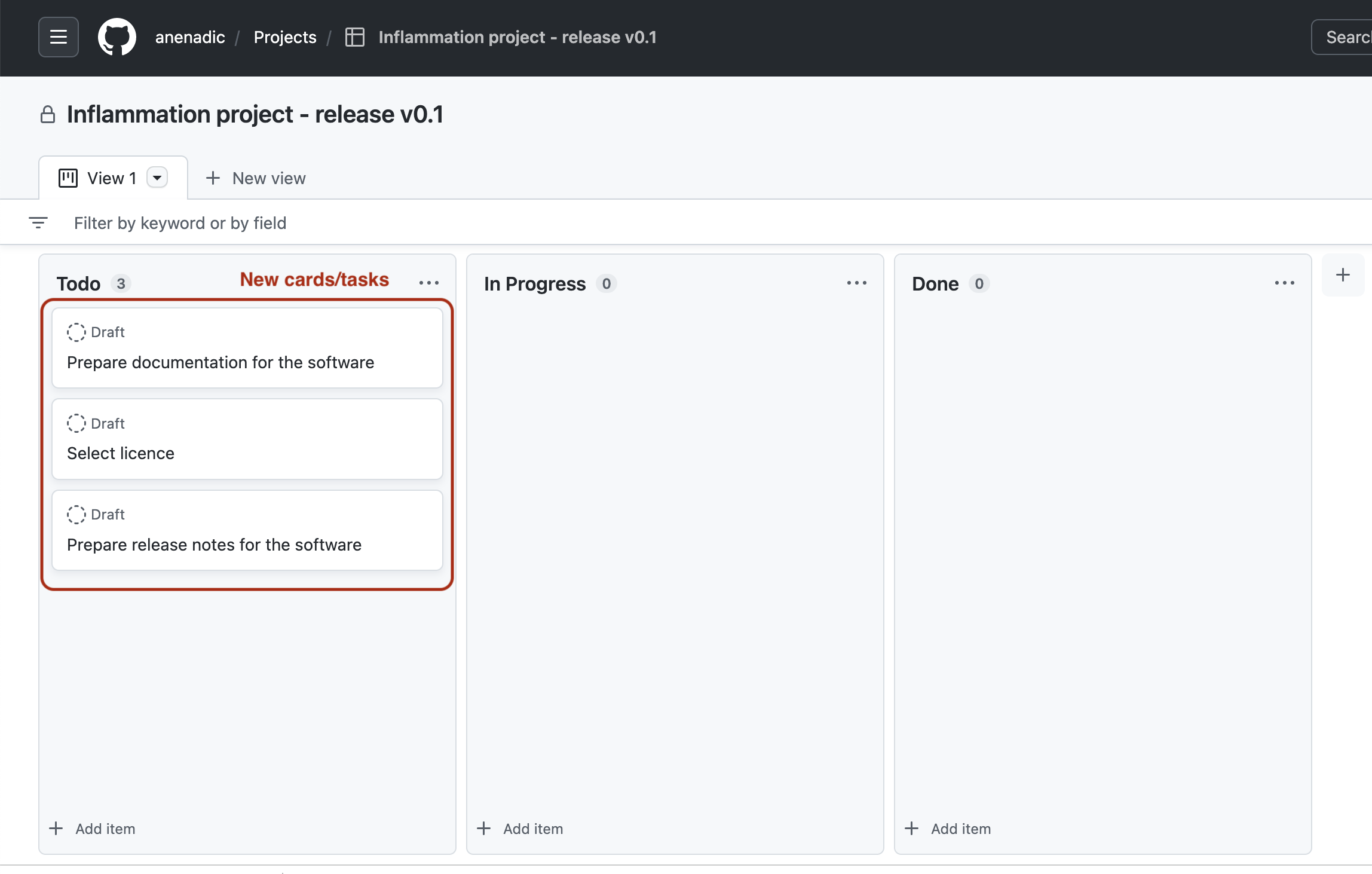

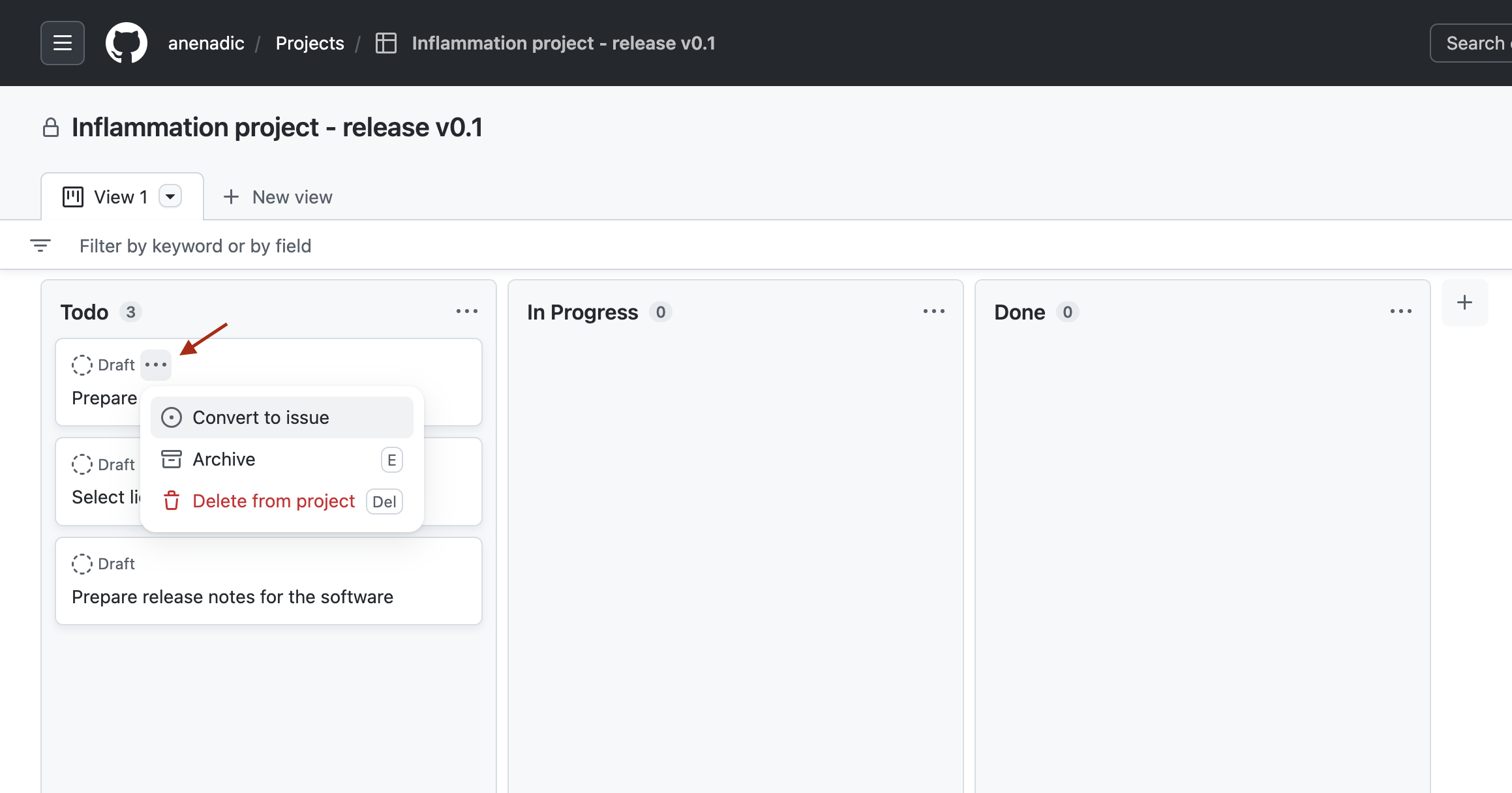

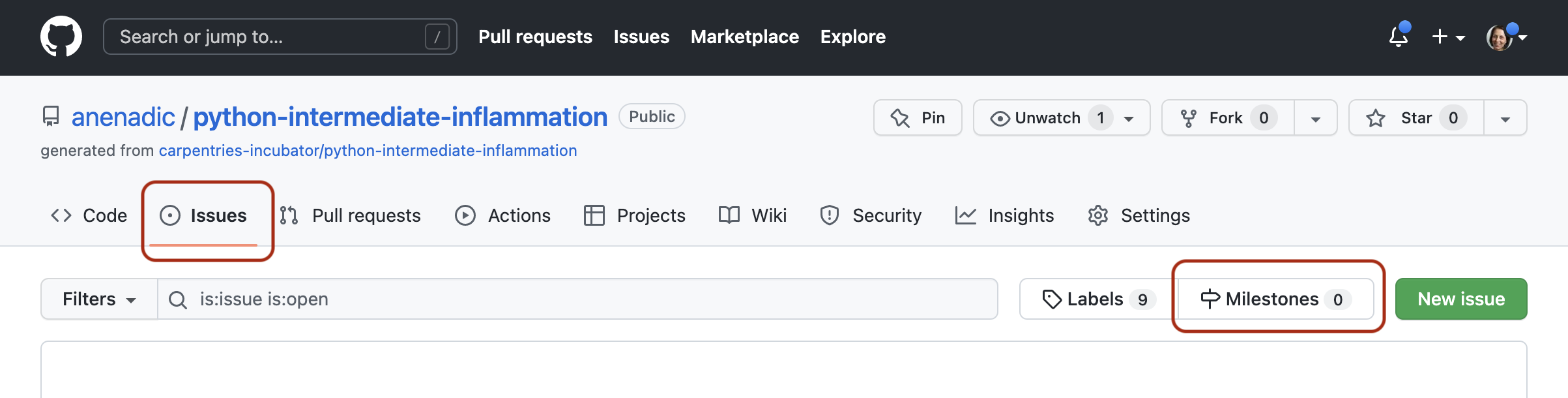

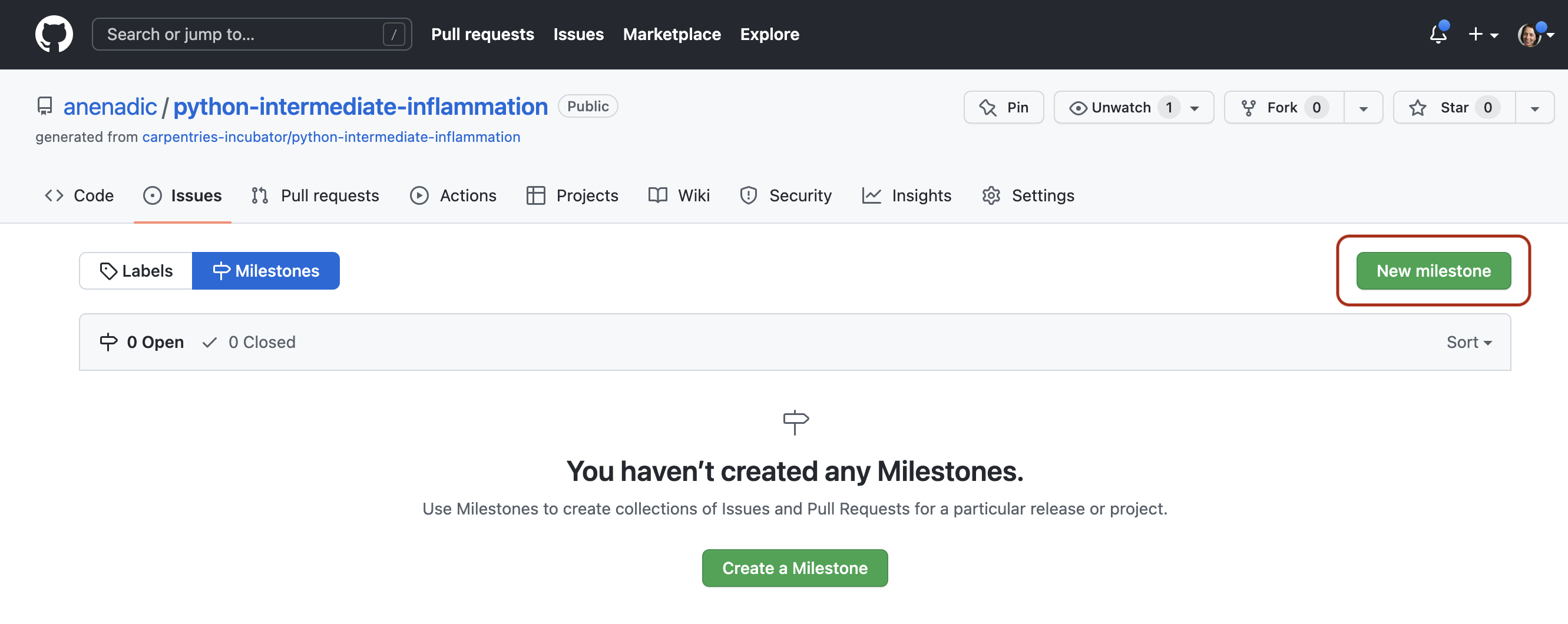

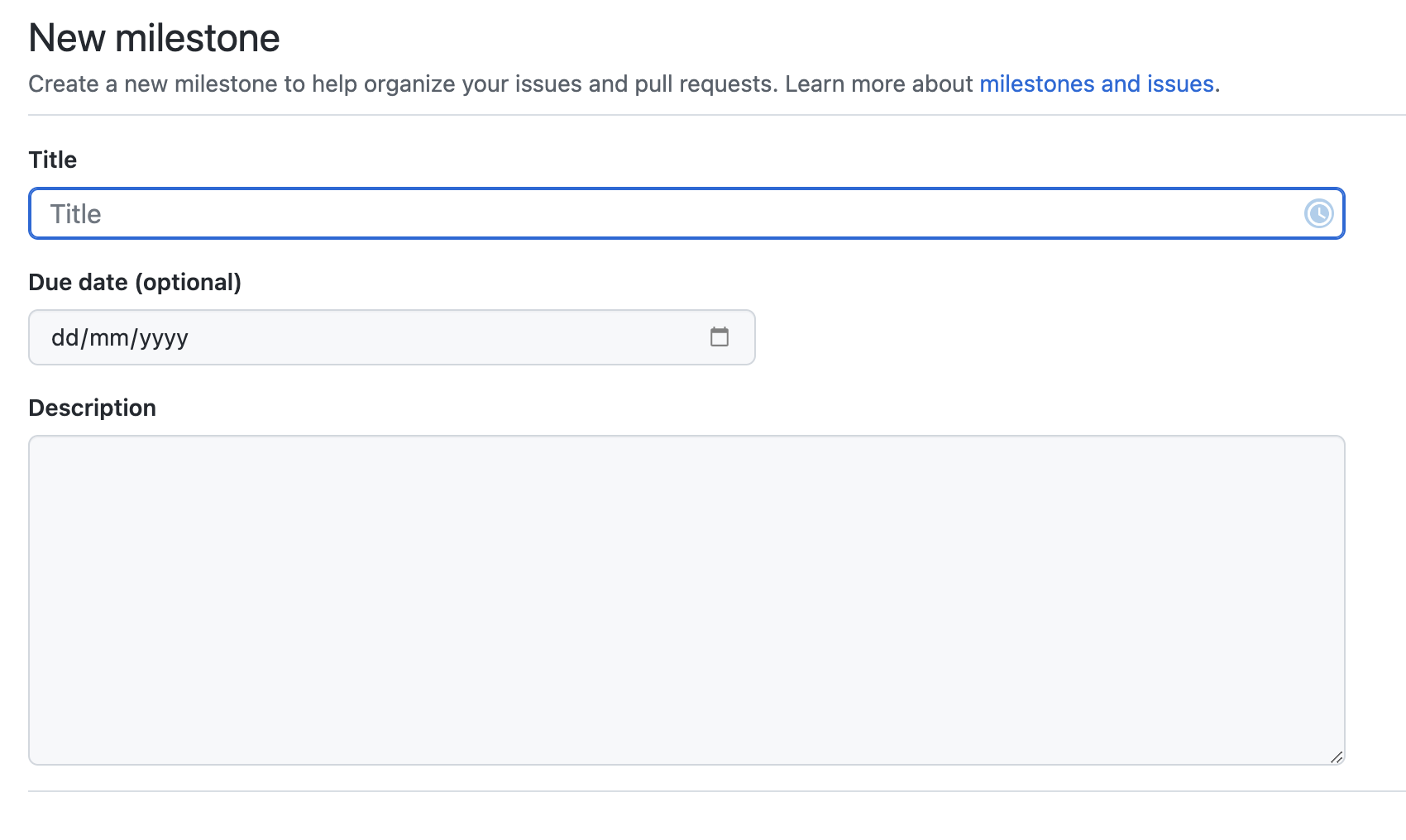

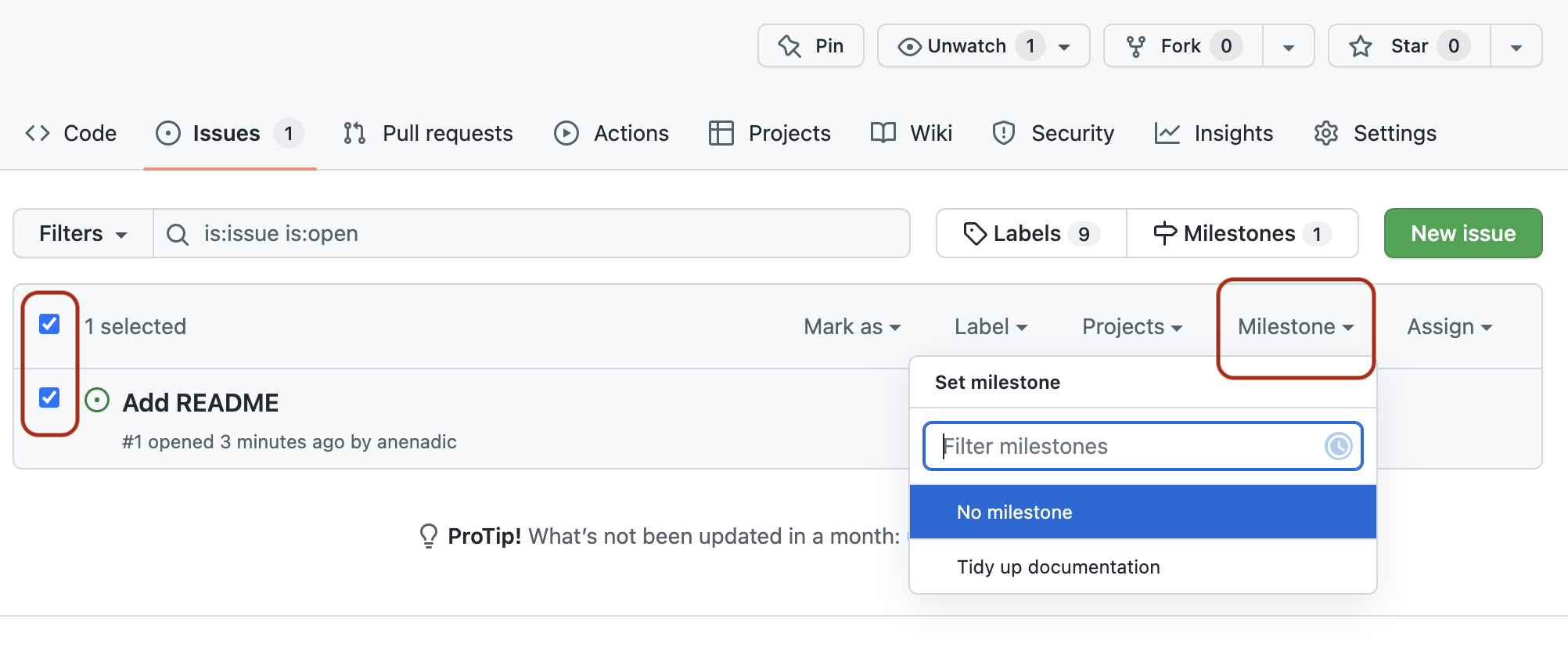

- what we can do to prepare our software for further development and reuse, by adopting best practices in documenting, licencing, tracking issues, supporting your software, and packaging software for release to others.

Section 5: Managing and Improving Software Over Its Lifetime

Finally, we move beyond just software development to managing a collaborative software project and will look into:

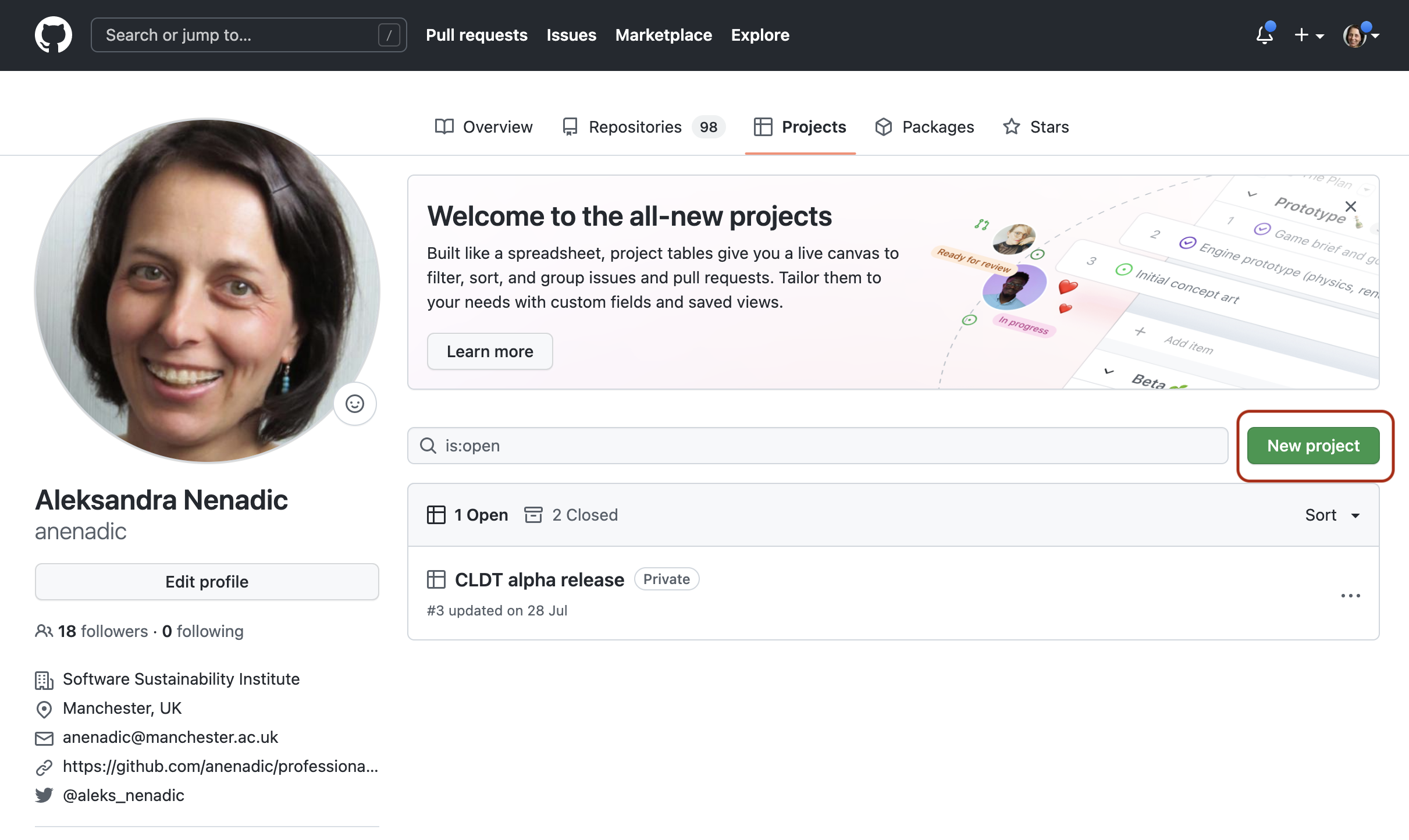

- internal planning and prioritising tasks for future development using agile techniques and effort estimation, management of internal and external communication, and software improvement through feedback.

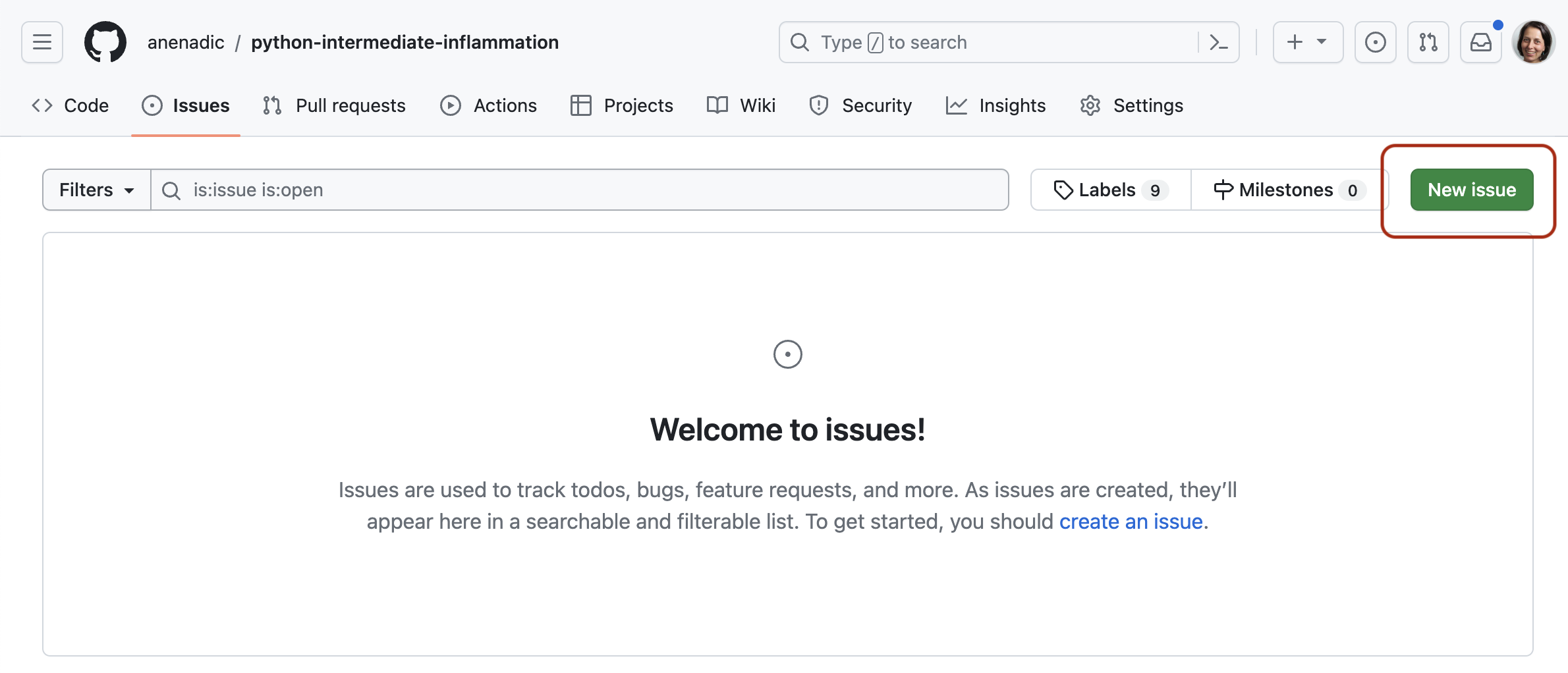

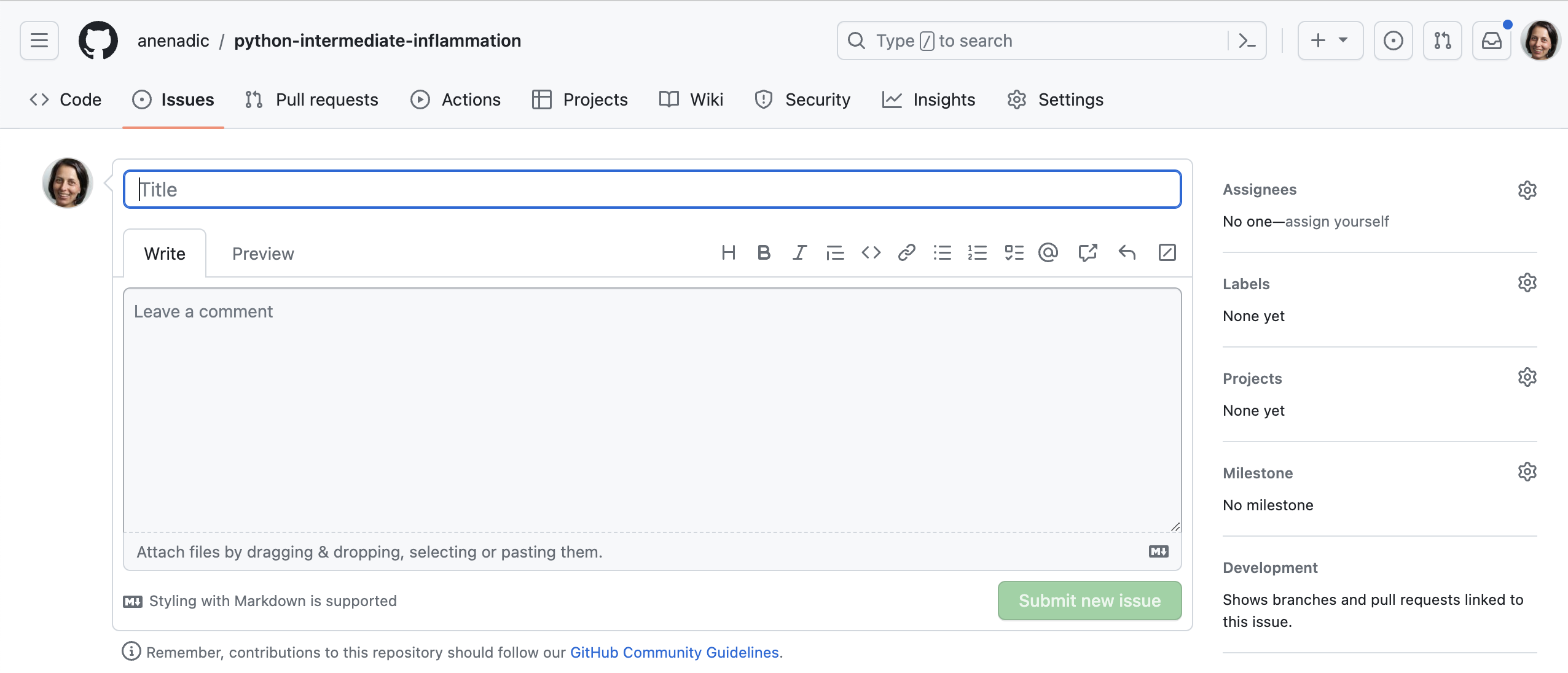

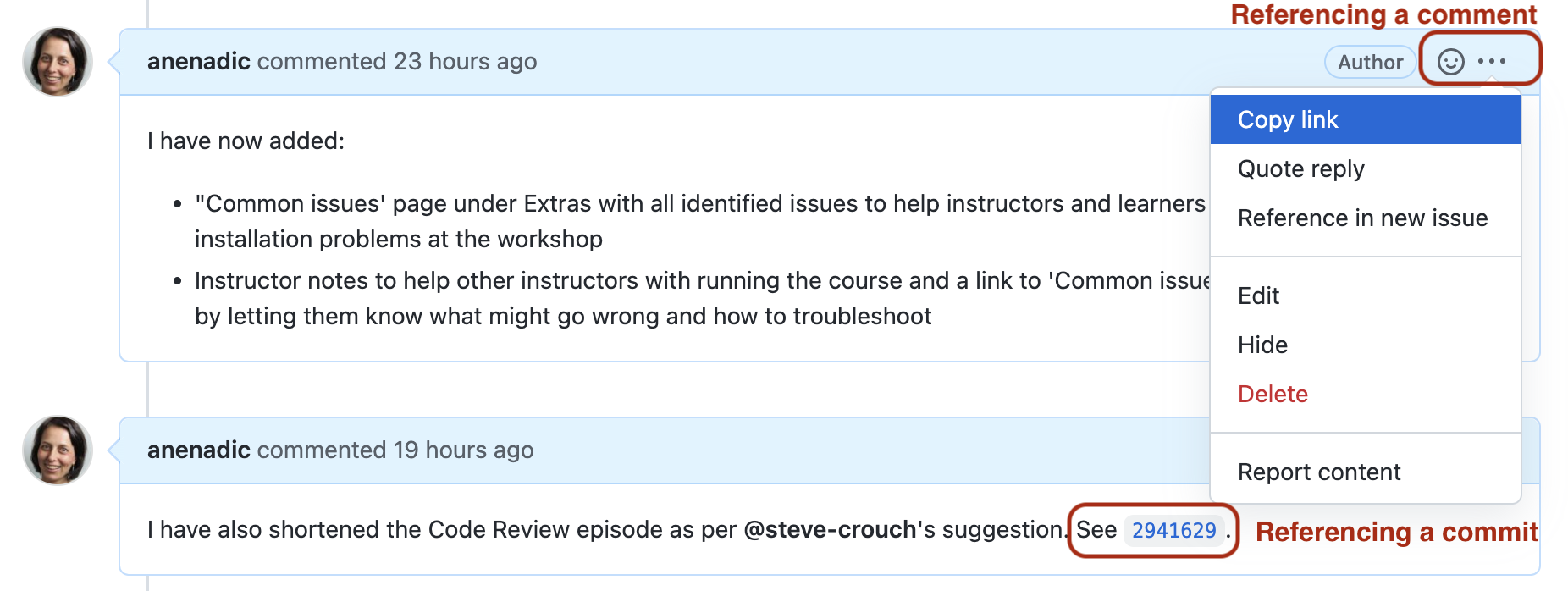

- how to adopt a critical mindset not just towards our own software project but also to assess other people’s software to ensure it is suitable for us to reuse, identify areas for improvement, and how to use GitHub to register good quality issues with a particular code repository.

Before We Start

A few notes before we start.

Prerequisite Knowledge

This is an intermediate-level software development course intended for people who have already been developing code in Python (or other languages) and applying it to their own problems after gaining basic software development skills. So, it is expected for you to have some prerequisite knowledge on the topics covered, as outlined at the beginning of the lesson. Check out this quiz to help you test your prior knowledge and determine if this course is for you.

Setup, Common Issues & Fixes

Have you setup and installed all the tools and accounts required for this course? Check the list of common issues, fixes & tips if you experience any problems running any of the tools you installed - your issue may be solved there.

Compulsory and Optional Exercises

Exercises are a crucial part of this course and the narrative. They are used to reinforce the points taught and give you an opportunity to practice things on your own. Please do not be tempted to skip exercises as that will get your local software project out of sync with the course and break the narrative. Exercises that are clearly marked as “optional” can be skipped without breaking things but we advise you to go through them too, if time allows. All exercises contain solutions but, wherever possible, try and work out a solution on your own.

Outdated Screenshots

Throughout this lesson we will make use and show content from Graphical User Interface (GUI) tools (PyCharm and GitHub). These are evolving tools and platforms, always adding new features and new visual elements. Screenshots in the lesson may then become out-of-sync, refer to or show content that no longer exists or is different to what you see on your machine. If during the lesson you find screenshots that no longer match what you see or have a big discrepancy with what you see, please open an issue describing what you see and how it differs from the lesson content. Feel free to add as many screenshots as necessary to clarify the issue.

Key Points

This lesson focuses on core, intermediate skills covering the whole software development life-cycle that will be of most use to anyone working collaboratively on code.

For code development in teams - you need more than just the right tools and languages. You need a strategy (best practices) for how you’ll use these tools as a team.

The lesson follows on from the novice Software Carpentry lesson, but this is not a prerequisite for attending as long as you have some basic Python, command line and Git skills and you have been using them for a while to write code to help with your work.

Section 1: Setting Up Environment For Collaborative Code Development

Overview

Teaching: 10 min

Exercises: 0 minQuestions

What tools are needed to collaborate on code development effectively?

Objectives

Provide an overview of all the different tools that will be used in this course.

The first section of the course is dedicated to setting up your environment for collaborative software development and introducing the project that we will be working on throughout the course. In order to build working (research) software efficiently and to do it in collaboration with others rather than in isolation, you will have to get comfortable with using a number of different tools interchangeably as they’ll make your life a lot easier. There are many options when it comes to deciding which software development tools to use for your daily tasks - we will use a few of them in this course that we believe make a difference. There are sometimes multiple tools for the job - we select one to use but mention alternatives too. As you get more comfortable with different tools and their alternatives, you will select the one that is right for you based on your personal preferences or based on what your collaborators are using.

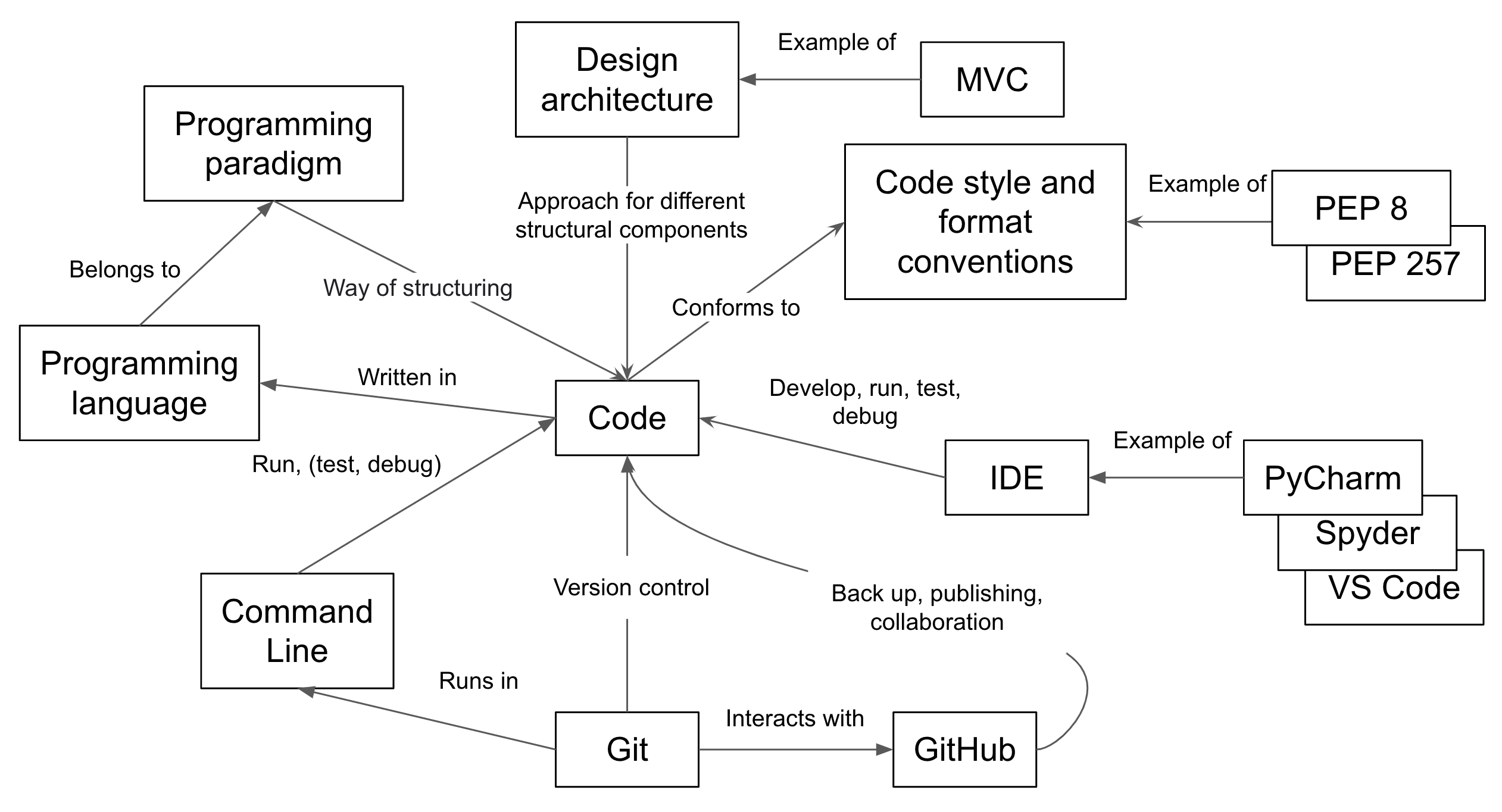

Here is an overview of the tools we will be using.

Setup, Common Issues & Fixes

Have you setup and installed all the tools and accounts required for this course? Check the list of common issues, fixes & tips if you experience any problems running any of the tools you installed - your issue may be solved there.

Command Line & Python Virtual Development Environment

We will use the command line

(also known as the command line shell/prompt/console)

to run our Python code

and interact with the version control tool Git and software sharing platform GitHub.

We will also use command line tools

venv

and pip

to set up a Python virtual development environment

and isolate our software project from other Python projects we may work on.

Note: some Windows users experience the issue where Python hangs from Git Bash

(i.e. typing python causes it to just hang with no error message or output) -

see the solution to this issue.

Integrated Development Environment (IDE)

An IDE integrates a number of tools that we need to develop a software project that goes beyond a single script - including a smart code editor, a code compiler/interpreter, a debugger, etc. It will help you write well-formatted and readable code that conforms to code style guides (such as PEP8 for Python) more efficiently by giving relevant and intelligent suggestions for code completion and refactoring. IDEs often integrate command line console and version control tools - we teach them separately in this course as this knowledge can be ported to other programming languages and command line tools you may use in the future (but is applicable to the integrated versions too).

We will use PyCharm in this course - a free, open source IDE.

Git & GitHub

Git is a free and open source distributed version control system designed to save every change made to a (software) project, allowing others to collaborate and contribute. In this course, we use Git to version control our code in conjunction with GitHub for code backup and sharing. GitHub is one of the leading integrated products and social platforms for modern software development, monitoring and management - it will help us with version control, issue management, code review, code testing/Continuous Integration, and collaborative development. An important concept in collaborative development is version control workflows (i.e. how to effectively use version control on a project with others).

Python Coding Style

Most programming languages will have associated standards and conventions for how the source code should be formatted and styled. Although this sounds pedantic, it is important for maintaining the consistency and readability of code across a project. Therefore, one should be aware of these guidelines and adhere to whatever the project you are working on has specified. In Python, we will be looking at a convention called PEP8.

Let’s get started with setting up our software development environment!

Key Points

In order to develop (write, test, debug, backup) code efficiently, you need to use a number of different tools.

When there is a choice of tools for a task you will have to decide which tool is right for you, which may be a matter of personal preference or what the team or community you belong to is using.

Introduction to Our Software Project

Overview

Teaching: 20 min

Exercises: 10 minQuestions

What is the design architecture of our example software project?

Why is splitting code into smaller functional units (modules) good when designing software?

Objectives

Use Git to obtain a working copy of our software project from GitHub.

Inspect the structure and architecture of our software project.

Understand Model-View-Controller (MVC) architecture in software design and its use in our project.

Patient Inflammation Study Project

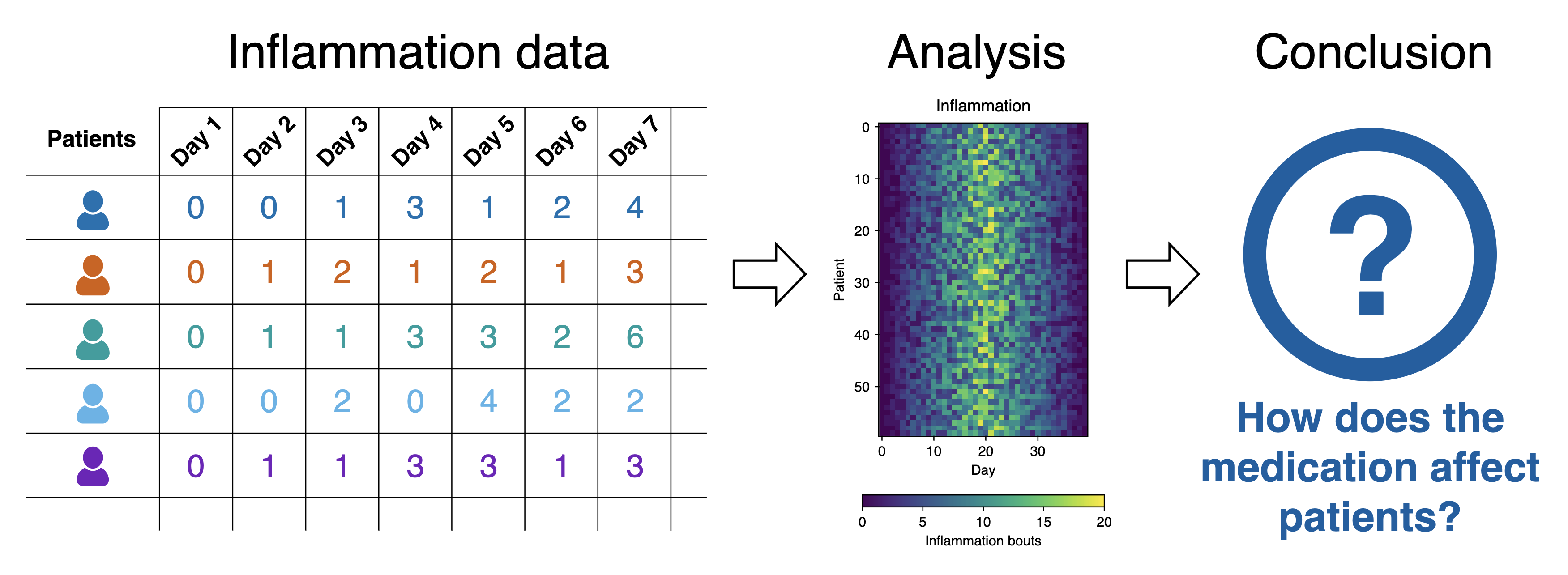

So, you have joined a software development team that has been working on the patient inflammation study project developed in Python and stored on GitHub. The project analyses the data to study the effect of a new treatment for arthritis by analysing the inflammation levels in patients who have been given this treatment. It reuses the inflammation datasets from the Software Carpentry Python novice lesson.

Inflammation study pipeline from the Software Carpentry Python novice lesson

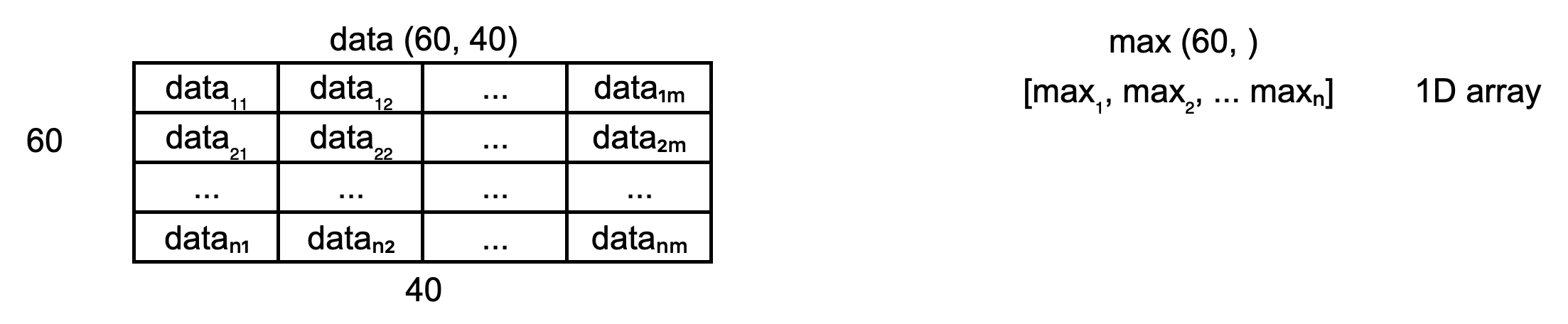

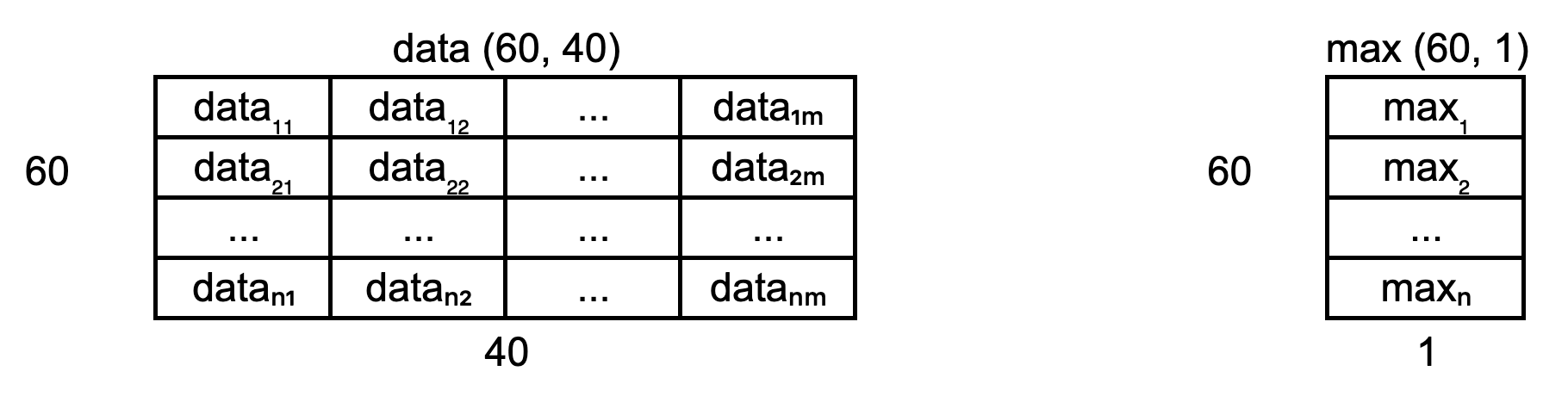

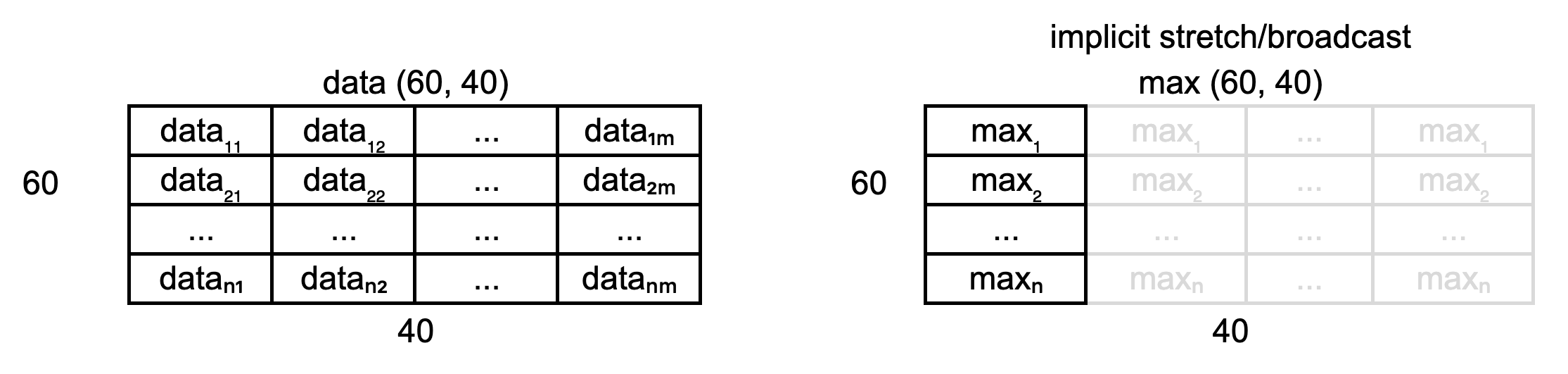

What Does Patient Inflammation Data Contain?

Each dataset records inflammation measurements from a separate clinical trial of the drug, and each dataset contains information for 60 patients, who had their inflammation levels recorded for 40 days whilst participating in the trial (a snapshot of one of the data files is shown in diagram above).

Each of the data files uses the popular comma-separated (CSV) format to represent the data, where:

- Each row holds inflammation measurements for a single patient,

- Each column represents a successive day in the trial,

- Each cell represents an inflammation reading on a given day for a patient (in some arbitrary units of inflammation measurement).

The project is not finished and contains some errors. You will be working on your own and in collaboration with others to fix and build on top of the existing code during the course.

Downloading Our Software Project

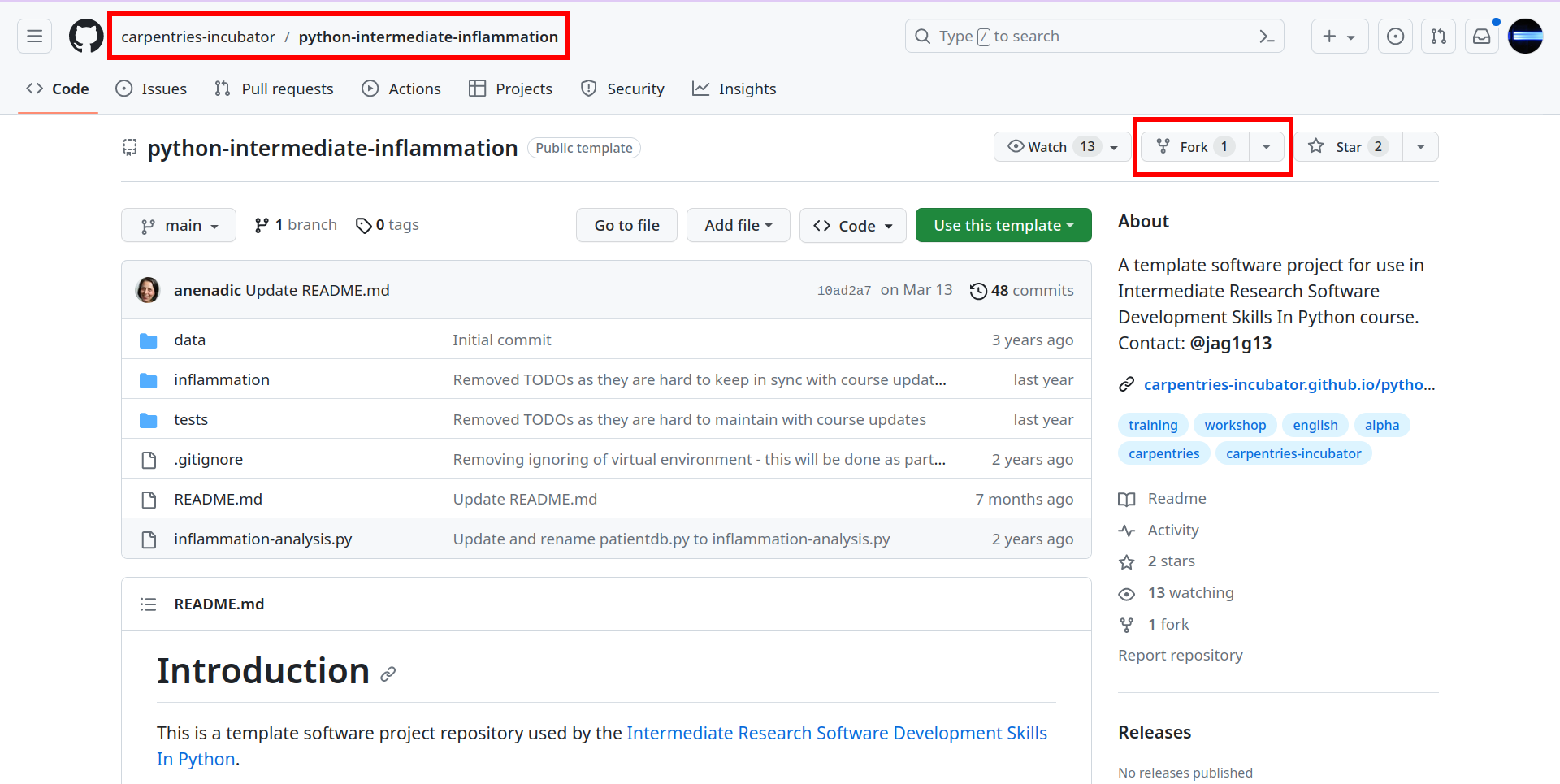

To start working on the project, you will first create a fork of the software project repository from GitHub within your own GitHub account and then obtain a local copy of that project (from your GitHub) on your machine.

- Make sure you have a GitHub account and that you have set up your SSH key pair for authentication with GitHub, as explained in Setup.

- Log into your GitHub account.

-

Go to the software project repository in GitHub.

- Click the

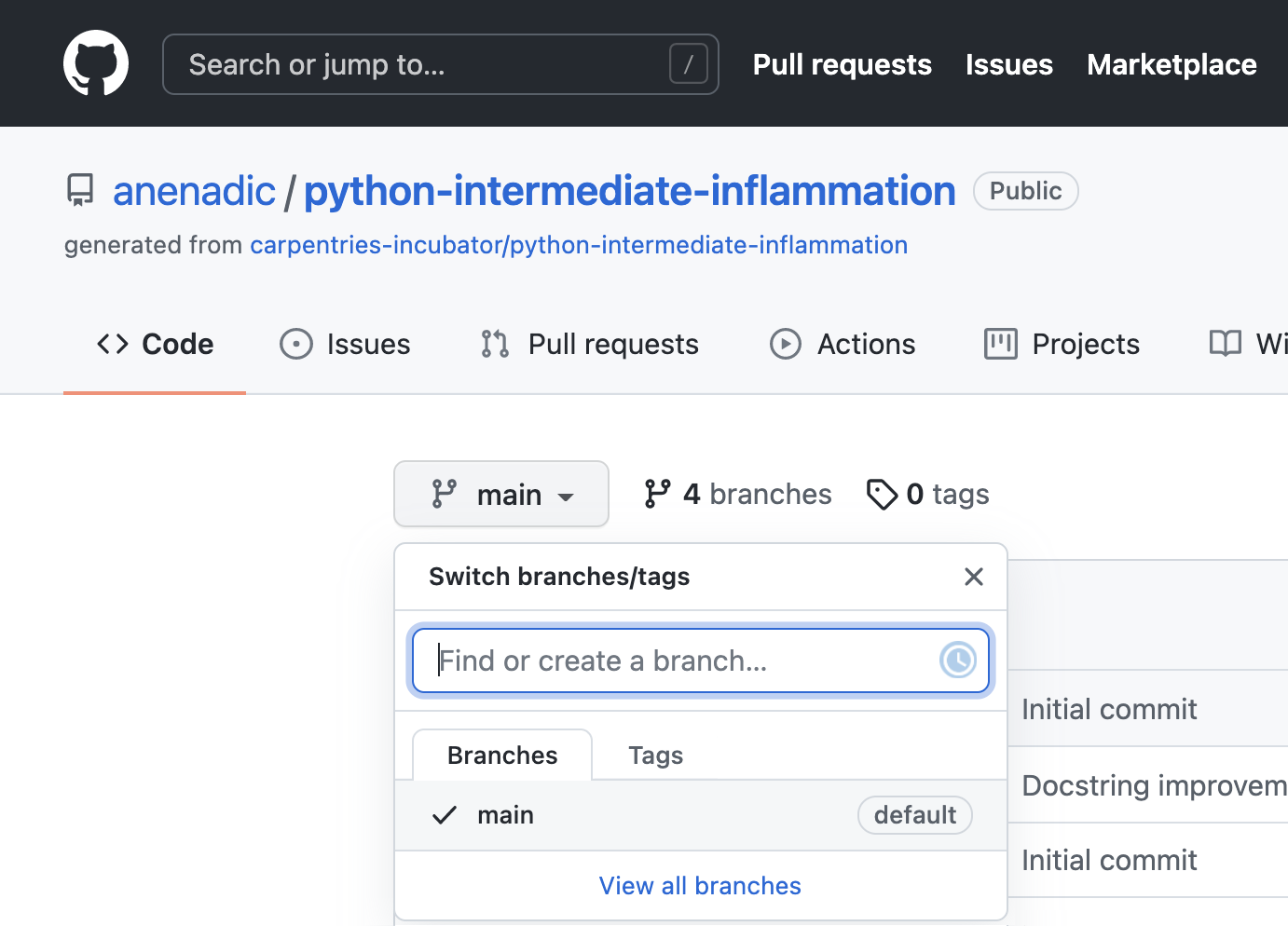

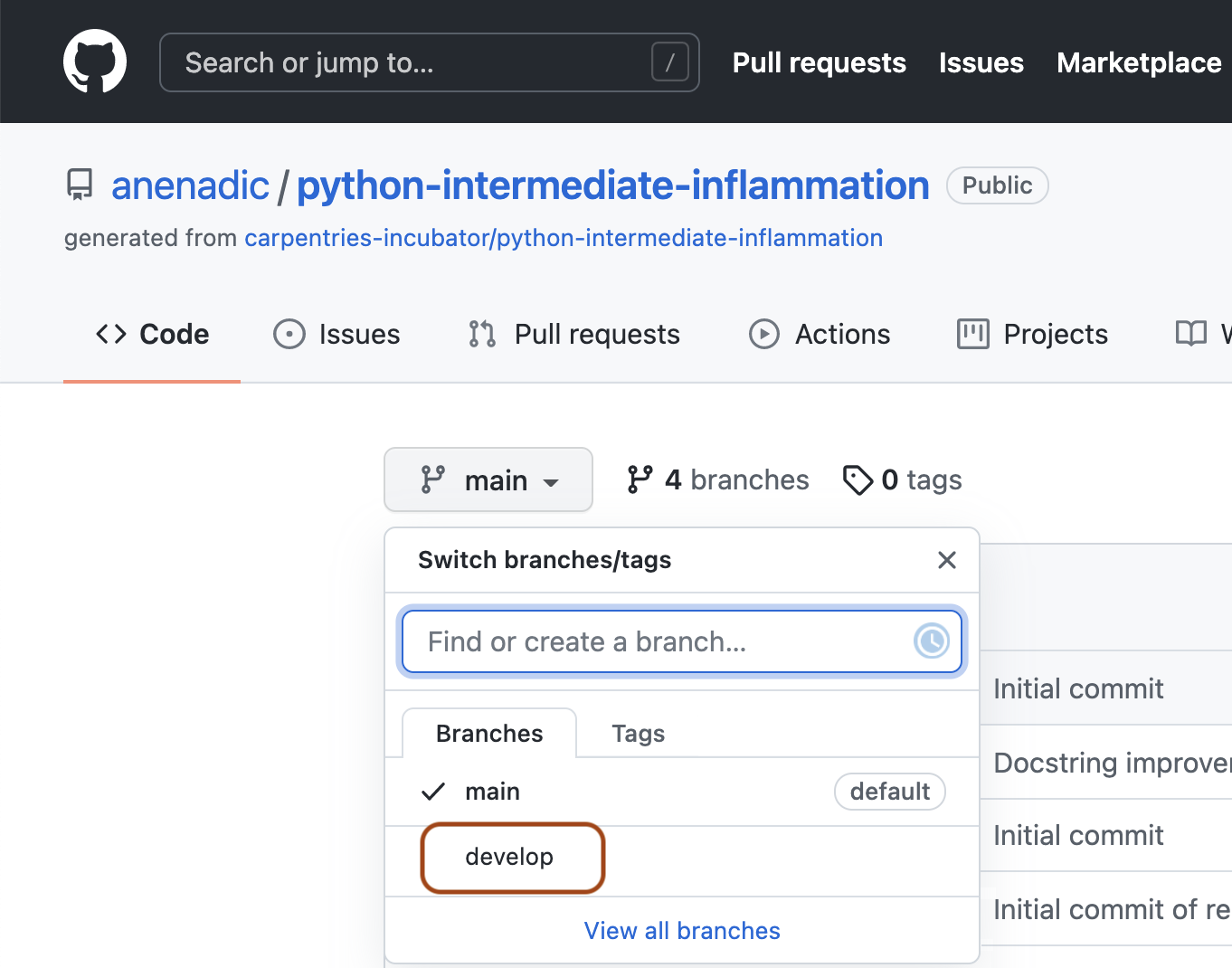

Forkbutton towards the top right of the repository’s GitHub page to create a fork of the repository under your GitHub account (you will need to be signed into GitHub for theForkbutton to work). Note that each participant is creating their own fork to work on. Also, we are not copying from a template but creating a fork (remember you can have only one fork but can have multiple copies of a repository in GitHub). -

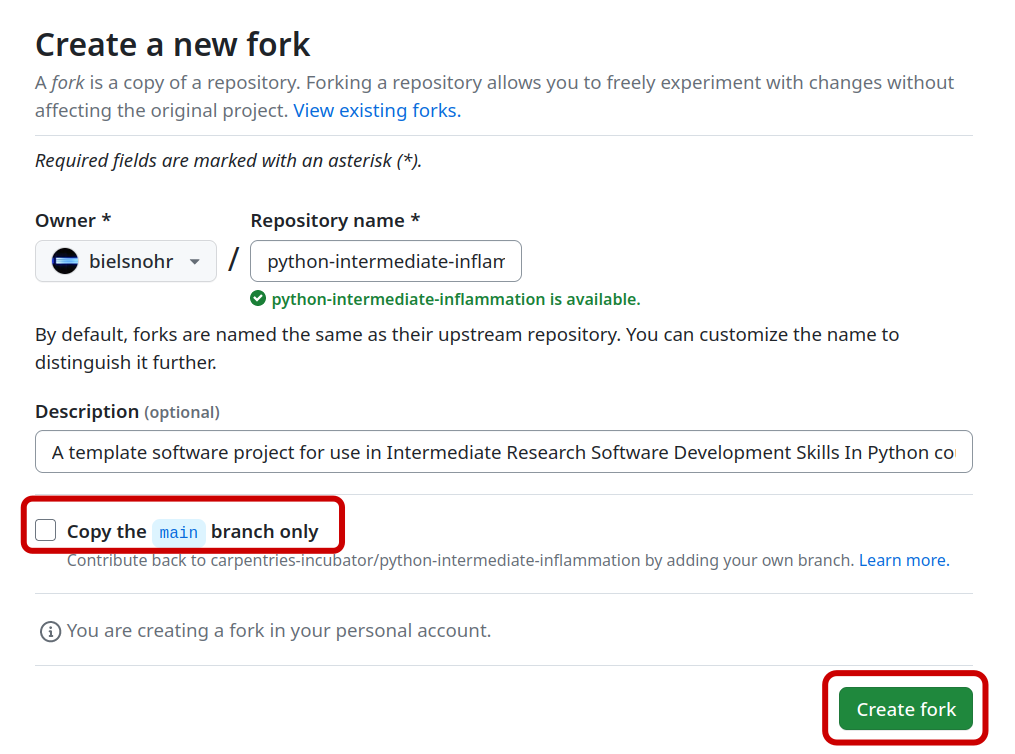

Make sure to select your personal account and set the name of the project to

python-intermediate-inflammation(you can call it anything you like, but it may be easier for future group exercises if everyone uses the same name). Ensure that you uncheck theCopy the main branch onlybutton. This will guarantee we get some other branches needed for later exercises, but for the minute you can ignore them.

- Click the

Create forkbutton and wait for GitHub to import the copy of the repository under your account. -

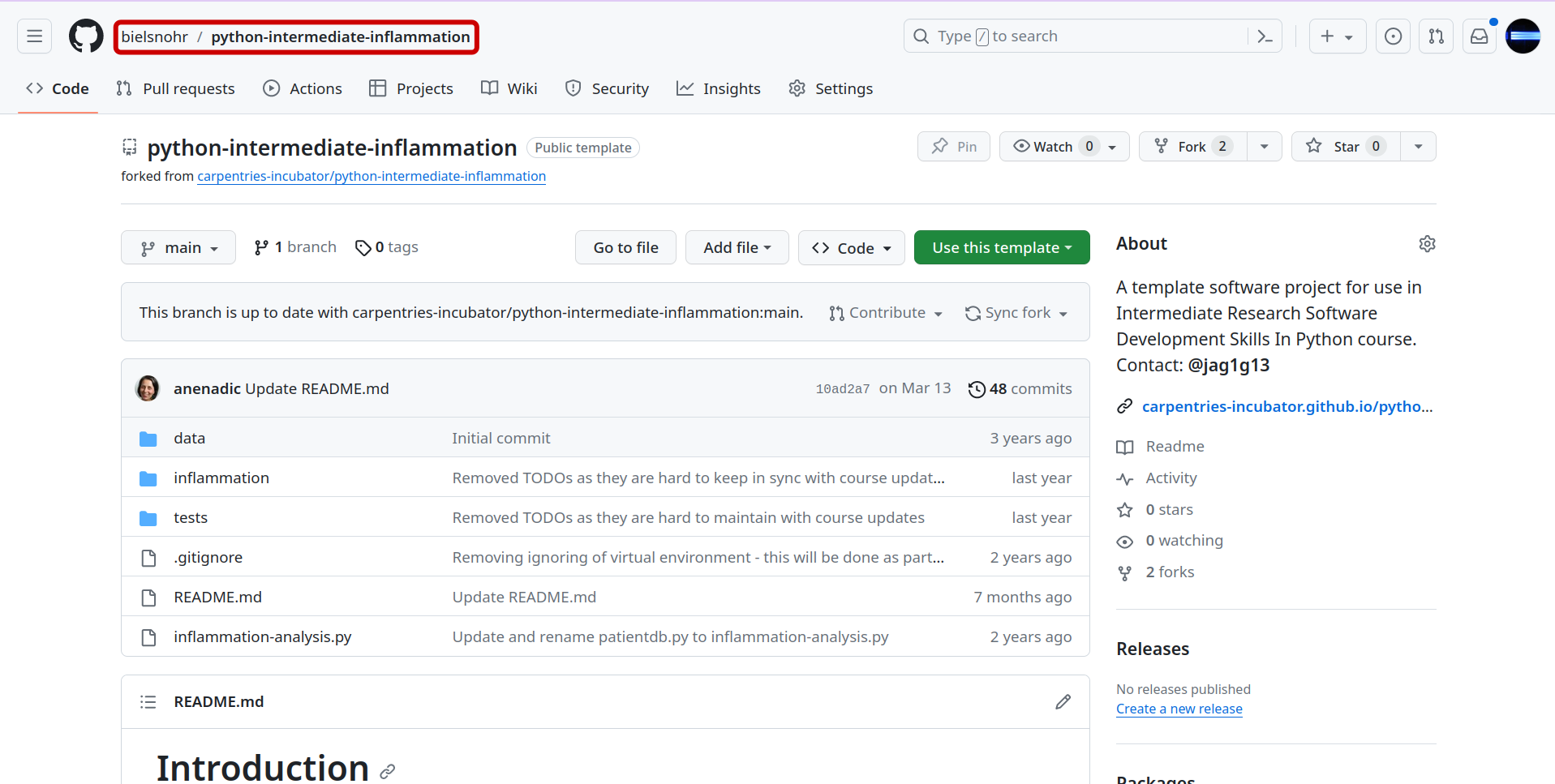

Locate the forked repository under your own GitHub account. You should be taken there automatically after confirming the fork operation, but if not, you can click your username top left to be taken to your user page, and then select the

Repositoriestab, where you can search for this new fork.

Exercise: Obtain the Software Project Locally

Using the command line, clone the copied repository from your GitHub account into the home directory on your computer using SSH. Which command(s) would you use to get a detailed list of contents of the directory you have just cloned?

Solution

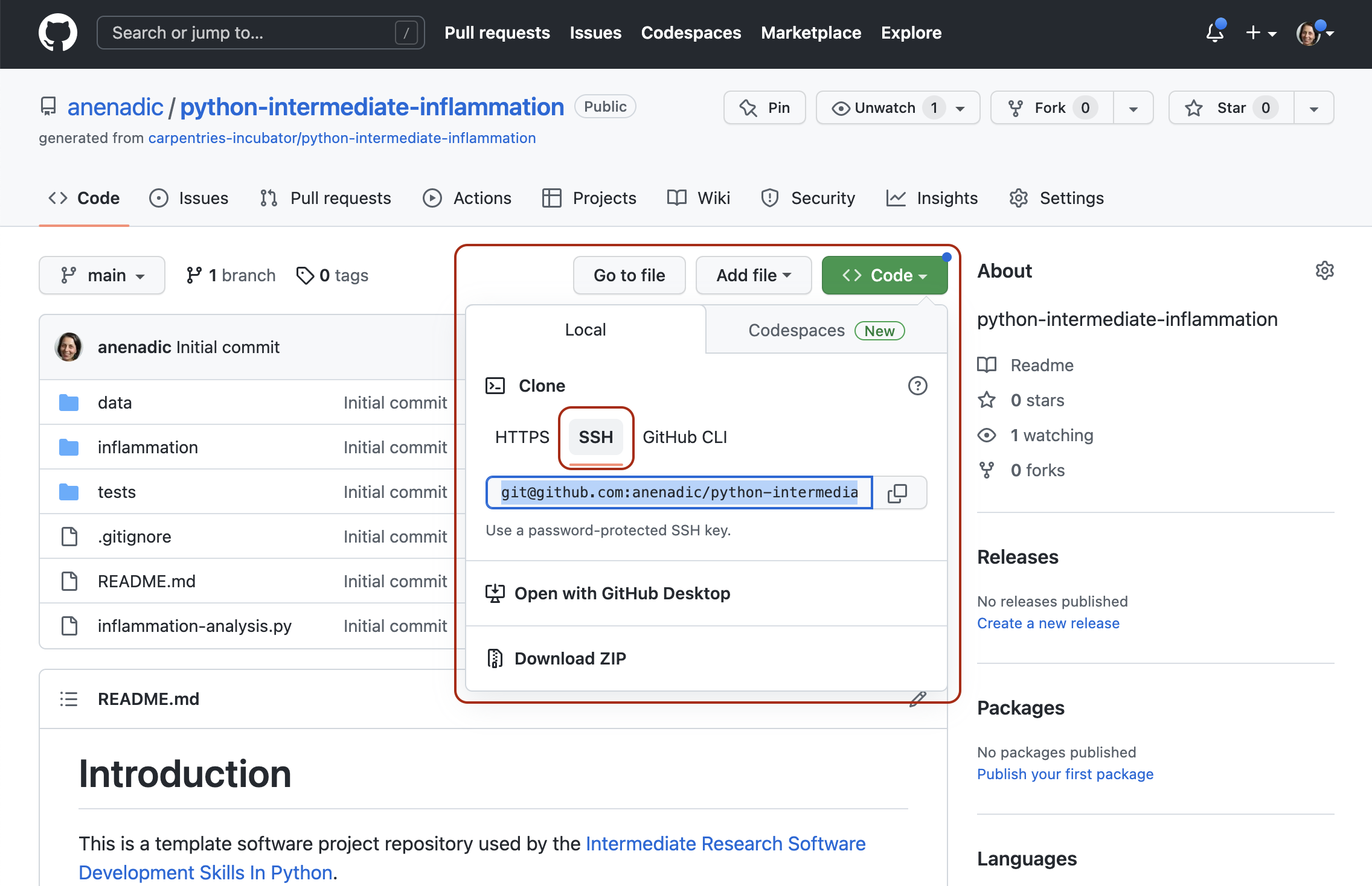

- Find the SSH URL of the software project repository to clone from your GitHub account. Make sure you do not clone the original repository but rather your own fork, as you should be able to push commits to it later on. Also make sure you select the SSH tab and not the HTTPS one - you’ll be able to clone with HTTPS, but not to send your changes back to GitHub!

- Make sure you are located in your home directory in the command line with:

$ cd ~- From your home directory in the command line, do:

$ git clone git@github.com:<YOUR_GITHUB_USERNAME>/python-intermediate-inflammation.gitMake sure you are cloning your fork of the software project and not the original repository.

- Navigate into the cloned repository folder in your command line with:

$ cd python-intermediate-inflammationNote: If you have accidentally copied the HTTPS URL of your repository instead of the SSH one, you can easily fix that from your project folder in the command line with:

$ git remote set-url origin git@github.com:<YOUR_GITHUB_USERNAME>/python-intermediate-inflammation.git

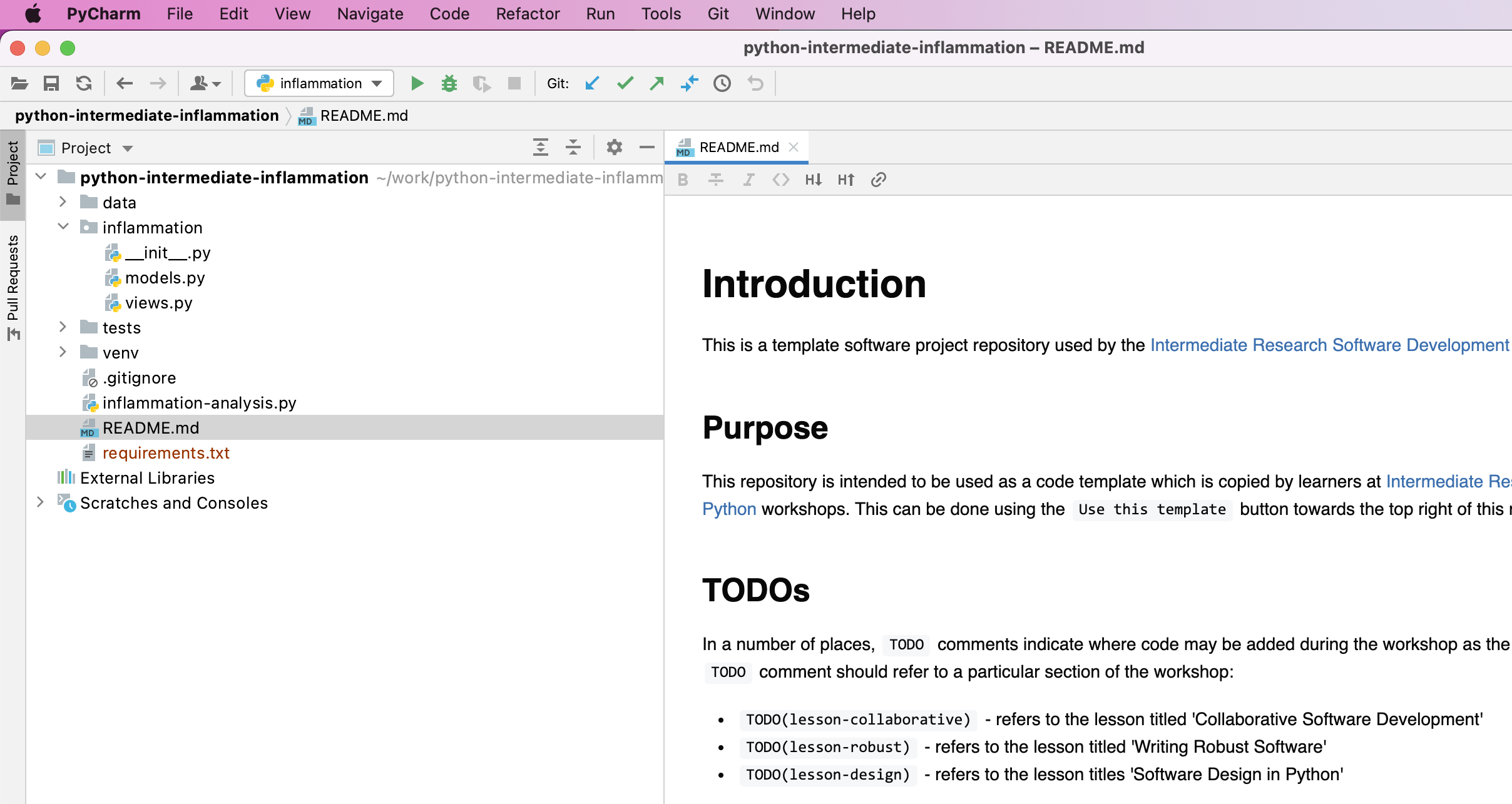

Our Software Project Structure

Let’s inspect the content of the software project from the command line.

From the root directory of the project,

you can use the command ls -l to get a more detailed list of the contents.

You should see something similar to the following.

$ cd ~/python-intermediate-inflammation

$ ls -l

total 24

-rw-r--r-- 1 carpentry users 1055 20 Apr 15:41 README.md

drwxr-xr-x 18 carpentry users 576 20 Apr 15:41 data

drwxr-xr-x 5 carpentry users 160 20 Apr 15:41 inflammation

-rw-r--r-- 1 carpentry users 1122 20 Apr 15:41 inflammation-analysis.py

drwxr-xr-x 4 carpentry users 128 20 Apr 15:41 tests

As can be seen from the above, our software project contains the README file

(that typically describes the project, its usage, installation, authors and how to contribute),

Python script inflammation-analysis.py,

and three directories -

inflammation, data and tests.

The Python script inflammation-analysis.py provides

the main entry point in the application,

and on closer inspection,

we can see that the inflammation directory contains two more Python scripts -

views.py and models.py.

We will have a more detailed look into these shortly.

$ ls -l inflammation

total 24

-rw-r--r-- 1 alex staff 71 29 Jun 09:59 __init__.py

-rw-r--r-- 1 alex staff 838 29 Jun 09:59 models.py

-rw-r--r-- 1 alex staff 649 25 Jun 13:13 views.py

Directory data contains several files with patients’ daily inflammation information

(along with some other files):

$ ls -l data

total 264

-rw-r--r-- 1 alex staff 5365 25 Jun 13:13 inflammation-01.csv

-rw-r--r-- 1 alex staff 5314 25 Jun 13:13 inflammation-02.csv

-rw-r--r-- 1 alex staff 5127 25 Jun 13:13 inflammation-03.csv

-rw-r--r-- 1 alex staff 5367 25 Jun 13:13 inflammation-04.csv

-rw-r--r-- 1 alex staff 5345 25 Jun 13:13 inflammation-05.csv

-rw-r--r-- 1 alex staff 5330 25 Jun 13:13 inflammation-06.csv

-rw-r--r-- 1 alex staff 5342 25 Jun 13:13 inflammation-07.csv

-rw-r--r-- 1 alex staff 5127 25 Jun 13:13 inflammation-08.csv

-rw-r--r-- 1 alex staff 5327 25 Jun 13:13 inflammation-09.csv

-rw-r--r-- 1 alex staff 5342 25 Jun 13:13 inflammation-10.csv

-rw-r--r-- 1 alex staff 5127 25 Jun 13:13 inflammation-11.csv

-rw-r--r-- 1 alex staff 5340 25 Jun 13:13 inflammation-12.csv

-rw-r--r-- 1 alex staff 22554 25 Jun 13:13 python-novice-inflammation-data.zip

-rw-r--r-- 1 alex staff 12 25 Jun 13:13 small-01.csv

-rw-r--r-- 1 alex staff 15 25 Jun 13:13 small-02.csv

-rw-r--r-- 1 alex staff 12 25 Jun 13:13 small-03.csv

As previously mentioned, each of the inflammation data files contains separate trial data for 60 patients over 40 days.

Exercise: Have a Peek at the Data

Which command(s) would you use to list the contents or a first few lines of

data/inflammation-01.csvfile?Solution

- To list the entire content of a file from the project root do:

cat data/inflammation-01.csv.- To list the first 5 lines of a file from the project root do:

head -n 5 data/inflammation-01.csv.0,0,1,3,2,3,6,4,5,7,2,4,11,11,3,8,8,16,5,13,16,5,8,8,6,9,10,10,9,3,3,5,3,5,4,5,3,3,0,1 0,1,1,2,2,5,1,7,4,2,5,5,4,6,6,4,16,11,14,16,14,14,8,17,4,14,13,7,6,3,7,7,5,6,3,4,2,2,1,1 0,1,1,1,4,1,6,4,6,3,6,5,6,4,14,13,13,9,12,19,9,10,15,10,9,10,10,7,5,6,8,6,6,4,3,5,2,1,1,1 0,0,0,1,4,5,6,3,8,7,9,10,8,6,5,12,15,5,10,5,8,13,18,17,14,9,13,4,10,11,10,8,8,6,5,5,2,0,2,0 0,0,1,0,3,2,5,4,8,2,9,3,3,10,12,9,14,11,13,8,6,18,11,9,13,11,8,5,5,2,8,5,3,5,4,1,3,1,1,0

Directory tests contains several tests that have been implemented already.

We will be adding more tests during the course as our code grows.

An important thing to note here is that the structure of the project is not arbitrary. One of the big differences between novice and intermediate software development is planning the structure of your code. This structure includes software components and behavioural interactions between them (including how these components are laid out in a directory and file structure). A novice will often make up the structure of their code as they go along. However, for more advanced software development, we need to plan this structure - called a software architecture - beforehand.

Let’s have a more detailed look into what a software architecture is and which architecture is used by our software project before we start adding more code to it.

Software Architecture

A software architecture is the fundamental structure of a software system that is decided at the beginning of project development based on its requirements and cannot be changed that easily once implemented. It refers to a “bigger picture” of a software system that describes high-level components (modules) of the system and how they interact.

In software design and development,

large systems or programs are often decomposed into a set of smaller modules

each with a subset of functionality.

Typical examples of modules in programming are software libraries;

some software libraries, such as numpy and matplotlib in Python,

are bigger modules that contain several smaller sub-modules.

Another example of modules are classes in object-oriented programming languages.

Programming Modules and Interfaces

Although modules are self-contained and independent elements to a large extent (they can depend on other modules), there are well-defined ways of how they interact with one another. These rules of interaction are called programming interfaces - they define how other modules (clients) can use a particular module. Typically, an interface to a module includes rules on how a module can take input from and how it gives output back to its clients. A client can be a human, in which case we also call these user interfaces. Even smaller functional units such as functions/methods have clearly defined interfaces - a function/method’s definition (also known as a signature) states what parameters it can take as input and what it returns as an output.

There are various software architectures around defining different ways of dividing the code into smaller modules with well defined roles, for example:

- Model–View–Controller (MVC) architecture, which we will look into in detail and use for our software project,

- Service-oriented architecture (SOA), which separates code into distinct services, accessible over a network by consumers (users or other services) that communicate with each other by passing data in a well-defined, shared format (protocol),

- Client-server architecture, where clients request content or service from a server, initiating communication sessions with servers, which await incoming requests (e.g. email, network printing, the Internet),

- Multilayer architecture, is a type of architecture in which presentation, application processing and data management functions are split into distinct layers and may even be physically separated to run on separate machines - some more detail on this later in the course.

Model-View-Controller (MVC) Architecture

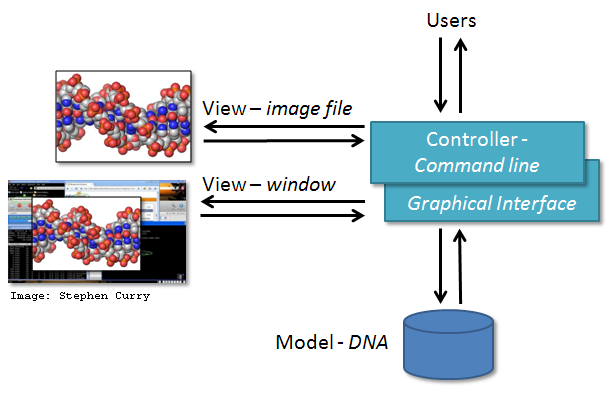

MVC architecture divides the related program logic into three interconnected modules:

- Model (data)

- View (client interface), and

- Controller (processes that handle input/output and manipulate the data).

Model represents the data used by a program and also contains operations/rules for manipulating and changing the data in the model. This may be a database, a file, a single data object or a series of objects - for example a table representing patients’ data.

View is the means of displaying data to users/clients within an application (i.e. provides visualisation of the state of the model). For example, displaying a window with input fields and buttons (Graphical User Interface, GUI) or textual options within a command line (Command Line Interface, CLI) are examples of Views. They include anything that the user can see from the application. While building GUIs is not the topic of this course, we will cover building CLIs in Python in later episodes.

Controller manipulates both the Model and the View. It accepts input from the View and performs the corresponding action on the Model (changing the state of the model) and then updates the View accordingly. For example, on user request, Controller updates a picture on a user’s GitHub profile and then modifies the View by displaying the updated profile back to the user.

MVC Examples

MVC architecture can be applied in scientific applications in the following manner. Model comprises those parts of the application that deal with some type of scientific processing or manipulation of the data, e.g. numerical algorithm, simulation, DNA. View is a visualisation, or format, of the output, e.g. graphical plot, diagram, chart, data table, file. Controller is the part that ties the scientific processing and output parts together, mediating input and passing it to the model or view, e.g. command line options, mouse clicks, input files. For example, the diagram below depicts the use of MVC architecture for the DNA Guide Graphical User Interface application.

Exercise: MVC Application Examples From your Work

Think of some other examples from your work or life where MVC architecture may be suitable or have a discussion with your fellow learners.

Solution

MVC architecture is a popular choice when designing web and mobile applications. Users interact with a web/mobile application by sending various requests to it. Forms to collect users inputs/requests together with the info returned and displayed to the user as a result represent the View. Requests are processed by the Controller, which interacts with the Model to retrieve or update the underlying data. For example, a user may request to view its profile. The Controller retrieves the account information for the user from the Model and passes it to the View for rendering. The user may further interact with the application by asking it to update its personal information. Controller verifies the correctness of the information (e.g. the password satisfies certain criteria, postal address and phone number are in the correct format, etc.) and passes it to the Model for permanent storage. The View is then updated accordingly and the user sees its updated profile details.

Note that not everything fits into the MVC architecture but it is still good to think about how things could be split into smaller units. For a few more examples, have a look at this short article on MVC from CodeAcademy.

Separation of Concerns

Separation of concerns is important when designing software architectures in order to reduce the code’s complexity. Note, however, there are limits to everything - and MVC architecture is no exception. Controller often transcends into Model and View and a clear separation is sometimes difficult to maintain. For example, the Command Line Interface provides both the View (what user sees and how they interact with the command line) and the Controller (invoking of a command) aspects of a CLI application. In Web applications, Controller often manipulates the data (received from the Model) before displaying it to the user or passing it from the user to the Model.

Our Project’s MVC Architecture

Our software project uses the MVC architecture.

The file inflammation-analysis.py is the Controller module

that performs basic statistical analysis over patient data

and provides the main entry point into the application.

The View and Model modules are contained in the files views.py and models.py, respectively,

and are conveniently named.

Data underlying the Model is contained within the directory data -

as we have seen already it contains several files with patients’ daily inflammation information.

We will revisit the software architecture and MVC topics once again in later episodes when we talk in more detail about software’s business/user/solution requirements and software design. We now proceed to set up our virtual development environment and start working with the code using a more convenient graphical tool - IDE PyCharm.

Key Points

Programming interfaces define how individual modules within a software application interact among themselves or how the application itself interacts with its users.

MVC is a software design architecture which divides the application into three interconnected modules: Model (data), View (user interface), and Controller (input/output and data manipulation).

The software project we use throughout this course is an example of an MVC application that manipulates patients’ inflammation data and performs basic statistical analysis using Python.

Virtual Environments For Software Development

Overview

Teaching: 30 min

Exercises: 0 minQuestions

What are virtual environments in software development and why you should use them?

How can we manage Python virtual environments and external (third-party) libraries?

Objectives

Set up a Python virtual environment for our software project using

venvandpip.Run our software from the command line.

Introduction

So far we have cloned our software project from GitHub and inspected its contents and architecture a bit. We now want to run our code to see what it does - let’s do that from the command line. For the most part of the course we will run our code and interact with Git from the command line. While we will develop and debug our code using the PyCharm IDE and it is possible to use Git from PyCharm too, typing commands in the command line allows you to familiarise yourself and learn it well. A bonus is that this knowledge is transferable to running code in other programming languages and is independent from any IDE you may use in the future.

If you have a little peek into our code

(e.g. run cat inflammation/views.py from the project root),

you will see the following two lines somewhere at the top.

from matplotlib import pyplot as plt

import numpy as np

This means that our code requires two external libraries

(also called third-party packages or dependencies) -

numpy and matplotlib.

Python applications often use external libraries that don’t come as part of the standard Python distribution.

This means that you will have to use a package manager tool to install them on your system.

Applications will also sometimes need a

specific version of an external library

(e.g. because they were written to work with feature, class,

or function that may have been updated in more recent versions),

or a specific version of Python interpreter.

This means that each Python application you work with may require a different setup

and a set of dependencies so it is useful to be able to keep these configurations

separate to avoid confusion between projects.

The solution for this problem is to create a self-contained

virtual environment per project,

which contains a particular version of Python installation

plus a number of additional external libraries.

Virtual environments are not just a feature of Python - most modern programming languages use them to isolate libraries for a specific project and make it easier to develop, run, test and share code with others. Even languages that don’t explicitly have virtual environments have other mechanisms that promote per-project library collections. In this episode, we learn how to set up a virtual environment to develop our code and manage our external dependencies.

Virtual Environments

So what exactly are virtual environments, and why use them?

A Python virtual environment helps us create an isolated working copy of a software project that uses a specific version of Python interpreter together with specific versions of a number of external libraries installed into that virtual environment. Python virtual environments are implemented as directories with a particular structure within software projects, containing links to specified dependencies allowing isolation from other software projects on your machine that may require different versions of Python or external libraries.

As more external libraries are added to your Python project over time, you can add them to its specific virtual environment and avoid a great deal of confusion by having separate (smaller) virtual environments for each project rather than one huge global environment with potential package version clashes. Another big motivator for using virtual environments is that they make sharing your code with others much easier (as we will see shortly). Here are some typical scenarios where the use of virtual environments is highly recommended (almost unavoidable):

- You have an older project that only works under Python 2. You do not have the time to migrate the project to Python 3 or it may not even be possible as some of the third party dependencies are not available under Python 3. You have to start another project under Python 3. The best way to do this on a single machine is to set up two separate Python virtual environments.

- One of your Python 3 projects is locked to use a particular older version of a third party dependency. You cannot use the latest version of the dependency as it breaks things in your project. In a separate branch of your project, you want to try and fix problems introduced by the new version of the dependency without affecting the working version of your project. You need to set up a separate virtual environment for your branch to ‘isolate’ your code while testing the new feature.

You do not have to worry too much about specific versions of external libraries that your project depends on most of the time. Virtual environments also enable you to always use the latest available version without specifying it explicitly. They also enable you to use a specific older version of a package for your project, should you need to.

A Specific Python or Package Version is Only Ever Installed Once

Note that you will not have a separate Python or package installations for each of your projects - they will only ever be installed once on your system but will be referenced from different virtual environments.

Managing Python Virtual Environments

There are several commonly used command line tools for managing Python virtual environments:

venv, available by default from the standardPythondistribution fromPython 3.3+virtualenv, needs to be installed separately but supports bothPython 2.7+andPython 3.3+versionspipenv, created to fix certain shortcomings ofvirtualenvconda, package and environment management system (also included as part of the Anaconda Python distribution often used by the scientific community)poetry, a modern Python packaging tool which handles virtual environments automatically

While there are pros and cons for using each of the above,

all will do the job of managing Python virtual environments for you

and it may be a matter of personal preference which one you go for.

In this course, we will use venv to create and manage our virtual environment

(which is the preferred way for Python 3.3+).

The upside is that venv virtual environments created from the command line are

also recognised and picked up automatically by PyCharm IDE,

as we will see in the next episode.

Managing External Packages

Part of managing your (virtual) working environment involves

installing, updating and removing external packages on your system.

The Python package manager tool pip is most commonly used for this -

it interacts and obtains the packages from the central repository called

Python Package Index (PyPI).

pip can now be used with all Python distributions (including Anaconda).

A Note on Anaconda and

condaAnaconda is an open source Python distribution commonly used for scientific programming - it conveniently installs Python, package and environment management

conda, and a number of commonly used scientific computing packages so you do not have to obtain them separately.condais an independent command line tool (available separately from the Anaconda distribution too) with dual functionality: (1) it is a package manager that helps you find Python packages from remote package repositories and install them on your system, and (2) it is also a virtual environment manager. So, you can usecondafor both tasks instead of usingvenvandpip.

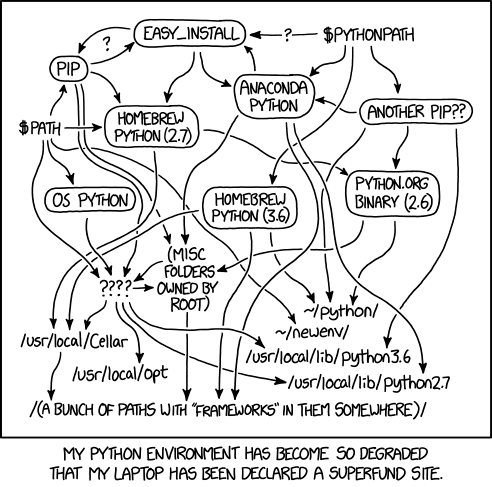

Many Tools for the Job

Installing and managing Python distributions,

external libraries and virtual environments is, well, complex.

There is an abundance of tools for each task,

each with its advantages and disadvantages,

and there are different ways to achieve the same effect

(and even different ways to install the same tool!).

Note that each Python distribution comes with its own version of pip -

and if you have several Python versions installed you have to be extra careful to

use the correct pip to manage external packages for that Python version.

venv and pip are considered the de facto standards for virtual environment

and package management for Python 3.

However, the advantages of using Anaconda and conda are that

you get (most of the) packages needed for scientific code development included with the distribution.

If you are only collaborating with others who are also using Anaconda,

you may find that conda satisfies all your needs.

It is good, however, to be aware of all these tools, and use them accordingly.

As you become more familiar with them you will realise that

equivalent tools work in a similar way even though the command syntax may be different

(and that there are equivalent tools for other programming languages too

to which your knowledge can be ported).

Python Environment Hell

From XKCD (Creative Commons Attribution-NonCommercial 2.5 License)

Let us have a look at how we can create and manage virtual environments from the command line

using venv and manage packages using pip.

Creating Virtual Environments Using venv

Creating a virtual environment with venv is done by executing the following command:

$ python3 -m venv /path/to/new/virtual/environment

where /path/to/new/virtual/environment is a path to a directory where you want to place it -

conventionally within your software project so they are co-located.

This will create the target directory for the virtual environment

(and any parent directories that don’t exist already).

For our project let’s create a virtual environment called “venv”. First, ensure you are within the project root directory, then:

$ python3 -m venv venv

If you list the contents of the newly created directory “venv”, on a Mac or Linux system (slightly different on Windows as explained below) you should see something like:

$ ls -l venv

total 8

drwxr-xr-x 12 alex staff 384 5 Oct 11:47 bin

drwxr-xr-x 2 alex staff 64 5 Oct 11:47 include

drwxr-xr-x 3 alex staff 96 5 Oct 11:47 lib

-rw-r--r-- 1 alex staff 90 5 Oct 11:47 pyvenv.cfg

So, running the python3 -m venv venv command created the target directory called “venv”

containing:

pyvenv.cfgconfiguration file with a home key pointing to the Python installation from which the command was run,binsubdirectory (calledScriptson Windows) containing a symlink of the Python interpreter binary used to create the environment and the standard Python library,lib/pythonX.Y/site-packagessubdirectory (calledLib\site-packageson Windows) to contain its own independent set of installed Python packages isolated from other projects,- various other configuration and supporting files and subdirectories.

Naming Virtual Environments

What is a good name to use for a virtual environment? Using “venv” or “.venv” as the name for an environment and storing it within the project’s directory seems to be the recommended way - this way when you come across such a subdirectory within a software project, by convention you know it contains its virtual environment details. A slight downside is that all different virtual environments on your machine then use the same name and the current one is determined by the context of the path you are currently located in. A (non-conventional) alternative is to use your project name for the name of the virtual environment, with the downside that there is nothing to indicate that such a directory contains a virtual environment. In our case, we have settled to use the name “venv” instead of “.venv” since it is not a hidden directory and we want it to be displayed by the command line when listing directory contents (the “.” in its name that would, by convention, make it hidden). In the future, you will decide what naming convention works best for you. Here are some references for each of the naming conventions:

- The Hitchhiker’s Guide to Python notes that “venv” is the general convention used globally

- The Python Documentation indicates that “.venv” is common

- “venv” vs “.venv” discussion

Once you’ve created a virtual environment, you will need to activate it.

On Mac or Linux, it is done as:

$ source venv/bin/activate

(venv) $

On Windows, recall that we have Scripts directory instead of bin

and activating a virtual environment is done as:

$ source venv/Scripts/activate

(venv) $

Activating the virtual environment will change your command line’s prompt to show what virtual environment you are currently using (indicated by its name in round brackets at the start of the prompt), and modify the environment so that running Python will get you the particular version of Python configured in your virtual environment.

You can verify you are using your virtual environment’s version of Python

by checking the path using the command which:

(venv) $ which python3

/home/alex/python-intermediate-inflammation/venv/bin/python3

When you’re done working on your project, you can exit the environment with:

(venv) $ deactivate

If you’ve just done the deactivate,

ensure you reactivate the environment ready for the next part:

$ source venv/bin/activate

(venv) $

Python Within A Virtual Environment

Within a virtual environment, commands

pythonandpipwill refer to the version of Python you created the environment with. If you create a virtual environment withpython3 -m venv venv,pythonwill refer topython3andpipwill refer topip3.On some machines with Python 2 installed,

pythoncommand may refer to the copy of Python 2 installed outside of the virtual environment instead, which can cause confusion. You can always check which version of Python you are using in your virtual environment with the commandwhich pythonto be absolutely sure. We continue usingpython3andpip3in this material to avoid confusion for those users, but commandspythonandpipmay work for you as expected.

Note that, since our software project is being tracked by Git, the newly created virtual environment will show up in version control - we will see how to handle it using Git in one of the subsequent episodes.

Installing External Packages Using pip

We noticed earlier that our code depends on two external packages/libraries -

numpy and matplotlib.

In order for the code to run on your machine,

you need to install these two dependencies into your virtual environment.

To install the latest version of a package with pip

you use pip’s install command and specify the package’s name, e.g.:

(venv) $ pip3 install numpy

(venv) $ pip3 install matplotlib

or like this to install multiple packages at once for short:

(venv) $ pip3 install numpy matplotlib

How About

python3 -m pip install?Why are we not using

pipas an argument topython3command, in the same way we did withvenv(i.e.python3 -m venv)?python3 -m pip installshould be used according to the official Pip documentation; other official documentation still seems to have a mixture of usages. Core Python developer Brett Cannon offers a more detailed explanation of edge cases when the two options may produce different results and recommendspython3 -m pip install. We kept the old-style command (pip3 install) as it seems more prevalent among developers at the moment - but it may be a convention that will soon change and certainly something you should consider.

If you run the pip3 install command on a package that is already installed,

pip will notice this and do nothing.

To install a specific version of a Python package

give the package name followed by == and the version number,

e.g. pip3 install numpy==1.21.1.

To specify a minimum version of a Python package,

you can do pip3 install numpy>=1.20.

To upgrade a package to the latest version, e.g. pip3 install --upgrade numpy.

To display information about a particular installed package do:

(venv) $ pip3 show numpy

Name: numpy

Version: 1.21.2

Summary: NumPy is the fundamental package for array computing with Python.

Home-page: https://www.numpy.org

Author: Travis E. Oliphant et al.

Author-email: None

License: BSD

Location: /Users/alex/work/SSI/Carpentries/python-intermediate-inflammation/inflammation/lib/python3.9/site-packages

Requires:

Required-by: matplotlib

To list all packages installed with pip (in your current virtual environment):

(venv) $ pip3 list

Package Version

--------------- -------

cycler 0.11.0

fonttools 4.28.1

kiwisolver 1.3.2

matplotlib 3.5.0

numpy 1.21.4

packaging 21.2

Pillow 8.4.0

pip 21.1.3

pyparsing 2.4.7

python-dateutil 2.8.2

setuptools 57.0.0

setuptools-scm 6.3.2

six 1.16.0

tomli 1.2.2

To uninstall a package installed in the virtual environment do: pip3 uninstall package-name.

You can also supply a list of packages to uninstall at the same time.

Exporting/Importing Virtual Environments Using pip

You are collaborating on a project with a team so, naturally,

you will want to share your environment with your collaborators

so they can easily ‘clone’ your software project with all of its dependencies

and everyone can replicate equivalent virtual environments on their machines.

pip has a handy way of exporting, saving and sharing virtual environments.

To export your active environment -

use pip3 freeze command to produce a list of packages installed in the virtual environment.

A common convention is to put this list in a requirements.txt file:

(venv) $ pip3 freeze > requirements.txt

(venv) $ cat requirements.txt

cycler==0.11.0

fonttools==4.28.1

kiwisolver==1.3.2

matplotlib==3.5.0

numpy==1.21.4

packaging==21.2

Pillow==8.4.0

pyparsing==2.4.7

python-dateutil==2.8.2

setuptools-scm==6.3.2

six==1.16.0

tomli==1.2.2

The first of the above commands will create a requirements.txt file in your current directory.

Yours may look a little different,

depending on the version of the packages you have installed,

as well as any differences in the packages that they themselves use.

The requirements.txt file can then be committed to a version control system

(we will see how to do this using Git in one of the following episodes)

and get shipped as part of your software and shared with collaborators and/or users.

They can then replicate your environment

and install all the necessary packages from the project root as follows:

(venv) $ pip3 install -r requirements.txt

As your project grows - you may need to update your environment for a variety of reasons.

For example, one of your project’s dependencies has just released a new version

(dependency version number update),

you need an additional package for data analysis (adding a new dependency)

or you have found a better package and no longer need the older package

(adding a new and removing an old dependency).

What you need to do in this case

(apart from installing the new and removing the packages that are no longer needed

from your virtual environment)

is update the contents of the requirements.txt file accordingly

by re-issuing pip freeze command

and propagate the updated requirements.txt file to your collaborators

via your code sharing platform (e.g. GitHub).

Official Documentation

For a full list of options and commands, consult the official

venvdocumentation and the Installing Python Modules withpipguide. Also check out the guide “Installing packages usingpipand virtual environments”.

Running Python Scripts From Command Line

Congratulations!

Your environment is now activated and set up

to run our inflammation-analysis.py script from the command line.

You should already be located in the root of the python-intermediate-inflammation directory

(if not, please navigate to it from the command line now).

To run the script, type the following command:

(venv) $ python3 inflammation-analysis.py

usage: inflammation-analysis.py [-h] infiles [infiles ...]

inflammation-analysis.py: error: the following arguments are required: infiles

In the above command, we tell the command line two things:

- to find a Python interpreter (in this case, the one that was configured via the virtual environment), and

- to use it to run our script

inflammation-analysis.py, which resides in the current directory.

As we can see, the Python interpreter ran our script, which threw an error -

inflammation-analysis.py: error: the following arguments are required: infiles.

It looks like the script expects a list of input files to process,

so this is expected behaviour since we don’t supply any.

We will fix this error in a moment.

Key Points

Virtual environments keep Python versions and dependencies required by different projects separate.

A virtual environment is itself a directory structure.

Use

venvto create and manage Python virtual environments.Use

pipto install and manage Python external (third-party) libraries.

pipallows you to declare all dependencies for a project in a separate file (by convention calledrequirements.txt) which can be shared with collaborators/users and used to replicate a virtual environment.Use

pip3 freeze > requirements.txtto take snapshot of your project’s dependencies.Use

pip3 install -r requirements.txtto replicate someone else’s virtual environment on your machine from therequirements.txtfile.

Integrated Software Development Environments

Overview

Teaching: 25 min

Exercises: 15 minQuestions

What are Integrated Development Environments (IDEs)?

What are the advantages of using IDEs for software development?

Objectives

Set up a (virtual) development environment in PyCharm

Use PyCharm to run a Python script

Introduction

As we have seen in the previous episode - even a simple software project is typically split into smaller functional units and modules, which are kept in separate files and subdirectories. As your code starts to grow and becomes more complex, it will involve many different files and various external libraries. You will need an application to help you manage all the complexities of, and provide you with some useful (visual) facilities for, the software development process. Such clever and useful graphical software development applications are called Integrated Development Environments (IDEs).

Integrated Development Environments

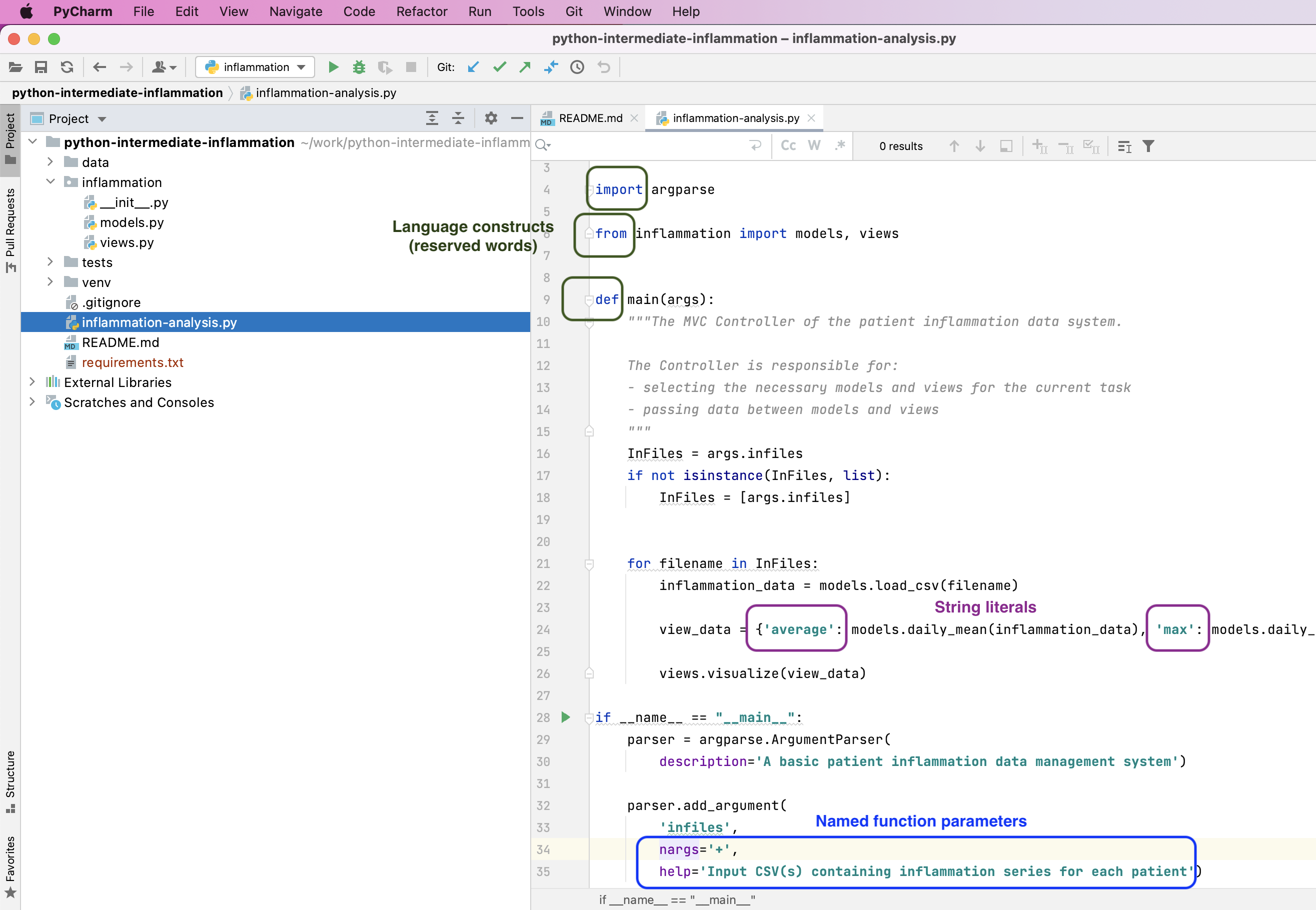

An IDE normally consists of at least a source code editor, build automation tools and a debugger. The boundaries between modern IDEs and other aspects of the broader software development process are often blurred. Nowadays IDEs also offer version control support, tools to construct graphical user interfaces (GUI) and web browser integration for web app development, source code inspection for dependencies and many other useful functionalities. The following is a list of the most commonly seen IDE features:

- syntax highlighting - to show the language constructs, keywords and the syntax errors with visually distinct colours and font effects

- code completion - to speed up programming by offering a set of possible (syntactically correct) code options

- code search - finding package, class, function and variable declarations, their usages and referencing

- version control support - to interact with source code repositories

- debugging - for setting breakpoints in the code editor, step-by-step execution of code and inspection of variables

IDEs are extremely useful and modern software development would be very hard without them. There are a number of IDEs available for Python development; a good overview is available from the Python Project Wiki. In addition to IDEs, there are also a number of code editors that have Python support. Code editors can be as simple as a text editor with syntax highlighting and code formatting capabilities (e.g. GNU EMACS, Vi/Vim). Most good code editors can also execute code and control a debugger, and some can also interact with a version control system. Compared to an IDE, a good dedicated code editor is usually smaller and quicker, but often less feature-rich. You will have to decide which one is the best for you - in this course we will learn how to use PyCharm, a free, open source Python IDE. Some popular alternatives include free and open source IDE Spyder and Microsoft’s free Visual Studio Code.

Using the PyCharm IDE

Let’s open our project in PyCharm now and familiarise ourselves with some commonly used features.

Opening a Software Project

If you don’t have PyCharm running yet, start it up now.

You can skip the initial configuration steps which just go through

selecting a theme and other aspects.

You should be presented with a dialog box that asks you what you want to do,

e.g. Create New Project, Open, or Check out from Version Control.

Select Open and find the software project directory

python-intermediate-inflammation you cloned earlier.

This directory is now the current working directory for PyCharm,

so when we run scripts from PyCharm, this is the directory they will run from.

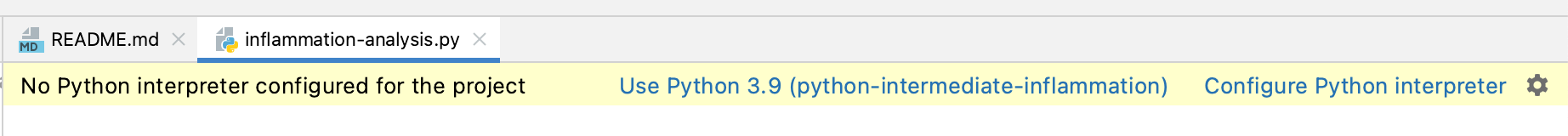

PyCharm will show you a ‘Tip of the Day’ window which you can safely ignore and close for now. You may also get a warning ‘No Python interpreter configured for the project’ - we will deal with this shortly after we familiarise ourselves with the PyCharm environment. You will notice the IDE shows you a project/file navigator window on the left hand side, to traverse and select the files (and any subdirectories) within the working directory, and an editor window on the right. At the bottom, you would typically have a panel for version control, terminal (the command line within PyCharm) and a TODO list.

Select the inflammation-analysis.py file in the project navigator on the left

so that its contents are displayed in the editor window.

You may notice a warning about the missing Python interpreter

at the top of the editor panelshowing inflammation-analysis.py file -

this is one of the first things you will have to configure for your project

before you can do any work.

You may take the shortcut and click on one of the offered options above but we want to take you through the whole process of setting up your environment in PyCharm as this is important conceptually.

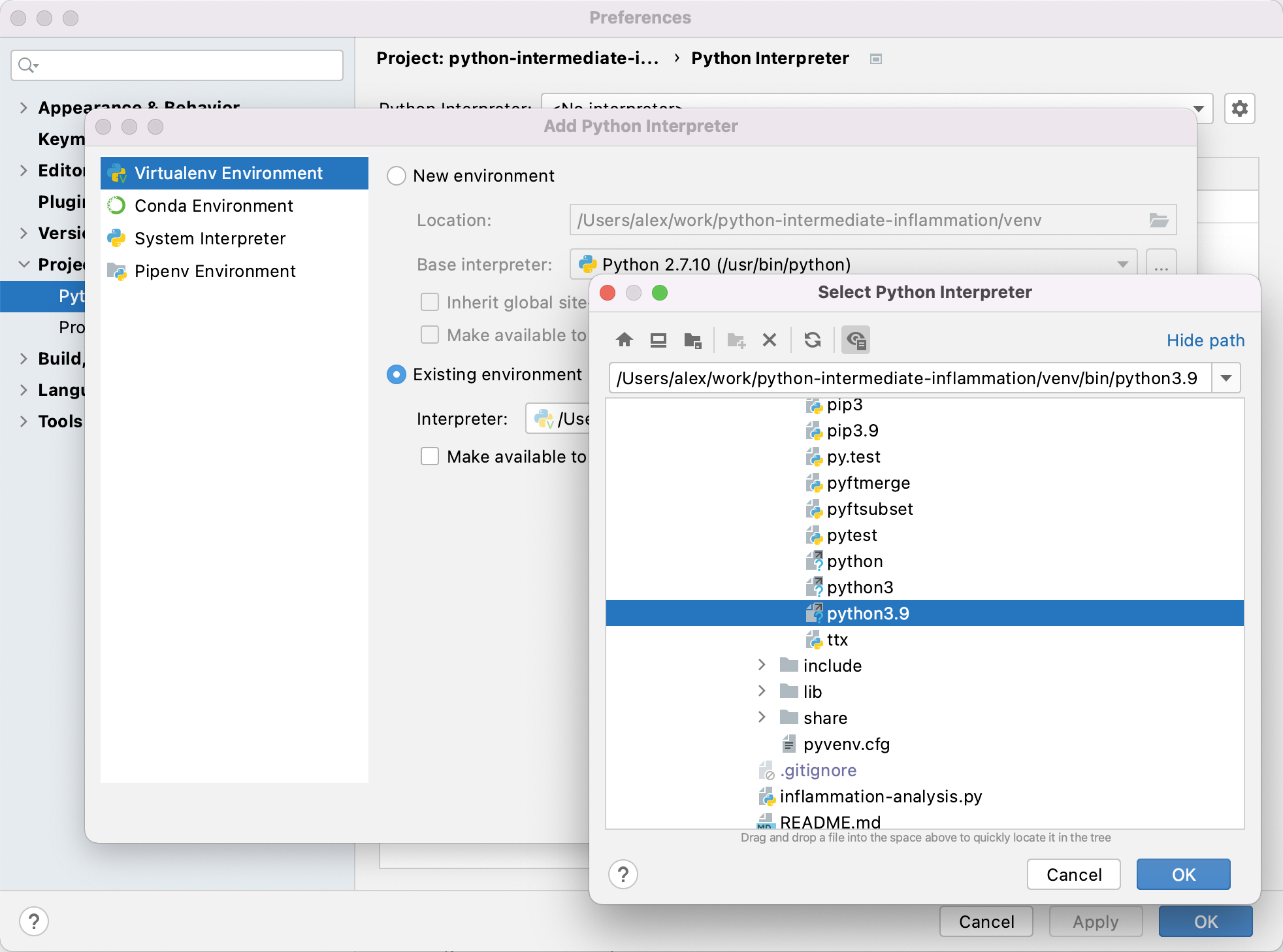

Configuring a Virtual Environment in PyCharm

Before you can run the code from PyCharm, you need to explicitly specify the path to the Python interpreter on your system. The same goes for any dependencies your code may have - you need to tell PyCharm where to find them - much like we did from the command line in the previous episode. Luckily for us, we have already set up a virtual environment for our project from the command line and PyCharm is clever enough to understand it.

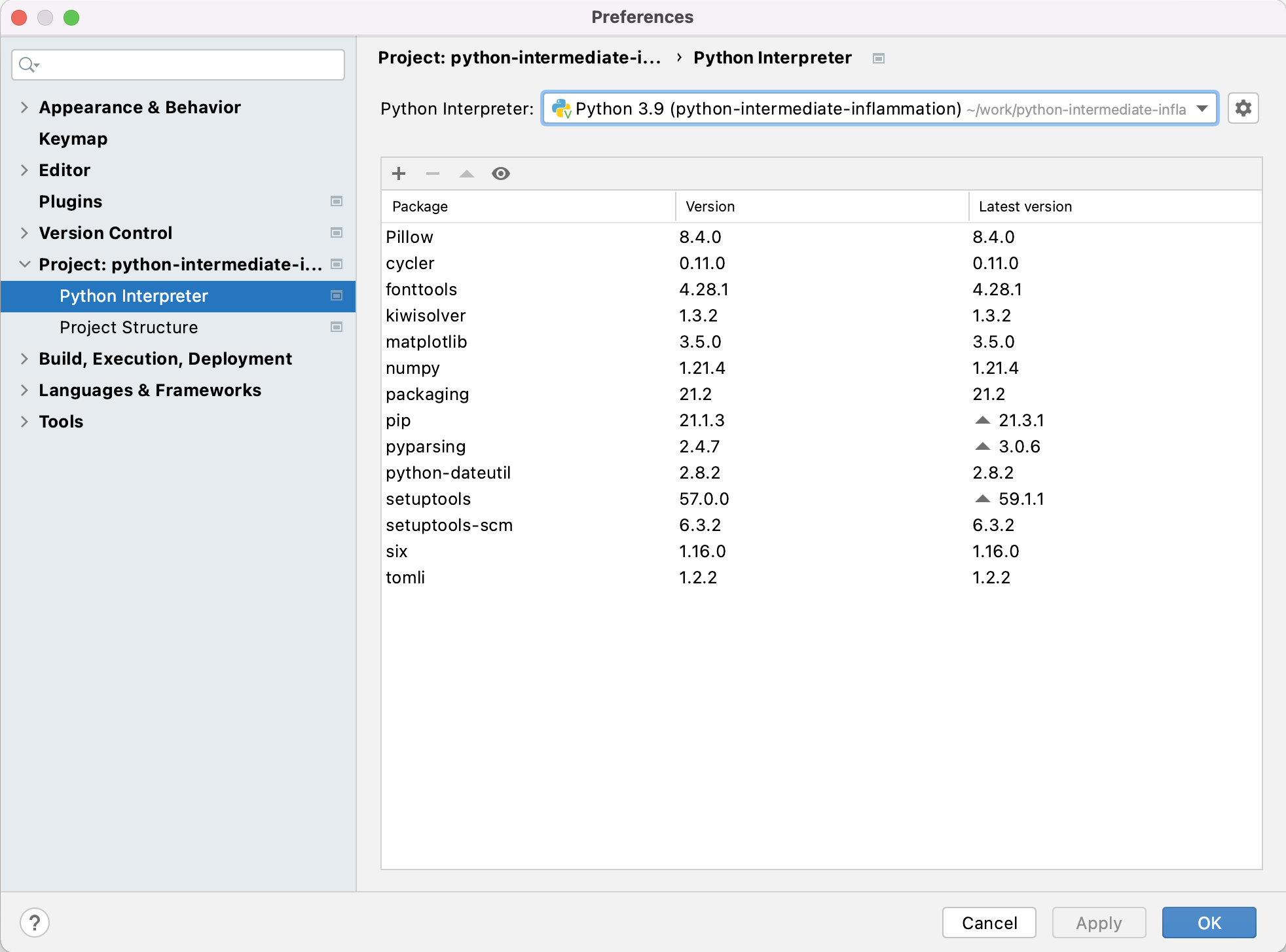

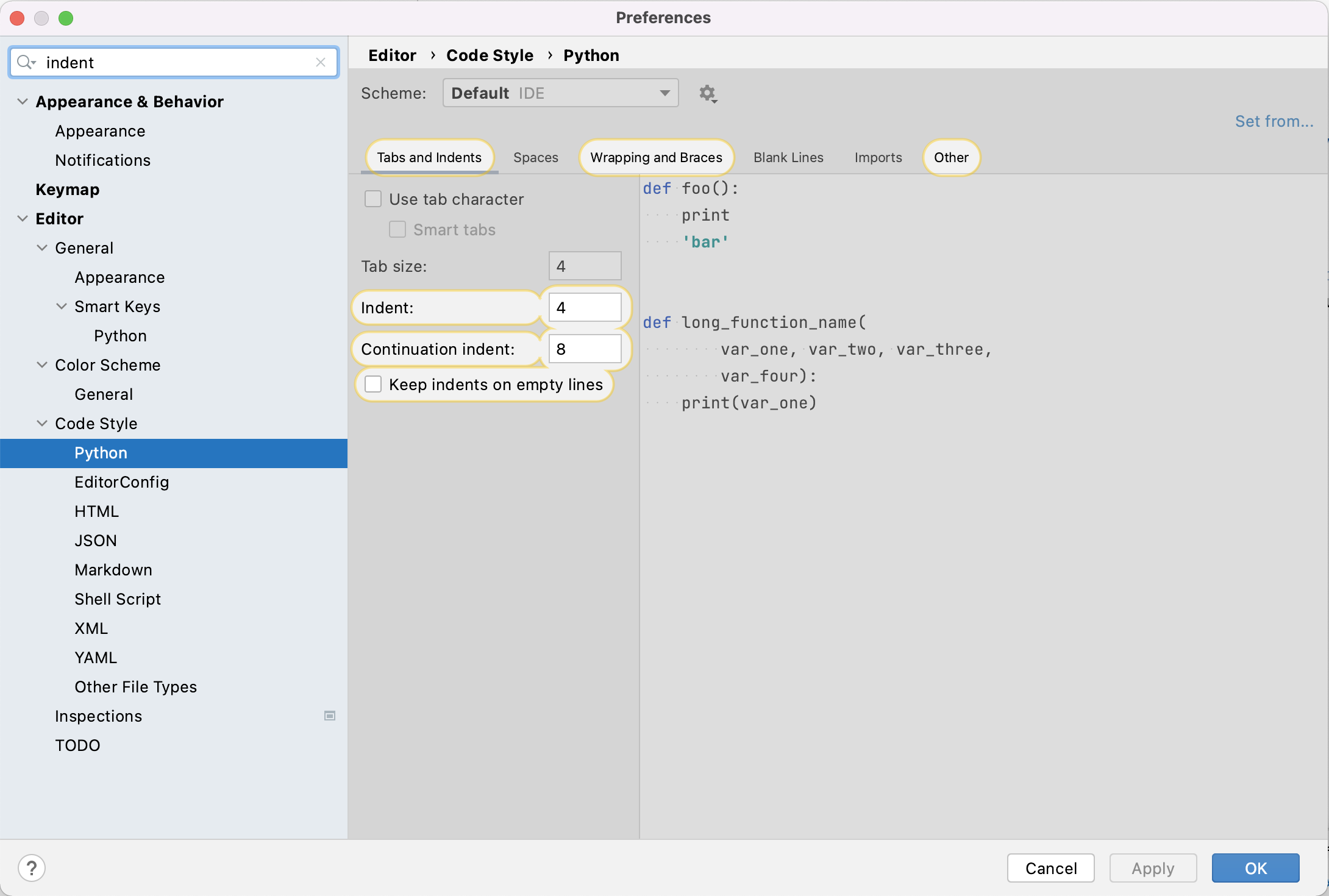

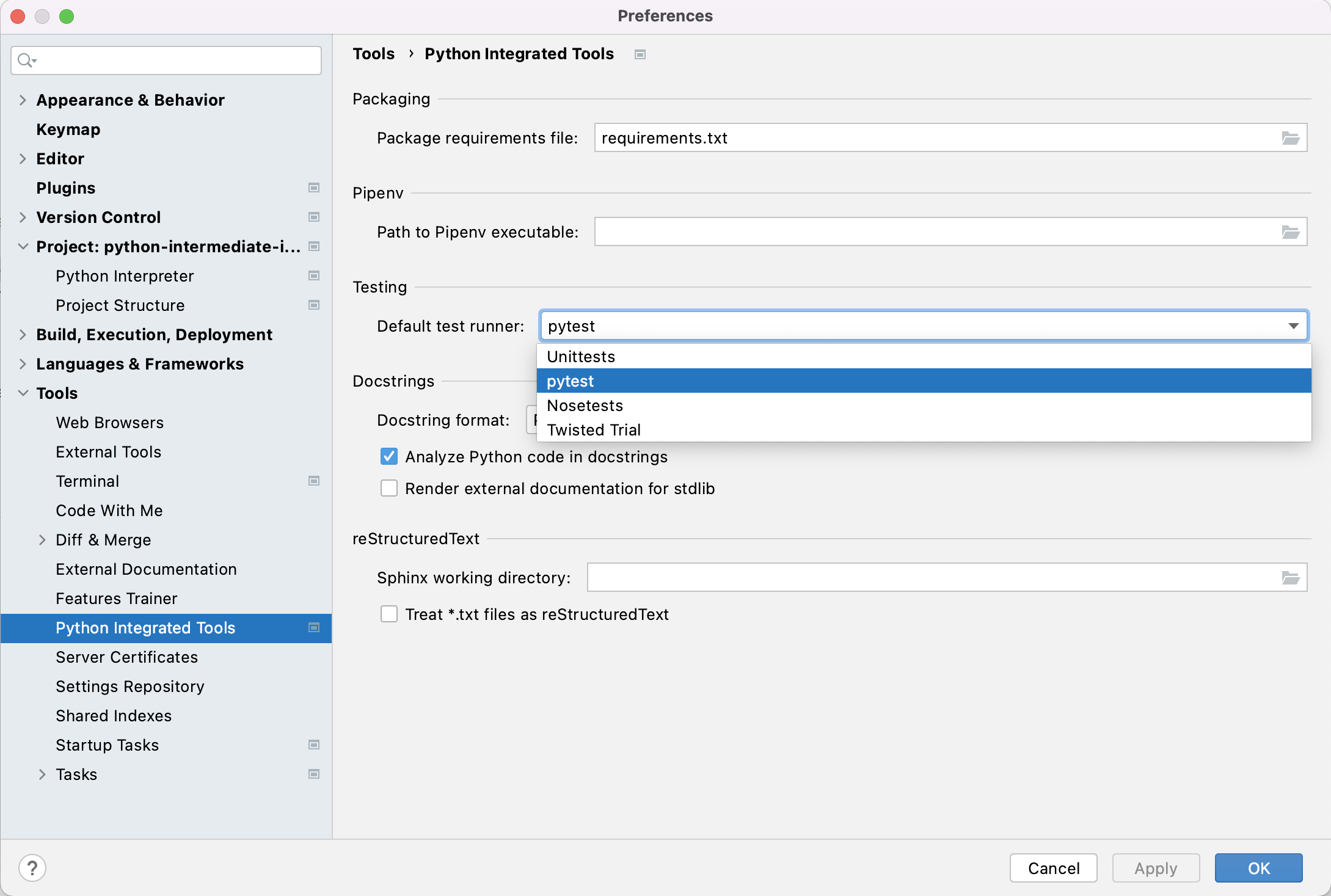

Adding a Python Interpreter

- Select either

PyCharm>Preferences(Mac) orFile>Settings(Linux, Windows). - In the preferences window that appears,

select

Project: python-intermediate-inflammation>Python Interpreterfrom the left. You’ll see a number of Python packages displayed as a list, and importantly above that, the current Python interpreter that is being used. These may be blank or set to<No interpreter>, or possibly the default version of Python installed on your system, e.g.Python 2.7 /usr/bin/python2.7, which we do not want to use in this instance. - Select the cog-like button in the top right, then

Add Local...(orAdd...depending on your PyCharm version). AnAdd Python Interpreterwindow will appear. - Select

Virtualenv Environmentfrom the list on the left and ensure thatExisting environmentcheckbox is selected within the popup window. In theInterpreterfield point to the Python 3 executable inside your virtual environment’sbindirectory (make sure you navigate to it and select it from the file browser rather than just accept the default offered by PyCharm). Note that there is also an option to create a new virtual environment, but we are not using that option as we want to reuse the one we created from the command line in the previous episode.

- Select

Make available to all projectscheckbox so we can also use this environment for other projects if we wish. - Select

OKin theAdd Python Interpreterwindow. Back in thePreferenceswindow, you should select “Python 3.9 (python-intermediate-inflammation)” or similar (that you’ve just added) from theProject Interpreterdrop-down list.

Note that a number of external libraries have magically appeared under the

“Python 3.9 (python-intermediate-inflammation)” interpreter,

including numpy and matplotlib.

PyCharm has recognised the virtual environment we created from the command line using venv

and has added these libraries effectively replicating our virtual environment in PyCharm

(referred to as “Python 3.9 (python-intermediate-inflammation)”).

Also note that, although the names are not the same - this is one and the same virtual environment and changes done to it in PyCharm will propagate to the command line and vice versa. Let’s see this in action through the following exercise.

Exercise: Compare External Libraries in the Command Line and PyCharm

Can you recall two places where information about our project’s dependencies can be found from the command line? Compare that information with the equivalent configuration in PyCharm.

Hint: We can use an argument to

pip, or find the packages directly in a subdirectory of our virtual environment directory “venv”.Solution

From the previous episode, you may remember that we can get the list of packages in the current virtual environment using the

pip3 listcommand:(venv) $ pip3 listPackage Version --------------- ------- cycler 0.11.0 fonttools 4.28.1 kiwisolver 1.3.2 matplotlib 3.5.0 numpy 1.21.4 packaging 21.2 Pillow 8.4.0 pip 21.1.3 pyparsing 2.4.7 python-dateutil 2.8.2 setuptools 57.0.0 setuptools-scm 6.3.2 six 1.16.0 tomli 1.2.2However,

pip3 listshows all the packages in the virtual environment - if we want to see only the list of packages that we installed, we can use thepip3 freezecommand instead:(venv) $ pip3 freezecycler==0.11.0 fonttools==4.28.1 kiwisolver==1.3.2 matplotlib==3.5.0 numpy==1.21.4 packaging==21.2 Pillow==8.4.0 pyparsing==2.4.7 python-dateutil==2.8.2 setuptools-scm==6.3.2 six==1.16.0 tomli==1.2.2We see

pipinpip3 listbut not inpip3 freezeas we did not install it usingpip. Remember that we usepip3 freezeto update ourrequirements.txtfile, to keep a list of the packages our virtual environment includes. Python will not do this automatically; we have to manually update the file when our requirements change using:pip3 freeze > requirements.txtIf we want, we can also see the list of packages directly in the following subdirectory of

venv:(venv) $ ls -l venv/lib/python3.9/site-packagestotal 1088 drwxr-xr-x 103 alex staff 3296 17 Nov 11:55 PIL drwxr-xr-x 9 alex staff 288 17 Nov 11:55 Pillow-8.4.0.dist-info drwxr-xr-x 6 alex staff 192 17 Nov 11:55 __pycache__ drwxr-xr-x 5 alex staff 160 17 Nov 11:53 _distutils_hack drwxr-xr-x 8 alex staff 256 17 Nov 11:55 cycler-0.11.0.dist-info -rw-r--r-- 1 alex staff 14519 17 Nov 11:55 cycler.py drwxr-xr-x 14 alex staff 448 17 Nov 11:55 dateutil -rw-r--r-- 1 alex staff 152 17 Nov 11:53 distutils-precedence.pth drwxr-xr-x 31 alex staff 992 17 Nov 11:55 fontTools drwxr-xr-x 9 alex staff 288 17 Nov 11:55 fonttools-4.28.1.dist-info drwxr-xr-x 8 alex staff 256 17 Nov 11:55 kiwisolver-1.3.2.dist-info -rwxr-xr-x 1 alex staff 216968 17 Nov 11:55 kiwisolver.cpython-39-darwin.so drwxr-xr-x 92 alex staff 2944 17 Nov 11:55 matplotlib -rw-r--r-- 1 alex staff 569 17 Nov 11:55 matplotlib-3.5.0-py3.9-nspkg.pth drwxr-xr-x 20 alex staff 640 17 Nov 11:55 matplotlib-3.5.0.dist-info drwxr-xr-x 7 alex staff 224 17 Nov 11:55 mpl_toolkits drwxr-xr-x 39 alex staff 1248 17 Nov 11:55 numpy drwxr-xr-x 11 alex staff 352 17 Nov 11:55 numpy-1.21.4.dist-info drwxr-xr-x 15 alex staff 480 17 Nov 11:55 packaging drwxr-xr-x 10 alex staff 320 17 Nov 11:55 packaging-21.2.dist-info drwxr-xr-x 8 alex staff 256 17 Nov 11:53 pip drwxr-xr-x 10 alex staff 320 17 Nov 11:53 pip-21.1.3.dist-info drwxr-xr-x 7 alex staff 224 17 Nov 11:53 pkg_resources -rw-r--r-- 1 alex staff 90 17 Nov 11:55 pylab.py drwxr-xr-x 8 alex staff 256 17 Nov 11:55 pyparsing-2.4.7.dist-info -rw-r--r-- 1 alex staff 273365 17 Nov 11:55 pyparsing.py drwxr-xr-x 9 alex staff 288 17 Nov 11:55 python_dateutil-2.8.2.dist-info drwxr-xr-x 41 alex staff 1312 17 Nov 11:53 setuptools drwxr-xr-x 11 alex staff 352 17 Nov 11:53 setuptools-57.0.0.dist-info drwxr-xr-x 19 alex staff 608 17 Nov 11:55 setuptools_scm drwxr-xr-x 10 alex staff 320 17 Nov 11:55 setuptools_scm-6.3.2.dist-info drwxr-xr-x 8 alex staff 256 17 Nov 11:55 six-1.16.0.dist-info -rw-r--r-- 1 alex staff 34549 17 Nov 11:55 six.py drwxr-xr-x 8 alex staff 256 17 Nov 11:55 tomli drwxr-xr-x 7 alex staff 224 17 Nov 11:55 tomli-1.2.2.dist-infoFinally, if you look at both the contents of

venv/lib/python3.9/site-packagesandrequirements.txtand compare that with the packages shown in PyCharm’s Python Interpreter Configuration - you will see that they all contain equivalent information.

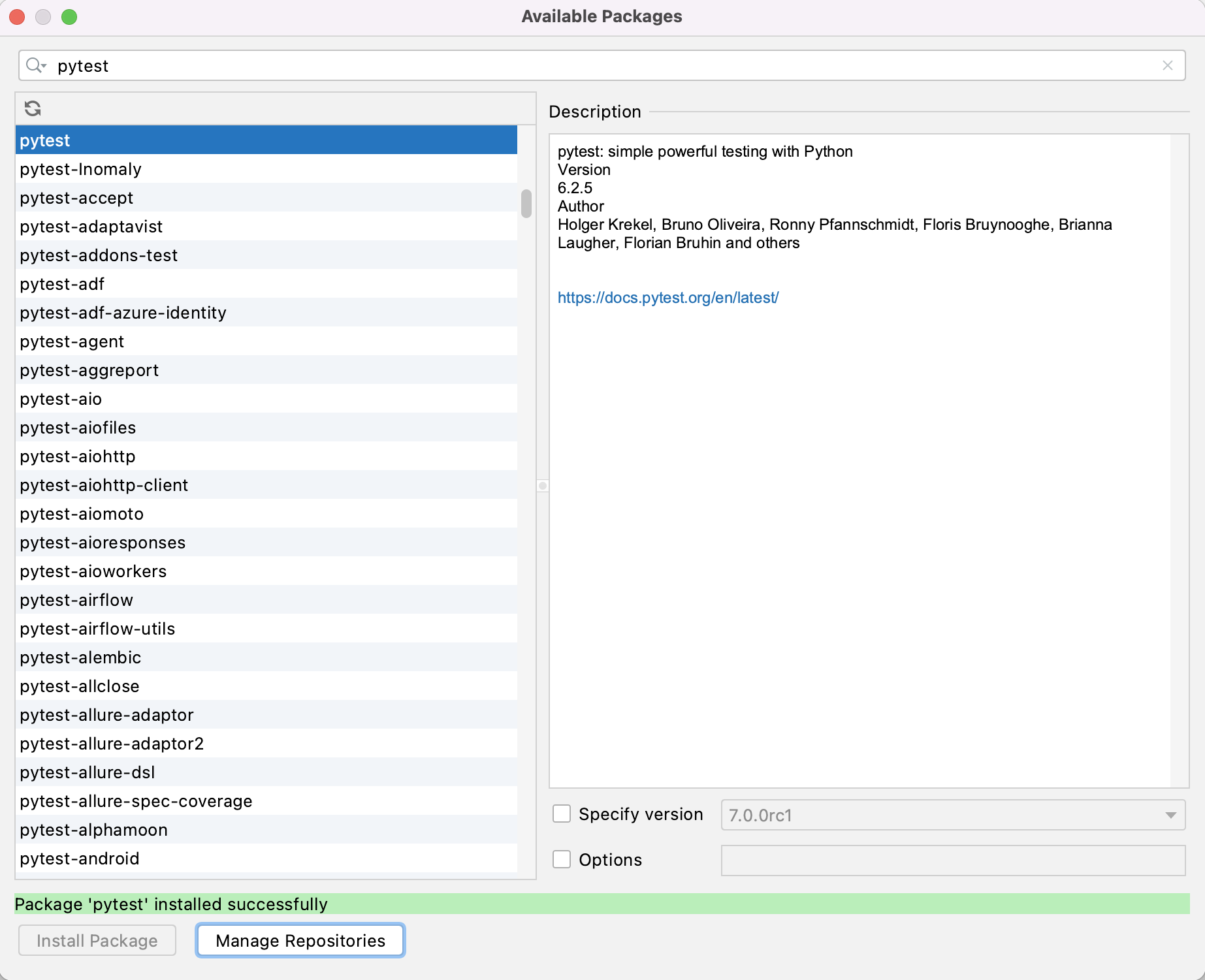

Adding an External Library

We have already added packages numpy and matplotlib to our virtual environment

from the command line in the previous episode,

so we are up-to-date with all external libraries we require at the moment.

However, we will need library pytest soon to implement tests for our code.

We will use this opportunity to install it from PyCharm in order to see

an alternative way of doing this and how it propagates to the command line.

- Select either

PyCharm>Preferences(Mac) orFile>Settings(Linux, Windows). - In the preferences window that appears,

select

Project: python-intermediate-inflammation>Project Interpreterfrom the left. - Select the

+icon at the top of the window. In the window that appears, search for the name of the library (pytest), select it from the list, then selectInstall Package. Once it finishes installing, you can close that window.

- Select

OKin thePreferences/Settingswindow.

It may take a few minutes for PyCharm to install it.

After it is done, the pytest library is added to our virtual environment.

You can also verify this from the command line by

listing the venv/lib/python3.9/site-packages subdirectory.

Note, however, that requirements.txt is not updated -

as we mentioned earlier this is something you have to do manually.

Let’s do this as an exercise.

Exercise: Update

requirements.txtAfter Adding a New DependencyExport the newly updated virtual environment into

requirements.txtfile.Solution

Let’s verify first that the newly installed library

pytestis appearing in our virtual environment but not inrequirements.txt. First, let’s check the list of installed packages:(venv) $ pip3 listPackage Version --------------- ------- attrs 21.4.0 cycler 0.11.0 fonttools 4.28.5 iniconfig 1.1.1 kiwisolver 1.3.2 matplotlib 3.5.1 numpy 1.22.0 packaging 21.3 Pillow 9.0.0 pip 20.0.2 pluggy 1.0.0 py 1.11.0 pyparsing 3.0.7 pytest 6.2.5 python-dateutil 2.8.2 setuptools 44.0.0 six 1.16.0 toml 0.10.2 tomli 2.0.0We can see the

pytestlibrary appearing in the listing above. However, if we do:(venv) $ cat requirements.txtcycler==0.11.0 fonttools==4.28.1 kiwisolver==1.3.2 matplotlib==3.5.0 numpy==1.21.4 packaging==21.2 Pillow==8.4.0 pyparsing==2.4.7 python-dateutil==2.8.2 setuptools-scm==6.3.2 six==1.16.0 tomli==1.2.2

pytestis missing fromrequirements.txt. To add it, we need to update the file by repeating the command:(venv) $ pip3 freeze > requirements.txt

pytestis now present inrequirements.txt:attrs==21.2.0 cycler==0.11.0 fonttools==4.28.1 iniconfig==1.1.1 kiwisolver==1.3.2 matplotlib==3.5.0 numpy==1.21.4 packaging==21.2 Pillow==8.4.0 pluggy==1.0.0 py==1.11.0 pyparsing==2.4.7 pytest==6.2.5 python-dateutil==2.8.2 setuptools-scm==6.3.2 six==1.16.0 toml==0.10.2 tomli==1.2.2

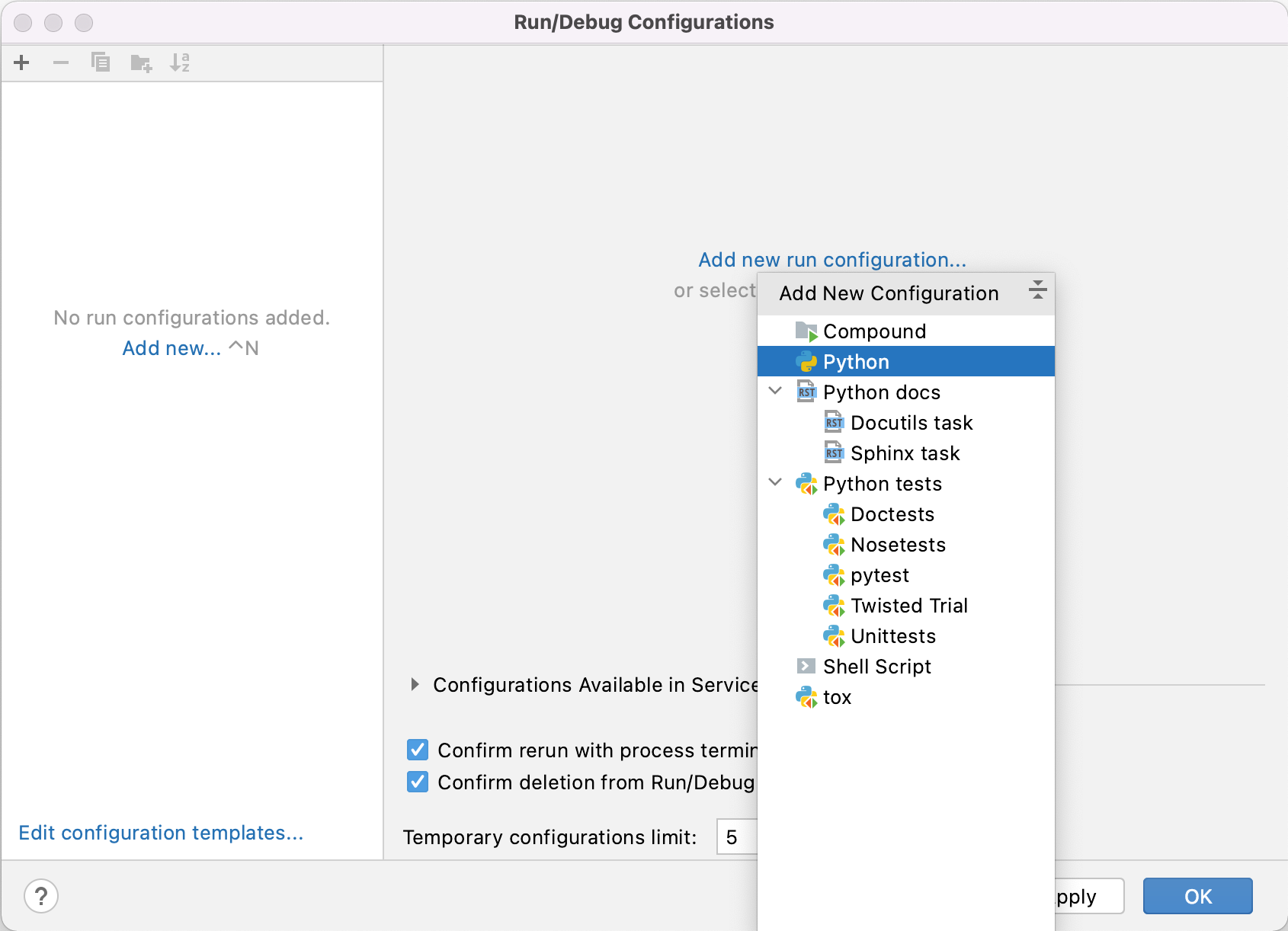

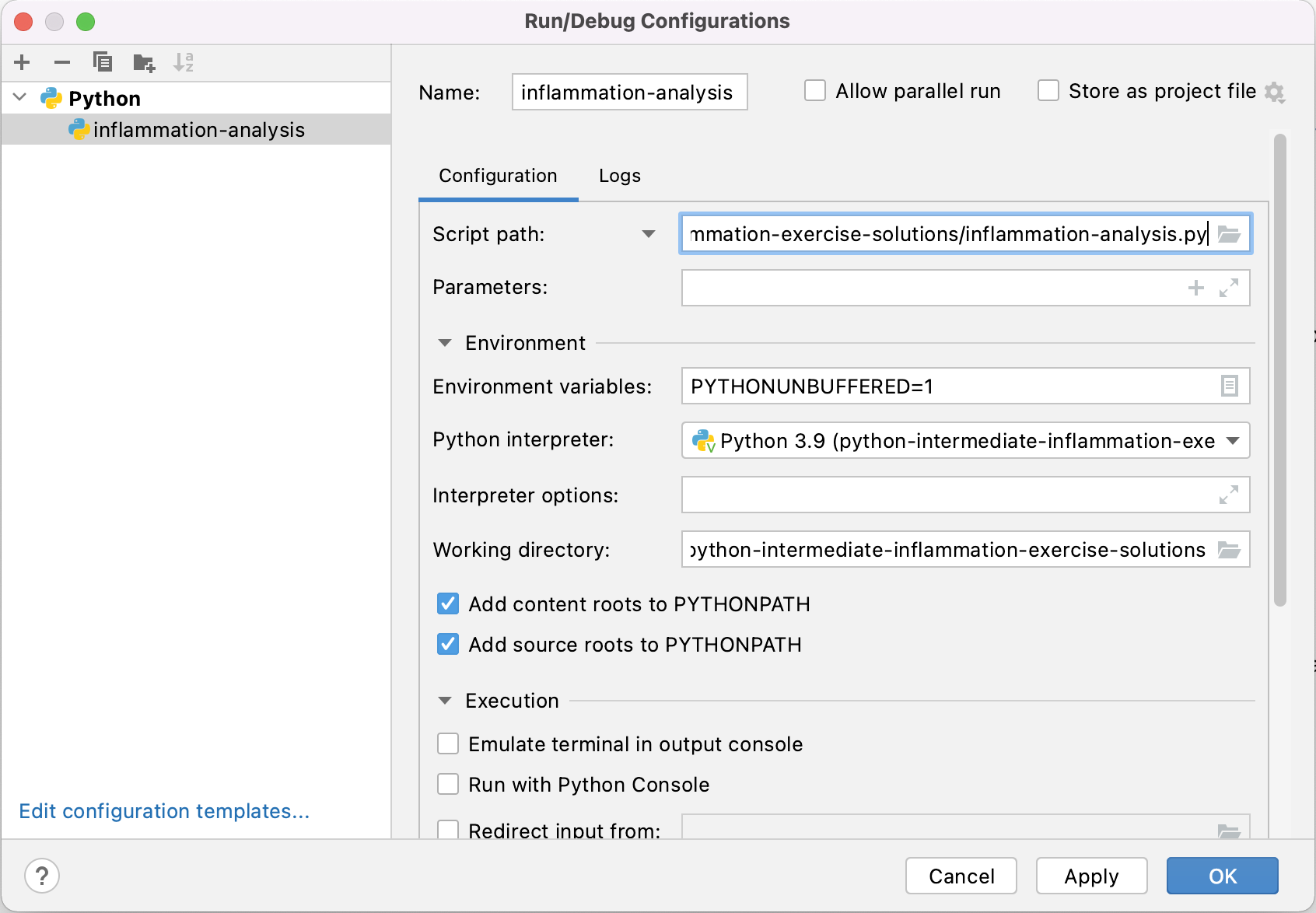

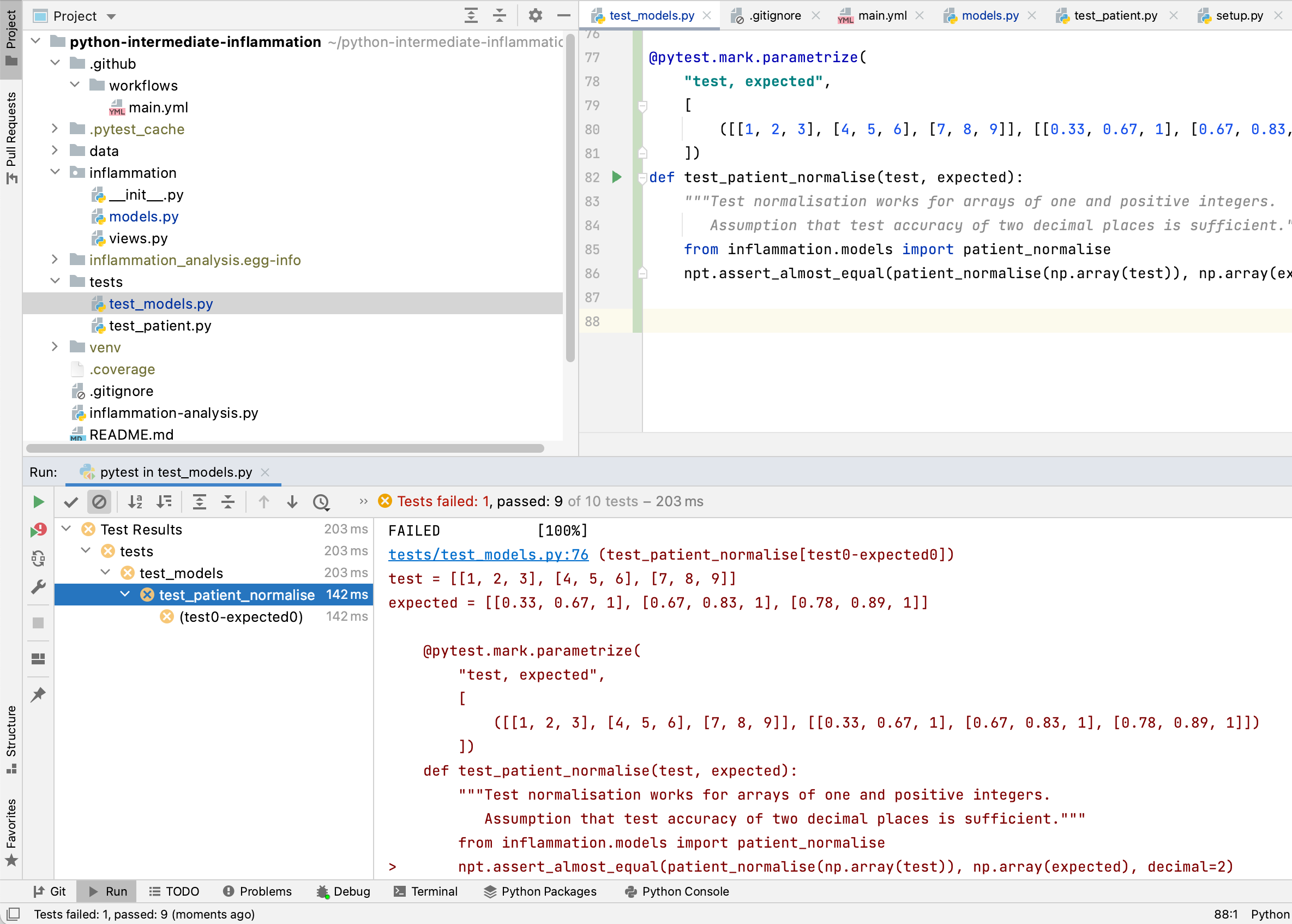

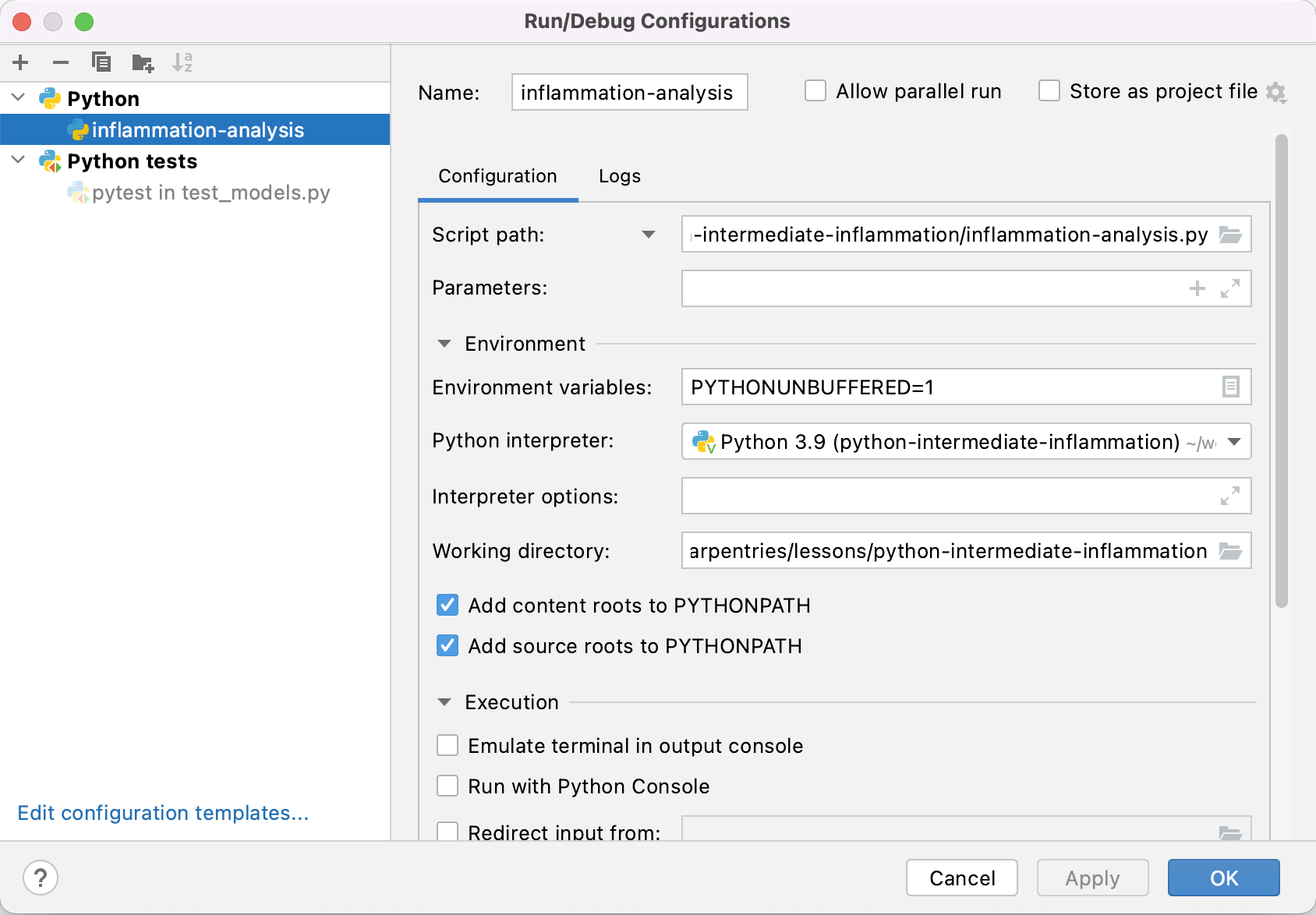

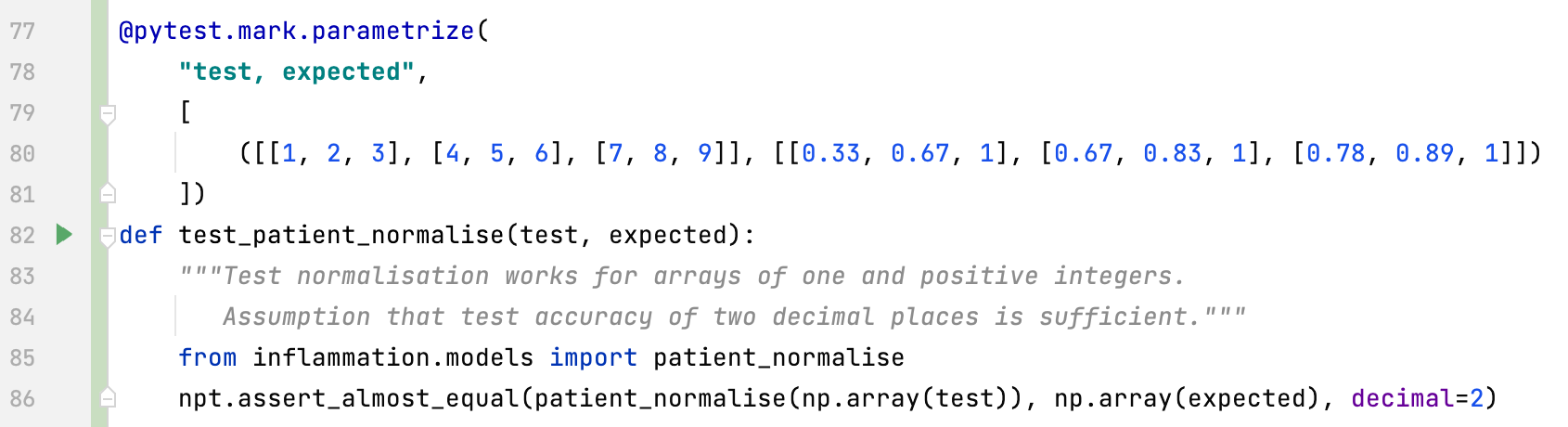

Adding a Run Configuration for Our Project

Having configured a virtual environment, we now need to tell PyCharm to use it for our project. This is done by adding a Run Configuration to a project:

- To add a new configuration for a project -

select

Run>Edit Configurations...from the top menu. - Select

Add new run configuration...thenPython.

- In the new popup window, in the

Script pathfield select the folder button and find and selectinflammation-analysis.py. This tells PyCharm which script to run (i.e. what the main entry point to our application is).

- In the same window, select “Python 3.9 (python-intermediate-inflammation)”

(i.e. the virtual environment and interpreter you configured earlier in this episode)

in the

Python interpreterfield. - You can give this run configuration a name at the top of the window if you like -

e.g. let’s name it

inflammation analysis. - You can optionally configure run parameters and environment variables in the same window - we do not need this at the moment.

- Select

Applyto confirm these settings.

Virtual Environments & Run Configurations in PyCharm

We configured the Python interpreter to use for our project by pointing PyCharm to the virtual environment we created from the command line (which also includes external libraries our code needs to run). Recall that you can create several virtual environments based on the same Python interpreter but with different external libraries - this is helpful when you need to develop different types of applications. For example, you can create one virtual environment based on Python 3.9 to develop Django Web applications and another virtual environment based on the same Python 3.9 to work with scientific libraries.

Run Configurations in PyCharm are named sets of startup properties that define what to execute and what parameters (i.e. what additional configuration options) to use on top of virtual environments. You can vary these configurations each time your code is executed, which is particularly useful for running, debugging and testing your code.

Now you know how to configure and manipulate your environment in both tools (command line and PyCharm), which is a useful parallel to be aware of. Let’s have a look at some other features afforded to us by PyCharm.

Syntax Highlighting

The first thing you may notice is that code is displayed using different colours. Syntax highlighting is a feature that displays source code terms in different colours and fonts according to the syntax category the highlighted term belongs to. It also makes syntax errors visually distinct. Highlighting does not affect the meaning of the code itself - it’s intended only for humans to make reading code and finding errors easier.

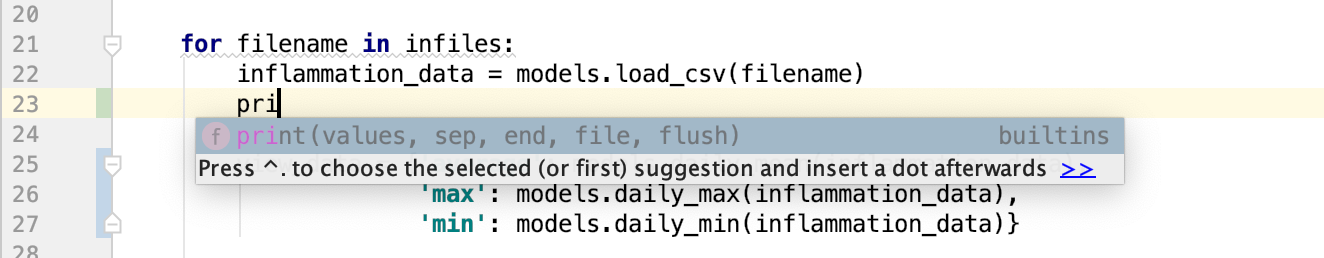

Code Completion

As you start typing code, PyCharm will offer to complete some of the code for you in the form of an auto completion popup. This is a context-aware code completion feature that speeds up the process of coding (e.g. reducing typos and other common mistakes) by offering available variable names, functions from available packages, parameters of functions, hints related to syntax errors, etc.

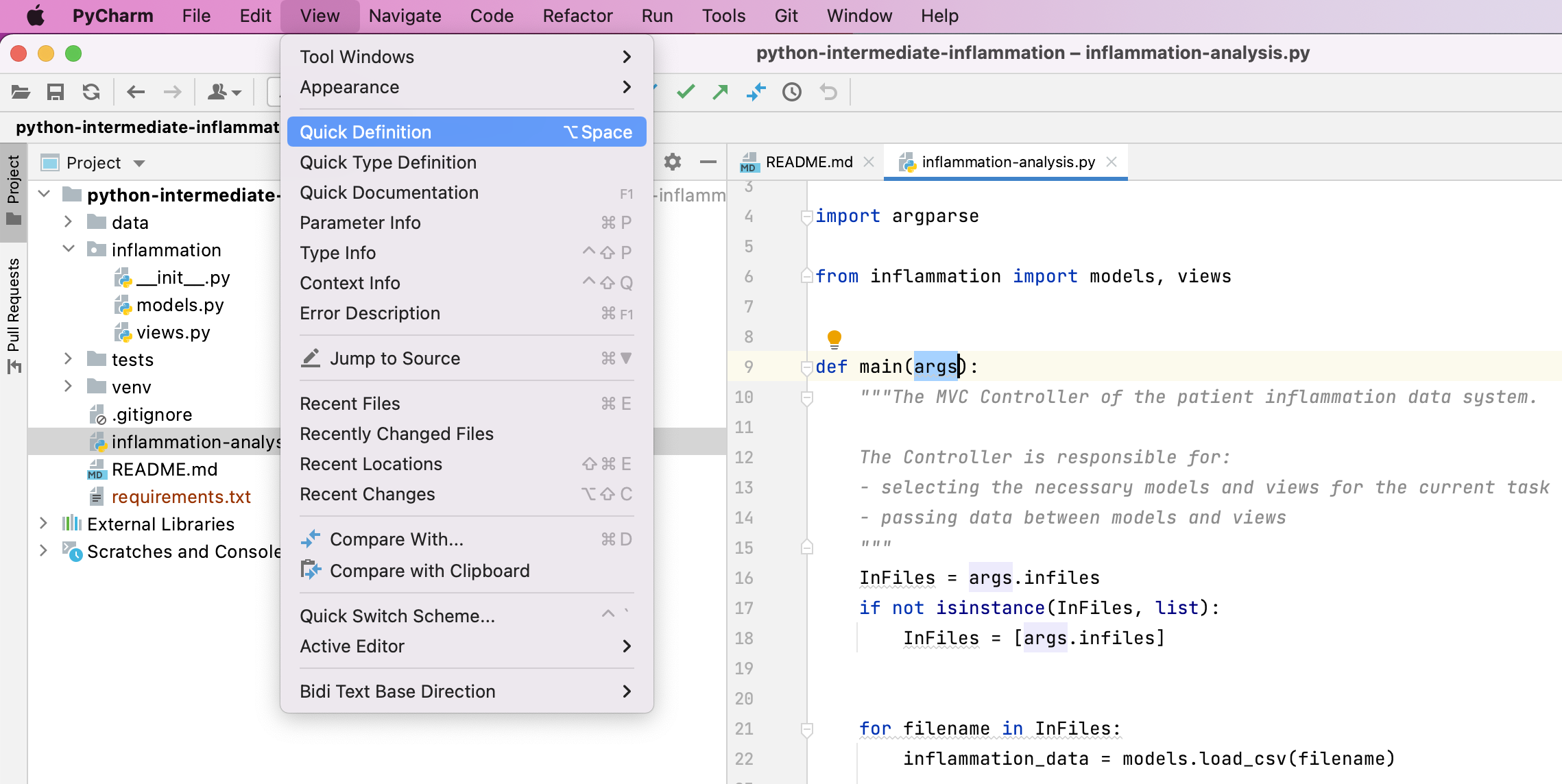

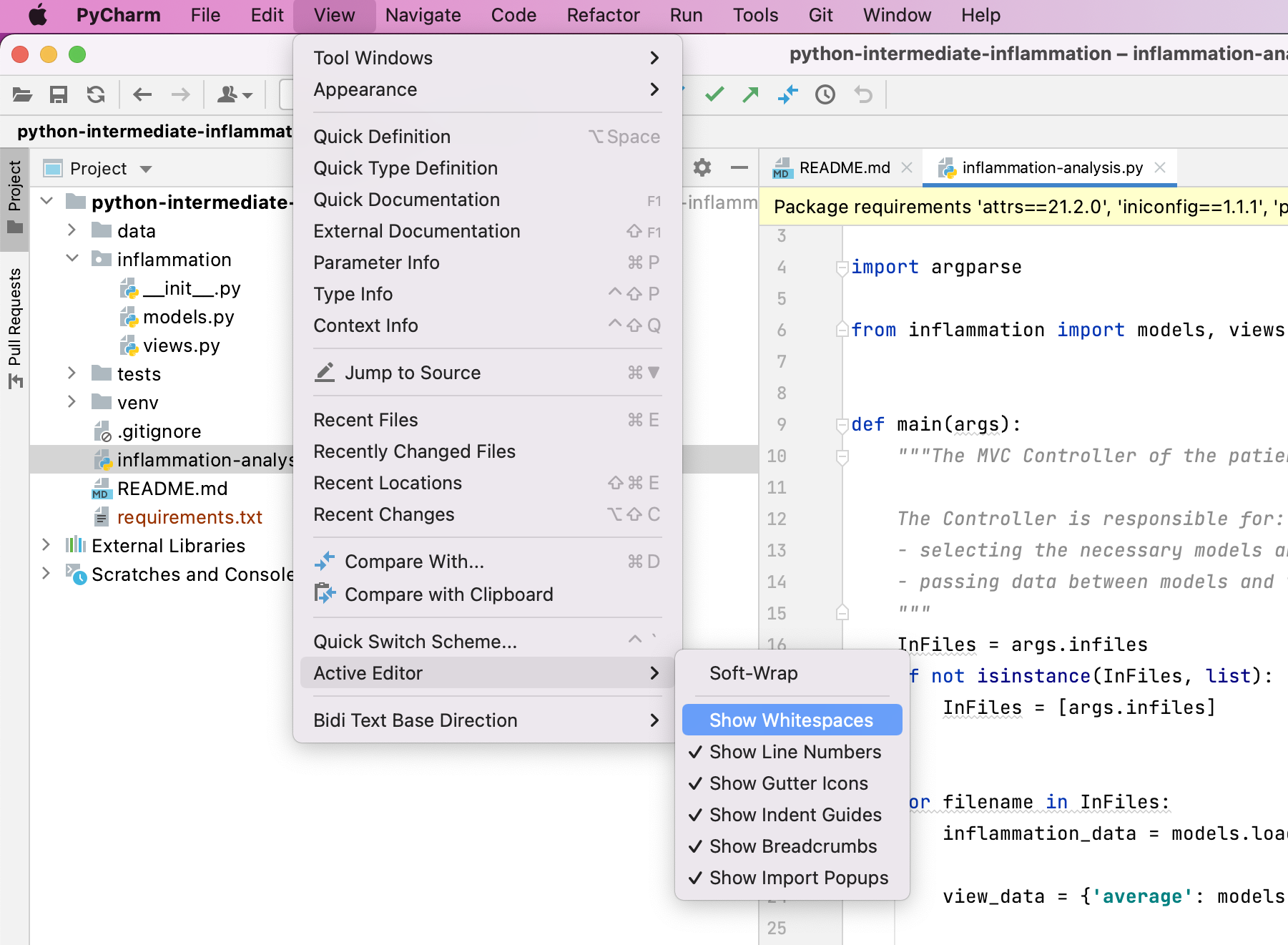

Code Definition & Documentation References

You will often need code reference information to help you code. PyCharm shows this useful information, such as definitions of symbols (e.g. functions, parameters, classes, fields, and methods) and documentation references by means of quick popups and inline tooltips.

For a selected piece of code,

you can access various code reference information from the View menu

(or via various keyboard shortcuts),

including:

- Quick Definition - where and how symbols (functions, parameters, classes, fields, and methods) are defined

- Quick Type Definition - type definition of variables, fields or any other symbols

- Quick Documentation - inline documentation (docstrings for any symbol created in accordance with PEP-257

- Parameter Info - the names and expected types of parameters in method and function calls. Use this when cursor is on the argument of a function call.

- Type Info - type of an expression

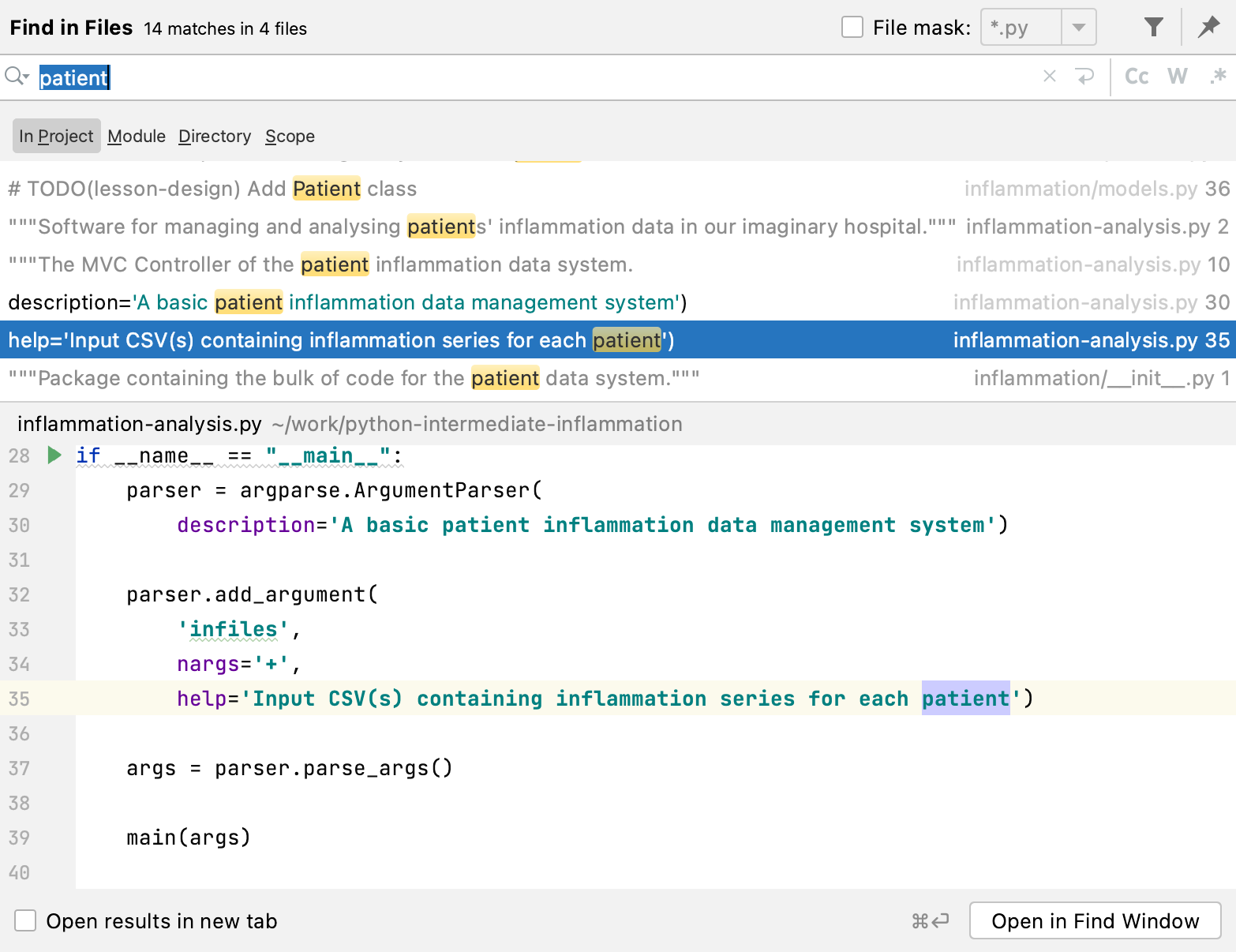

Code Search

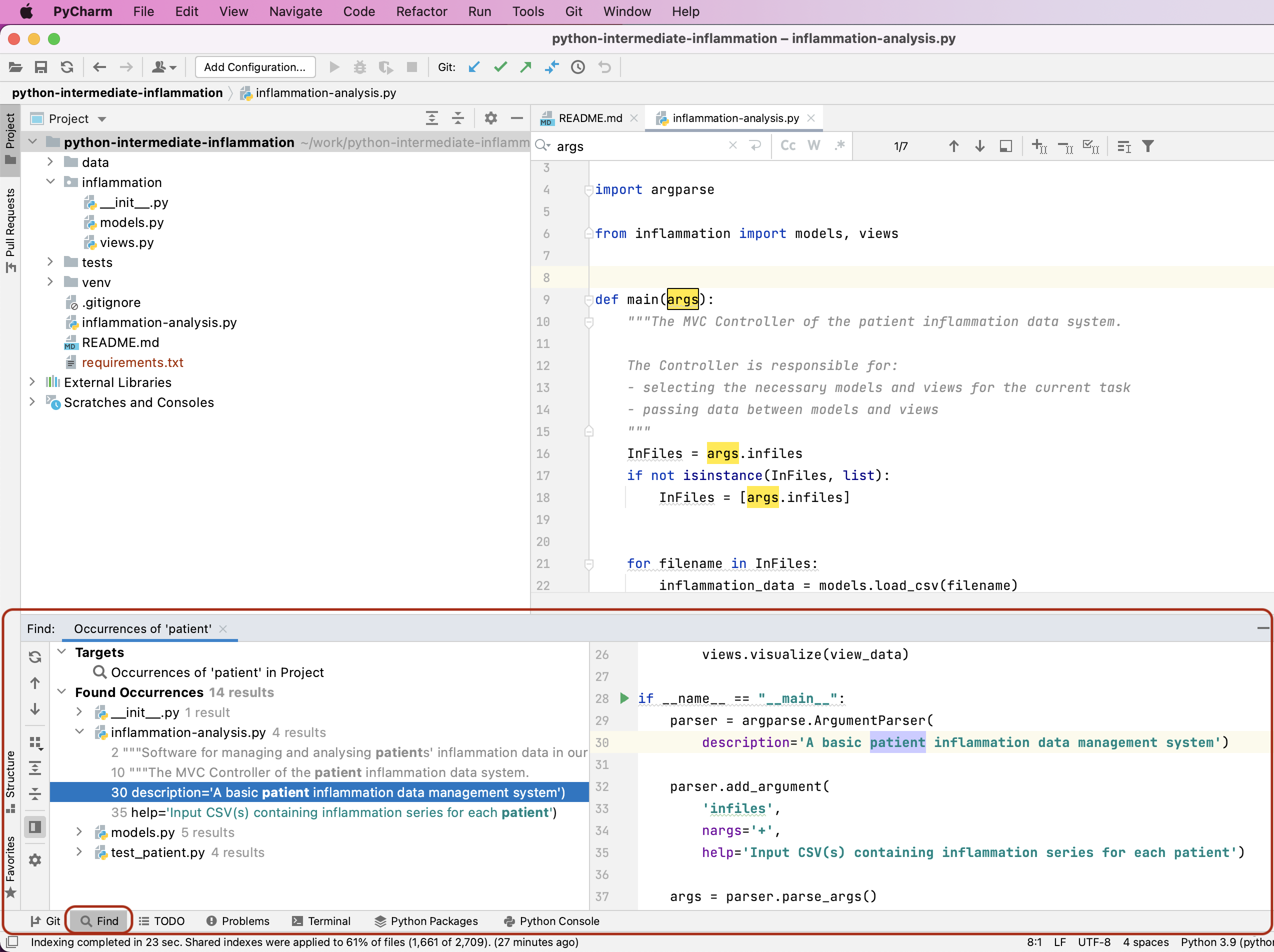

You can search for a text string within a project, use different scopes to narrow your search process, use regular expressions for complex searches, include/exclude certain files from your search, find usages and occurrences. To find a search string in the whole project:

- From the main menu,

select

Edit | Find | Find in Path ...(orEdit | Find | Find in Files...depending on your version of PyCharm). -

Type your search string in the search field of the popup. Alternatively, in the editor, highlight the string you want to find and press

Command-Shift-F(on Mac) orControl-Shift-F(on Windows). PyCharm places the highlighted string into the search field of the popup. If you need, specify the additional options in the popup.

PyCharm will list the search strings and all the files that contain them.

If you need, specify the additional options in the popup.

PyCharm will list the search strings and all the files that contain them. - Check the results in the preview area of the dialog where you can replace the search string

or select another string,

or press

Command-Shift-F(on Mac) orControl-Shift-F(on Windows) again to start a new search. -

To see the list of occurrences in a separate panel, click the

Open in Find Windowbutton in the bottom right corner. The find panel will appear at the bottom of the main window; use this panel and its options to group the results, preview them, and work with them further.

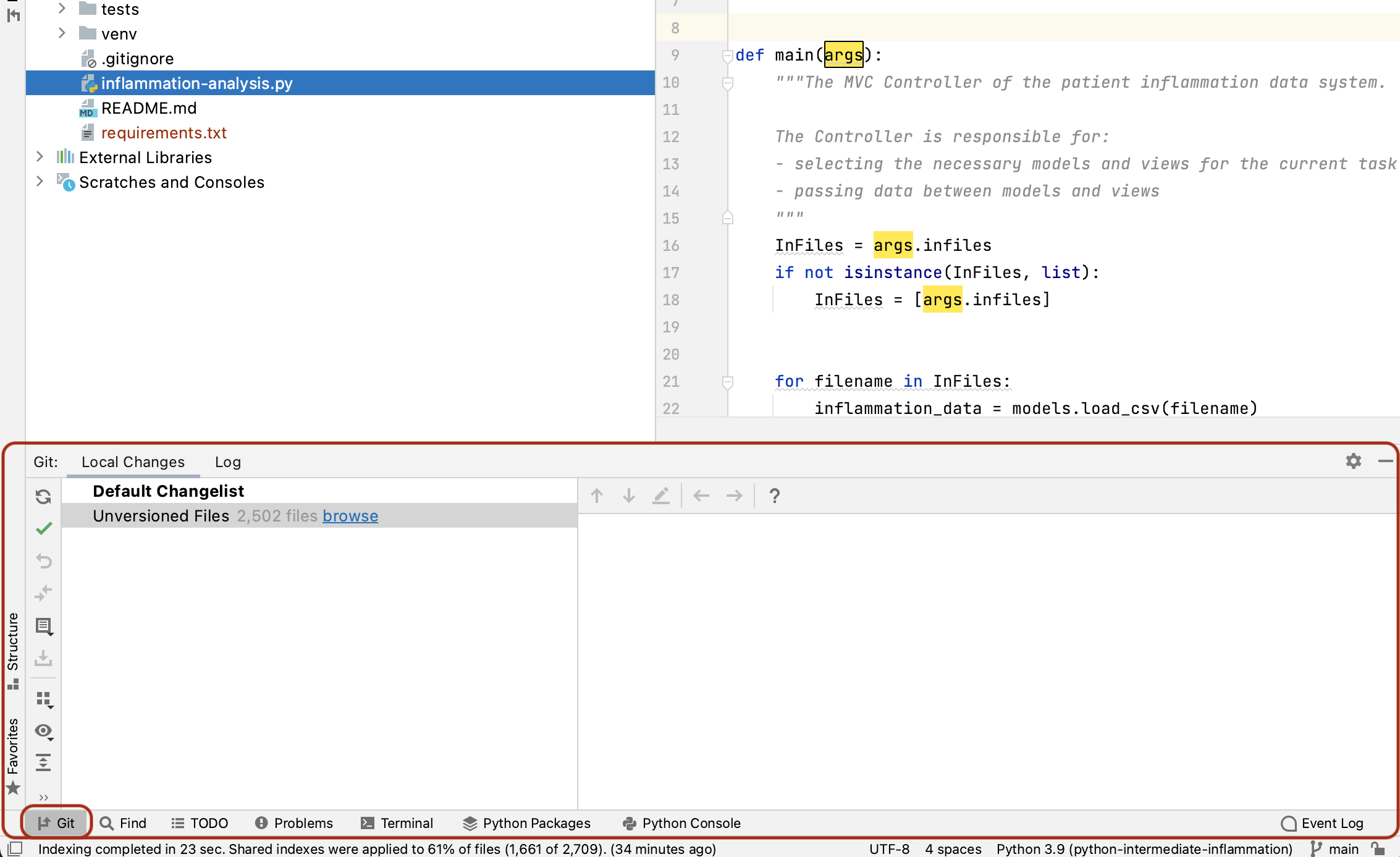

Version Control

PyCharm supports a directory-based versioning model, which means that each project directory can be associated with a different version control system. Our project was already under Git version control and PyCharm recognised it. It is also possible to add an unversioned project directory to version control directly from PyCharm.

During this course, we will do all our version control commands from the command line but it is worth noting that PyCharm supports a comprehensive subset of Git commands (i.e. it is possible to perform a set of common Git commands from PyCharm but not all). A very useful version control feature in PyCharm is graphically comparing changes you made locally to a file with the version of the file in a repository, a different commit version or a version in a different branch - this is something that cannot be done equally well from the text-based command line.

You can get a full documentation on PyCharm’s built-in version control support online.

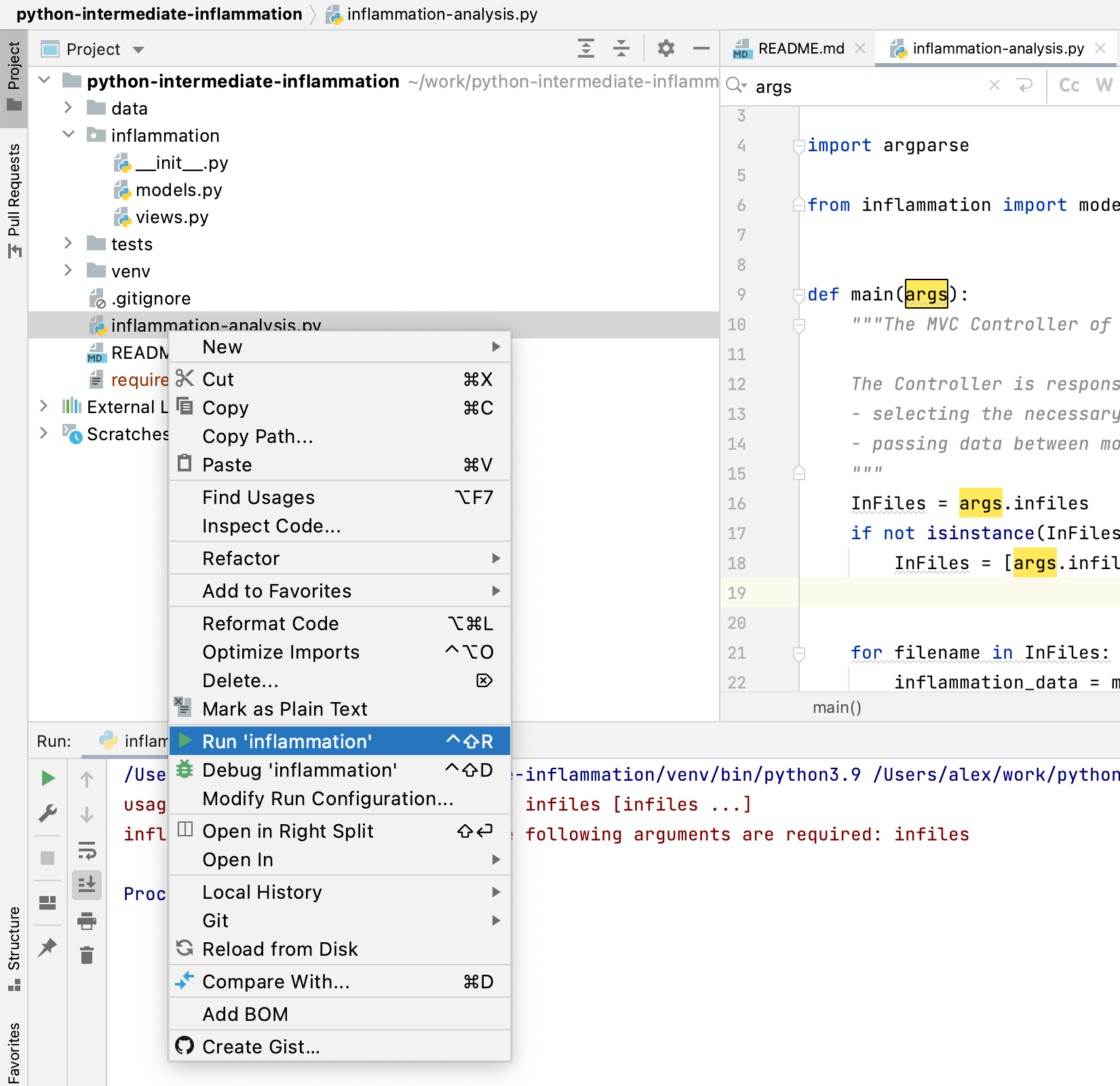

Running Scripts in PyCharm

We have configured our environment and explored some of the most commonly used PyCharm features

and are now ready to run our script from PyCharm!

To do so, right-click the inflammation-analysis.py file

in the PyCharm project/file navigator on the left,

and select Run 'inflammation analysis' (i.e. the Run Configuration we created earlier).

The script will run in a terminal window at the bottom of the IDE window and display something like:

/Users/alex/work/python-intermediate-inflammation/venv/bin/python /Users/alex/work/python-intermediate-inflammation/inflammation-analysis.py

usage: inflammation-analysis.py [-h] infiles [infiles ...]

inflammation-analysis.py: error: the following arguments are required: infiles

Process finished with exit code 2

This is the same error we got when running the script from the command line. We will get back to this error shortly - for now, the good thing is that we managed to set up our project for development both from the command line and PyCharm and are getting the same outputs. Before we move on to fixing errors and writing more code, let’s have a look at the last set of tools for collaborative code development which we will be using in this course - Git and GitHub.

Key Points

An IDE is an application that provides a comprehensive set of facilities for software development, including syntax highlighting, code search and completion, version control, testing and debugging.

PyCharm recognises virtual environments configured from the command line using

venvandpip.

Collaborative Software Development Using Git and GitHub

Overview

Teaching: 35 min

Exercises: 0 minQuestions

What are Git branches and why are they useful for code development?

What are some best practices when developing software collaboratively using Git?

Objectives

Commit changes in a software project to a local repository and publish them in a remote repository on GitHub

Create branches for managing different threads of code development

Learn to use feature branch workflow to effectively collaborate with a team on a software project

Introduction

So far we have checked out our software project from GitHub and used command line tools to configure a virtual environment for our project and run our code. We have also familiarised ourselves with PyCharm - a graphical tool we will use for code development, testing and debugging. We are now going to start using another set of tools from the collaborative code development toolbox - namely, the version control system Git and code sharing platform GitHub. These two will enable us to track changes to our code and share it with others.

You may recall that we have already made some changes to our project locally -

we created a virtual environment in the directory called “venv”

and exported it to the requirements.txt file.

We should now decide which of those changes we want to check in and share with others in our team.

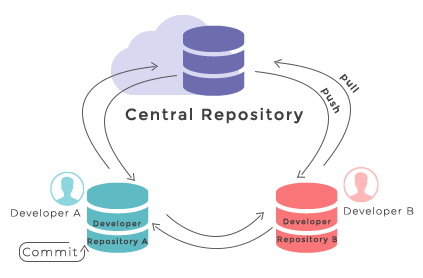

This is a typical software development workflow -

you work locally on code,

test it to make sure it works correctly and as expected,

then record your changes using version control

and share your work with others via a shared and centrally backed-up repository.

Firstly, let’s remind ourselves how to work with Git from the command line.

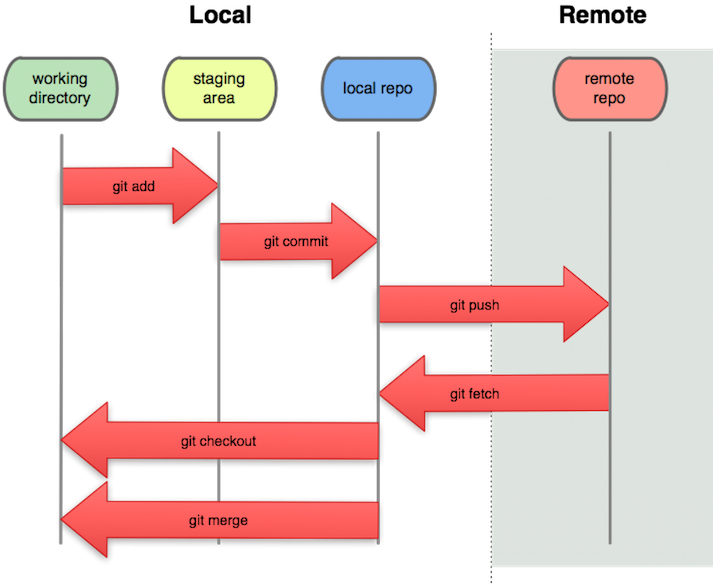

Git Refresher

Git is a version control system for tracking changes in computer files and coordinating work on those files among multiple people. It is primarily used for source code management in software development but it can be used to track changes in files in general - it is particularly effective for tracking text-based files (e.g. source code files, CSV, Markdown, HTML, CSS, Tex, etc. files).

Git has several important characteristics:

- support for non-linear development allowing you and your colleagues to work on different parts of a project concurrently,

- support for distributed development allowing for multiple people to be working on the same project (even the same file) at the same time,

- every change recorded by Git remains part of the project history and can be retrieved at a later date, so even if you make a mistake you can revert to a point before it.